General Introduction

Pieces-OS is an open source project that reverse-engineers the gRPC streams of Pieces-OS and exposes them through a standard OpenAI-compatible API. The project is developed by Nekohy and released under GPL-3.0 for learning and exchange, not for commercial use. It is compatible with a range of models and supports one-click deployment on Vercel.

A reverse-engineered Pieces API: deploy a free API endpoint supporting Claude, GPT, and Gemini models in about one minute. A Go version is also provided.

Feature List

- gRPC stream conversion: Converts the gRPC streams of Pieces-OS into the standard OpenAI interface.

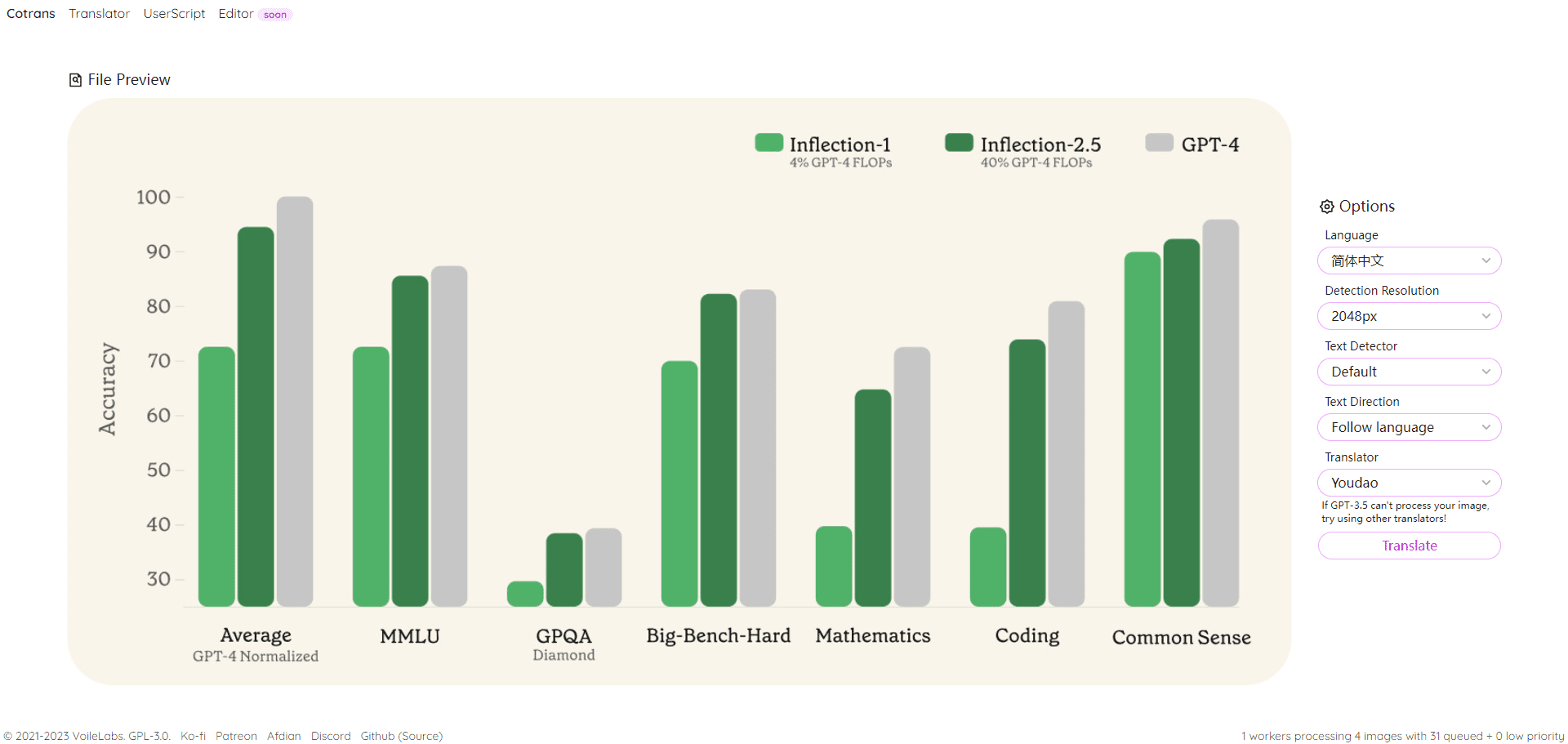

- Multi-model support: Compatible with the Claude, GPT, and Gemini series, among other models.

- One-click deployment: Supports one-click deployment on the Vercel platform, so users can set up a service quickly.

- Cloud model configuration: Provides a configuration file of cloud models so that users can pick and use different models as needed.

- API Request Management: Manage prefix paths, keys, retries, etc. for API requests by configuring environment variables.

Usage Guide

Installation process

- Clone the project: Use the git clone command to clone the project locally.

    git clone https://github.com/Nekohy/pieces-os.git

- Install dependencies: Go to the project directory and install the dependency libraries defined in package.json.

    cd pieces-os
    npm install

- Start the program: Run node index.js to start the program.

    node index.js

Usage Process

- Get the list of models: Retrieve the list of available models with the following command.

    curl --request GET 'http://127.0.0.1:8787/v1/models' --header 'Content-Type: application/json'

- Send a request: Use the following command to send a chat request.

    curl --request POST 'http://127.0.0.1:8787/v1/chat/completions' --header 'Content-Type: application/json' --data '{ "messages": [ { "role": "user", "content": "Hello!" } ], "model": "gpt-4o", "stream": true }'
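The same chat request can be issued from Node.js. The following is a minimal sketch rather than code from the project: it assumes Node 18+ (for the global fetch), that the server emits OpenAI-style server-sent events ("data: {...}" lines ending with "data: [DONE]"), and that a configured API_KEY is sent as a Bearer token. The helper names buildChatRequest, extractDeltas, and chat are hypothetical.

```javascript
// Sketch of a streaming client for the endpoints shown above.
// Assumption: the server emits OpenAI-style SSE chunks; not confirmed by the project docs.

const BASE_URL = 'http://127.0.0.1:8787'; // default address from the curl examples

// Build the fetch() options for a chat completion request.
function buildChatRequest(content, model = 'gpt-4o', apiKey = '') {
  const headers = { 'Content-Type': 'application/json' };
  // Assumption: when API_KEY is set on the server, it is sent as a Bearer token.
  if (apiKey) headers.Authorization = `Bearer ${apiKey}`;
  return {
    method: 'POST',
    headers,
    body: JSON.stringify({
      messages: [{ role: 'user', content }],
      model,
      stream: true,
    }),
  };
}

// Extract and concatenate the text deltas from a block of complete SSE lines.
function extractDeltas(sseText) {
  const parts = [];
  for (const line of sseText.split('\n')) {
    const trimmed = line.trim();
    if (!trimmed.startsWith('data:')) continue;
    const payload = trimmed.slice('data:'.length).trim();
    if (payload === '' || payload === '[DONE]') continue;
    const chunk = JSON.parse(payload);
    const delta = chunk.choices?.[0]?.delta?.content;
    if (typeof delta === 'string') parts.push(delta);
  }
  return parts.join('');
}

// Send the request and print the reply as it streams in.
async function chat(content) {
  const res = await fetch(`${BASE_URL}/v1/chat/completions`, buildChatRequest(content));
  if (!res.ok) throw new Error(`HTTP ${res.status}`);
  const decoder = new TextDecoder();
  let buffer = '';
  for await (const bytes of res.body) {
    buffer += decoder.decode(bytes, { stream: true });
    const lastNewline = buffer.lastIndexOf('\n');
    if (lastNewline === -1) continue; // no complete line yet
    process.stdout.write(extractDeltas(buffer.slice(0, lastNewline)));
    buffer = buffer.slice(lastNewline + 1); // keep the unfinished line
  }
  process.stdout.write(extractDeltas(buffer) + '\n');
}
```

With the service running locally, calling chat('Hello!') should print the reply as it arrives. Only complete lines are parsed, so a JSON chunk split across two network reads is not decoded prematurely.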

Environment variable configuration

- API_PREFIX: Prefix path for API requests; the default value is '/'.

- API_KEY: The key for API requests; defaults to an empty string.

- MAX_RETRY_COUNT: Maximum number of retries; defaults to 3.

- RETRY_DELAY: Retry delay in milliseconds; the default value is 5000 (5 seconds).

- PORT: The port on which the service listens; the default value is 8787.
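To illustrate how these variables might fit together, here is a small sketch that reads them with the defaults listed above and applies the retry pair to an arbitrary async task. It is not taken from the project source: loadConfig and withRetry are hypothetical names, and it assumes MAX_RETRY_COUNT counts retries after the first attempt.

```javascript
// Sketch: read the environment variables listed above, with the documented defaults.
// Function and field names are illustrative, not taken from the project source.
function loadConfig(env = process.env) {
  const toInt = (value, fallback) => {
    const n = Number.parseInt(value, 10);
    return Number.isNaN(n) ? fallback : n;
  };
  return {
    apiPrefix: env.API_PREFIX || '/',
    apiKey: env.API_KEY || '',
    maxRetryCount: toInt(env.MAX_RETRY_COUNT, 3),
    retryDelay: toInt(env.RETRY_DELAY, 5000), // milliseconds
    port: toInt(env.PORT, 8787),
  };
}

// Sketch: run an async task once, then retry up to `maxRetryCount` more times,
// waiting `retryDelay` milliseconds between attempts.
// Assumption: MAX_RETRY_COUNT counts retries, not total attempts.
async function withRetry(task, { maxRetryCount, retryDelay }) {
  let lastError;
  for (let attempt = 0; attempt <= maxRetryCount; attempt += 1) {
    try {
      return await task(attempt);
    } catch (err) {
      lastError = err;
      if (attempt < maxRetryCount) {
        await new Promise((resolve) => setTimeout(resolve, retryDelay));
      }
    }
  }
  throw lastError; // every attempt failed
}
```

For example, starting the service with PORT=3000 node index.js would correspond to loadConfig() returning port 3000 while the other fields keep their defaults.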

Model Configuration

The project provides a configuration file, cloud_model.json, that lists multiple models; users can pick and use different models as needed. For example:

- Claude series: claude-3-5-sonnet@20240620, claude-3-haiku@20240307, etc.

- GPT series: gpt-3.5-turbo, gpt-4, gpt-4-turbo, etc.

- Gemini series: gemini-1.5-flash, gemini-1.5-pro, etc.
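As a rough illustration of picking models by series, the sketch below groups model IDs by their name prefix. The flat array of IDs is a hypothetical stand-in, since the actual layout of cloud_model.json is not described here, and groupBySeries is an illustrative helper rather than part of the project.

```javascript
// Sketch: group model IDs by series using their name prefix.
// The input is a hypothetical flat array; the real layout of cloud_model.json may differ.
const SERIES_PREFIXES = { Claude: 'claude-', GPT: 'gpt-', Gemini: 'gemini-' };

function groupBySeries(modelIds) {
  const groups = {};
  for (const id of modelIds) {
    const match = Object.entries(SERIES_PREFIXES)
      .find(([, prefix]) => id.startsWith(prefix));
    const series = match ? match[0] : 'Other';
    if (!groups[series]) groups[series] = [];
    groups[series].push(id);
  }
  return groups;
}

// The model names below are the ones listed above.
const grouped = groupBySeries([
  'claude-3-5-sonnet@20240620',
  'claude-3-haiku@20240307',
  'gpt-3.5-turbo',
  'gpt-4',
  'gpt-4-turbo',
  'gemini-1.5-flash',
  'gemini-1.5-pro',
]);
console.log(grouped);
```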

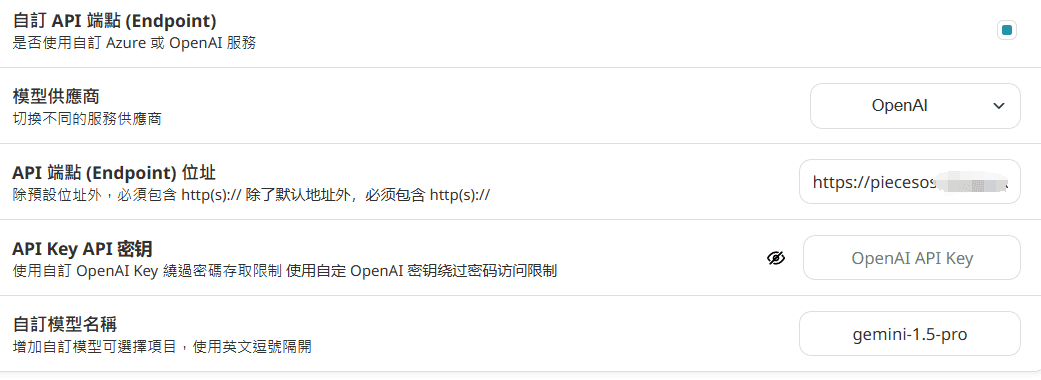

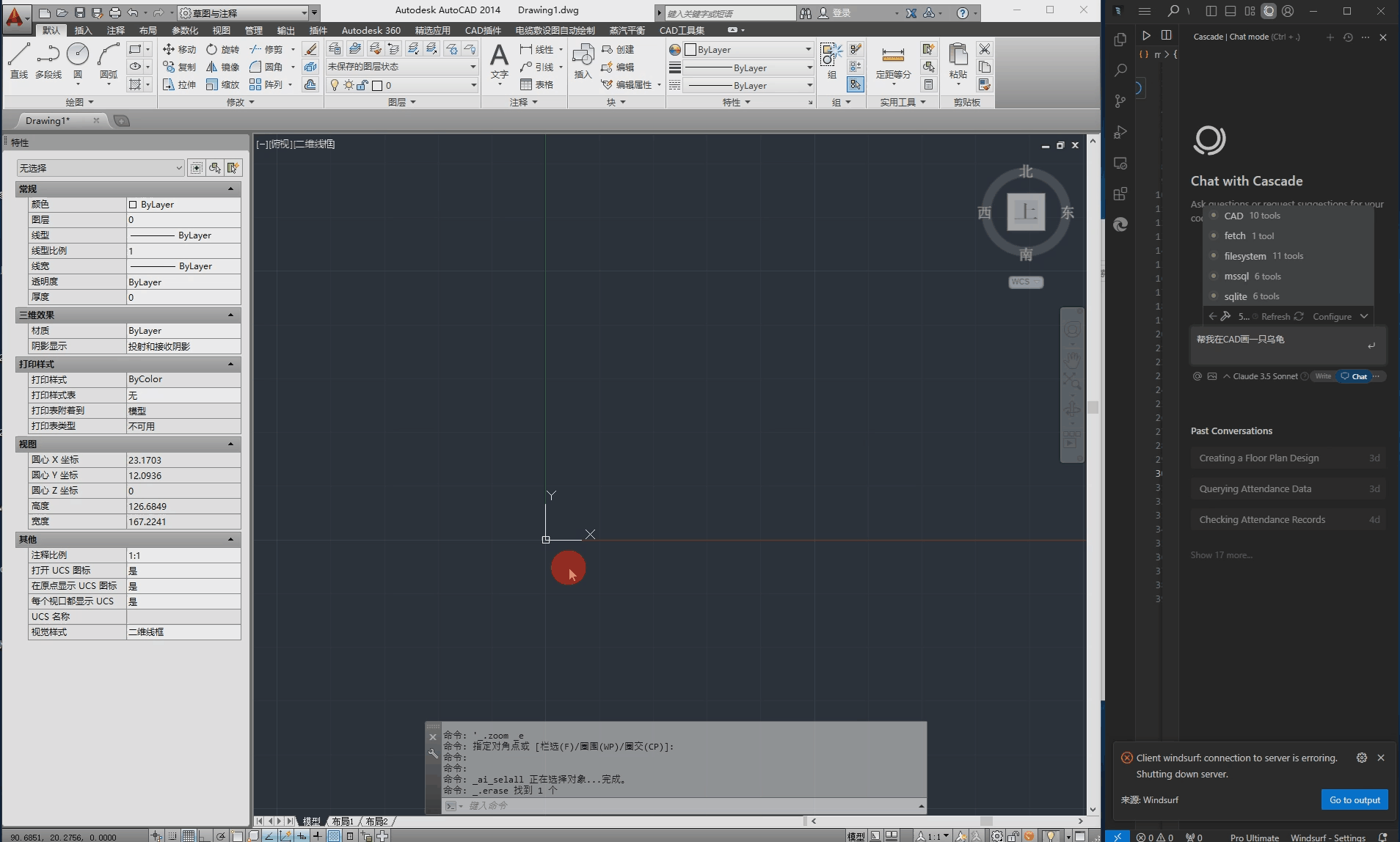

How to use it after deployment

Configuration in NextChat:

Fill in the domain name directly; do not append /v1/models or /v1/chat/completions to the URL.

If API_KEY is not configured in Vercel, no key needs to be entered.

Configuration in Immersive Translate (not recommended due to concurrency issues):

https://your-domain/v1/chat/completions

If no API key is set, leave the field blank.
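The difference between the two clients can be sketched as follows: NextChat expects only the origin, while Immersive Translate expects the full chat completions URL. The helper clientSettings and the domain example.vercel.app are hypothetical, and the sketch assumes API_PREFIX is left at its default of '/'.

```javascript
// Sketch: derive the value each client expects from a deployment URL.
// `clientSettings` is a hypothetical helper, not part of Pieces-OS or either client.
// Assumption: API_PREFIX is left at its default '/'.
function clientSettings(deploymentUrl) {
  const origin = new URL(deploymentUrl).origin; // drops any path such as /v1/models
  return {
    nextChatEndpoint: origin,
    immersiveTranslateUrl: `${origin}/v1/chat/completions`,
  };
}

// example.vercel.app is a placeholder domain.
const settings = clientSettings('https://example.vercel.app/v1/models');
console.log(settings);
```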