Petals is an open-source project developed by the BigScience Workshop for running large language models (LLMs) in a distributed fashion. Users can run and fine-tune LLMs such as Llama 3.1, Mixtral, Falcon, and BLOOM at home on consumer-grade GPUs or in Google Colab. Petals takes a BitTorrent-like approach: different parts of the model are hosted on different users' devices, which together serve inference and fine-tuning requests for models too large for any single consumer GPU.
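To make the BitTorrent-like idea concrete, here is a toy Python sketch that splits a model's transformer blocks into contiguous spans hosted by different peers and then passes an activation through those spans in order. `Peer`, `assign_blocks`, and `forward` are hypothetical names invented for this illustration, not part of the Petals API. The real system also handles peer discovery, load balancing, and failures, which this sketch ignores.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Peer:
    """Hypothetical volunteer device; `capacity` = how many blocks fit in its GPU memory."""
    name: str
    capacity: int


def assign_blocks(num_blocks: int, peers: list[Peer]) -> dict[str, range]:
    """Give each peer a contiguous span of block indices, filling peers in order.

    Raises ValueError if the peers together cannot hold every block.
    """
    spans: dict[str, range] = {}
    start = 0
    for peer in peers:
        if start >= num_blocks:
            break  # every block already has a host
        end = min(start + peer.capacity, num_blocks)
        spans[peer.name] = range(start, end)
        start = end
    if start < num_blocks:
        raise ValueError(f"only {start} of {num_blocks} blocks could be placed")
    return spans


def forward(x: float, spans: dict[str, range], block_fn: Callable[[int, float], float]) -> float:
    """Push an activation through all blocks, peer by peer, in block order."""
    # dicts keep insertion order, so peers are visited in the order their spans were assigned
    for span in spans.values():
        for i in span:
            x = block_fn(i, x)  # in a real swarm this step would be a network call to the peer
    return x
```

For example, `assign_blocks(8, [Peer("a", 3), Peer("b", 3), Peer("c", 4)])` gives peer `a` blocks 0 to 2, `b` blocks 3 to 5, and `c` blocks 6 and 7. Contiguous spans keep the number of network hops per forward pass equal to the number of peers rather than the number of blocks.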

Features

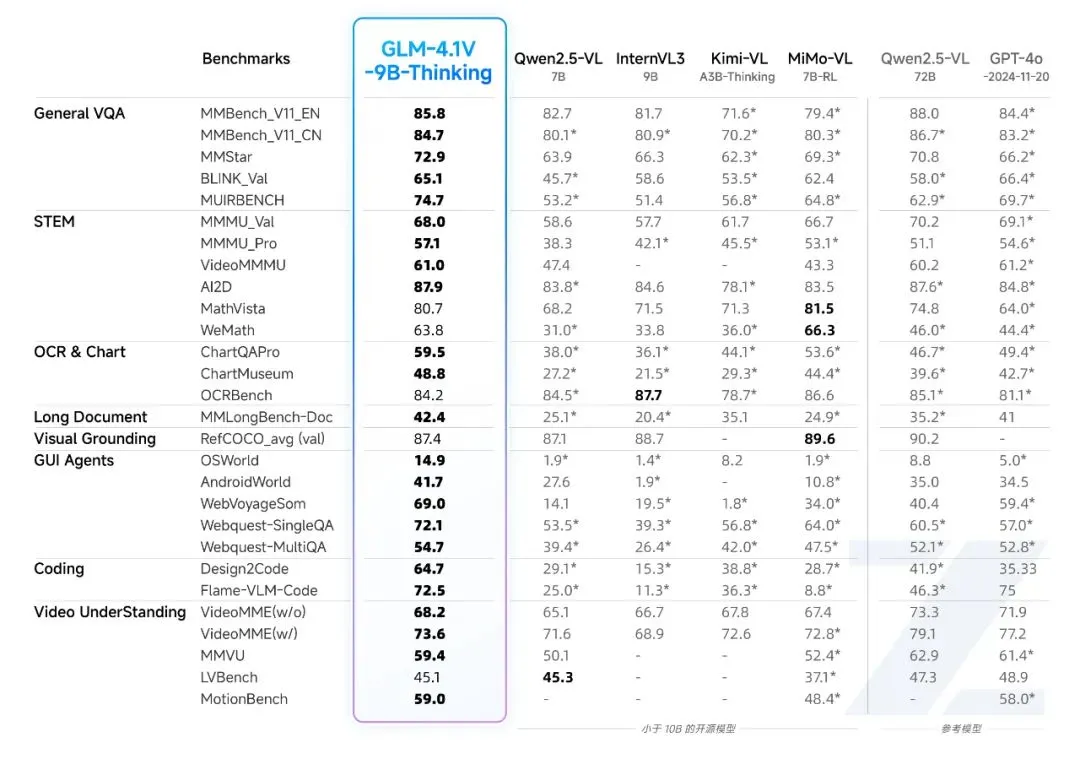

- Running large language models: supports Llama 3.1 (up to 405B), Mixtral (8x22B), Falcon (40B+), and BLOOM (176B).

- Distributed inference: runs models across a distributed network, with single-batch inference speeds of up to 6 tokens/sec for Llama 2 (70B) and up to 4 tokens/sec for Falcon (180B).

- Fast fine-tuning: users can quickly fine-tune models for a variety of tasks.

- Community-driven: Petals relies on users sharing their GPUs; anyone can contribute a GPU to increase the network's computing capacity.

- Flexible API: provides an interface similar to PyTorch and Hugging Face Transformers, with support for custom paths through the model and for inspecting hidden states.

- Privacy: by default, data is processed with the help of other participants in the public network; users handling sensitive data can set up a private network among trusted parties.
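Fine-tuning in this setting is parameter-efficient: as in the Petals prompt-tuning tutorials, the servers' pretrained weights stay frozen, while the client trains a small set of extra parameters (such as soft prompts) by backpropagating through the remote layers. The scalar toy below illustrates that idea under heavy simplification: a fixed linear function stands in for the frozen remote layers, and a single client-side offset `p` stands in for a soft prompt. `remote_forward`, `remote_backward`, and `tune_prompt` are hypothetical names, not Petals functions.

```python
FROZEN_W, FROZEN_B = 2.0, 1.0  # hypothetical stand-in for weights hosted on remote servers


def remote_forward(x: float) -> float:
    """Frozen 'server' layer: the client can call it but never updates it."""
    return FROZEN_W * x + FROZEN_B


def remote_backward(grad_out: float) -> float:
    """Gradient of the frozen layer w.r.t. its input (d/dx of w*x + b is w)."""
    return grad_out * FROZEN_W


def tune_prompt(inputs: list[float], targets: list[float], lr: float = 0.05, steps: int = 200) -> float:
    """Learn a client-side offset p so that remote_forward(x + p) fits the targets (MSE loss)."""
    p = 0.0  # the only trainable parameter; it lives on the client
    n = len(inputs)
    for _ in range(steps):
        grad_p = 0.0
        for x, t in zip(inputs, targets):
            y = remote_forward(x + p)
            grad_y = 2.0 * (y - t) / n         # derivative of the mean squared error w.r.t. y
            grad_p += remote_backward(grad_y)  # chain rule through the frozen layer
        p -= lr * grad_p                       # only the client parameter is updated
    return p
```

With inputs `[0.0, 1.0, 2.0, 3.0]` and targets produced by `remote_forward(x + 0.5)`, `tune_prompt` recovers `p ≈ 0.5` while `FROZEN_W` and `FROZEN_B` never change. Because only the small client-side parameters are trained, many users can fine-tune against the same shared servers at once.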

Usage Guide

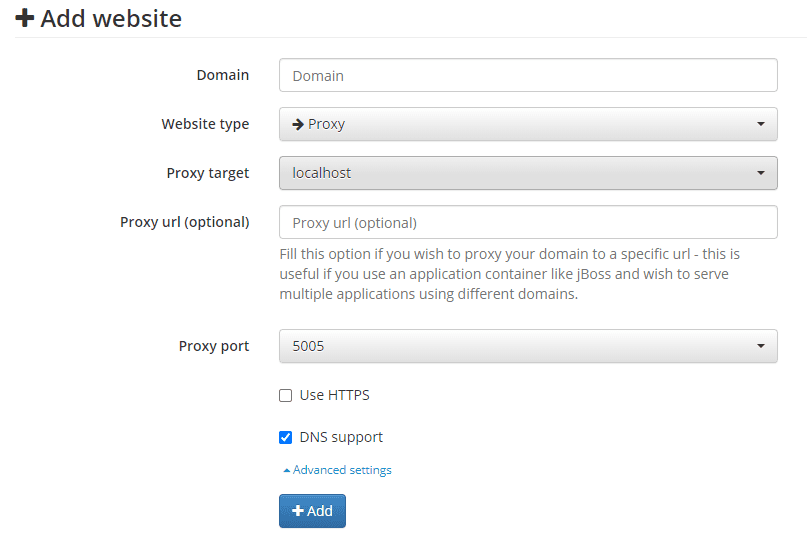

Installation and use

- Installation (in each snippet, the last command starts a Petals server that hosts part of the model on the local GPU):

- Linux + Anaconda:

```bash
conda install pytorch pytorch-cuda=11.7 -c pytorch -c nvidia
pip install git+https://github.com/bigscience-workshop/petals
python -m petals.cli.run_server meta-llama/Meta-Llama-3.1-405B-Instruct
```

- Windows + WSL: follow the guide on the Petals Wiki.

- Docker:

```bash
sudo docker run -p 31330:31330 --ipc host --gpus all --volume petals-cache:/cache --rm \
    learningathome/petals:main \
    python -m petals.cli.run_server --port 31330 meta-llama/Meta-Llama-3.1-405B-Instruct
```

- macOS + Apple M1/M2 GPUs:

```bash
brew install python
python3 -m pip install git+https://github.com/bigscience-workshop/petals
python3 -m petals.cli.run_server meta-llama/Meta-Llama-3.1-405B-Instruct
```


- Running a model:

- Choose any of the available models and use it from Python, for example:

```python
from transformers import AutoTokenizer
from petals import AutoDistributedModelForCausalLM

# Any model currently served by the Petals network can be used here
model_name = "meta-llama/Meta-Llama-3.1-405B-Instruct"

# The tokenizer runs locally; the model's transformer blocks are served by remote peers
tokenizer = AutoTokenizer.from_pretrained(model_name)
model = AutoDistributedModelForCausalLM.from_pretrained(model_name)

# Use the model as if it were running on your own machine
inputs = tokenizer("A cat sat", return_tensors="pt")["input_ids"]
outputs = model.generate(inputs, max_new_tokens=5)
print(tokenizer.decode(outputs[0]))
```

- Contributing a GPU:

- Users can increase Petals' computing capacity by connecting their own GPU, either to serve one of the available models or to host a new model from the Hugging Face Model Hub.
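The call `model.generate(inputs, max_new_tokens=5)` in the example above appends up to five tokens, one at a time; each new token requires another forward pass through the model, which in Petals means another trip through the remote servers. The sketch below shows that loop with greedy decoding in plain Python. `greedy_generate` and the `next_token_logits` callback are hypothetical stand-ins, not Petals or Transformers functions.

```python
from typing import Callable


def greedy_generate(
    prompt_ids: list[int],
    next_token_logits: Callable[[list[int]], list[float]],
    max_new_tokens: int,
) -> list[int]:
    """Append max_new_tokens tokens, one per step, always choosing the highest-scoring token.

    next_token_logits stands in for one full forward pass through the model;
    in a distributed setup that pass would traverse every remote server.
    """
    ids = list(prompt_ids)  # copy so the caller's prompt is not modified
    for _ in range(max_new_tokens):
        logits = next_token_logits(ids)
        best = max(range(len(logits)), key=logits.__getitem__)  # argmax over the vocabulary
        ids.append(best)
    return ids
```

With a toy "model" whose logits always favour `(last_token + 1) % 10`, `greedy_generate([7], toy, 5)` returns `[7, 8, 9, 0, 1, 2]`. Real implementations cache attention keys and values so each step processes only the newest token, and `generate` may sample instead of taking the argmax depending on the model's generation settings; the sketch ignores both. The one-pass-per-token structure is why distributed inference speed is quoted in tokens per second.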

Main workflow

- Select a model: visit the Petals website and choose the model you want.

- Load the model: install Petals and load the model following the installation steps above.

- Fine-tune the model: use the Petals API to fine-tune the model for a specific task.

- Generate text: generate text over the distributed network, for example to power chatbots and other interactive applications.
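Selecting a model and generating text over the network only works if every transformer block of that model is hosted by at least one online server. The sketch below checks this and picks a chain of servers using a simple greedy interval cover over half-open block spans `[start, end)`. `find_chain` and the server table are hypothetical; the actual Petals client uses its own routing logic, which this sketch does not reproduce.

```python
def find_chain(num_blocks: int, servers: dict[str, tuple[int, int]]) -> list[tuple[str, int, int]]:
    """Choose servers whose spans [start, end) together cover blocks 0..num_blocks-1.

    Greedy interval cover: at each position, use the server that hosts that block
    and reaches furthest. Raises LookupError if some block has no host, meaning
    the swarm cannot currently serve the whole model.
    """
    chain: list[tuple[str, int, int]] = []
    pos = 0
    while pos < num_blocks:
        best = None  # (server name, end of its span)
        for name, (start, end) in servers.items():
            if start <= pos < end and (best is None or end > best[1]):
                best = (name, end)
        if best is None:
            raise LookupError(f"no server hosts block {pos}")
        name, end = best
        stop = min(end, num_blocks)
        chain.append((name, pos, stop))  # this server handles blocks pos..stop-1
        pos = stop
    return chain
```

For servers `{"s1": (0, 4), "s2": (2, 7), "s3": (4, 6), "s4": (6, 10)}` and a 10-block model, `find_chain` returns `[("s1", 0, 4), ("s2", 4, 7), ("s4", 7, 10)]`: three hops instead of four, because `s2` reaches further than `s3`. Overlapping spans give the client alternatives when a server goes offline.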