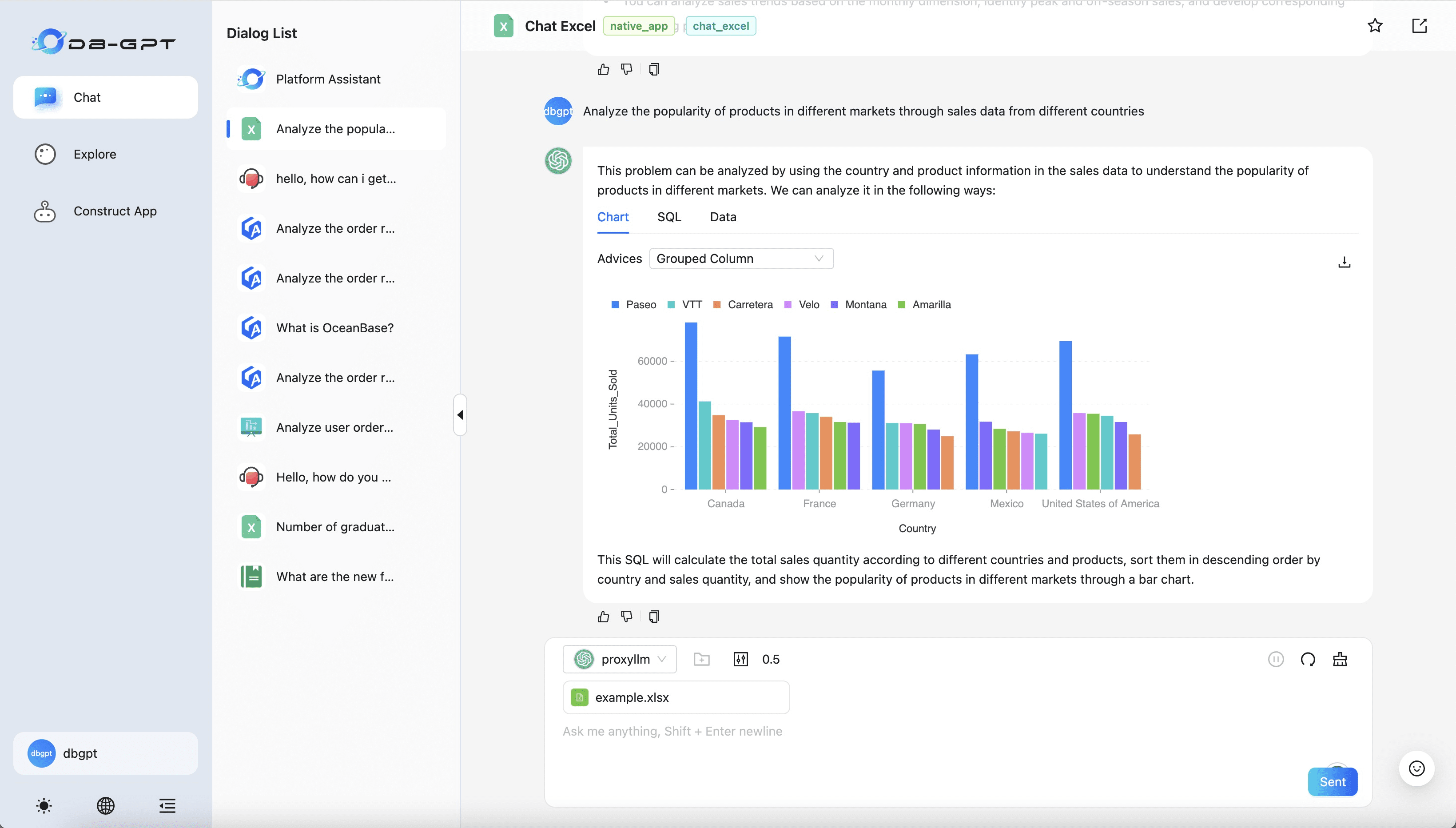

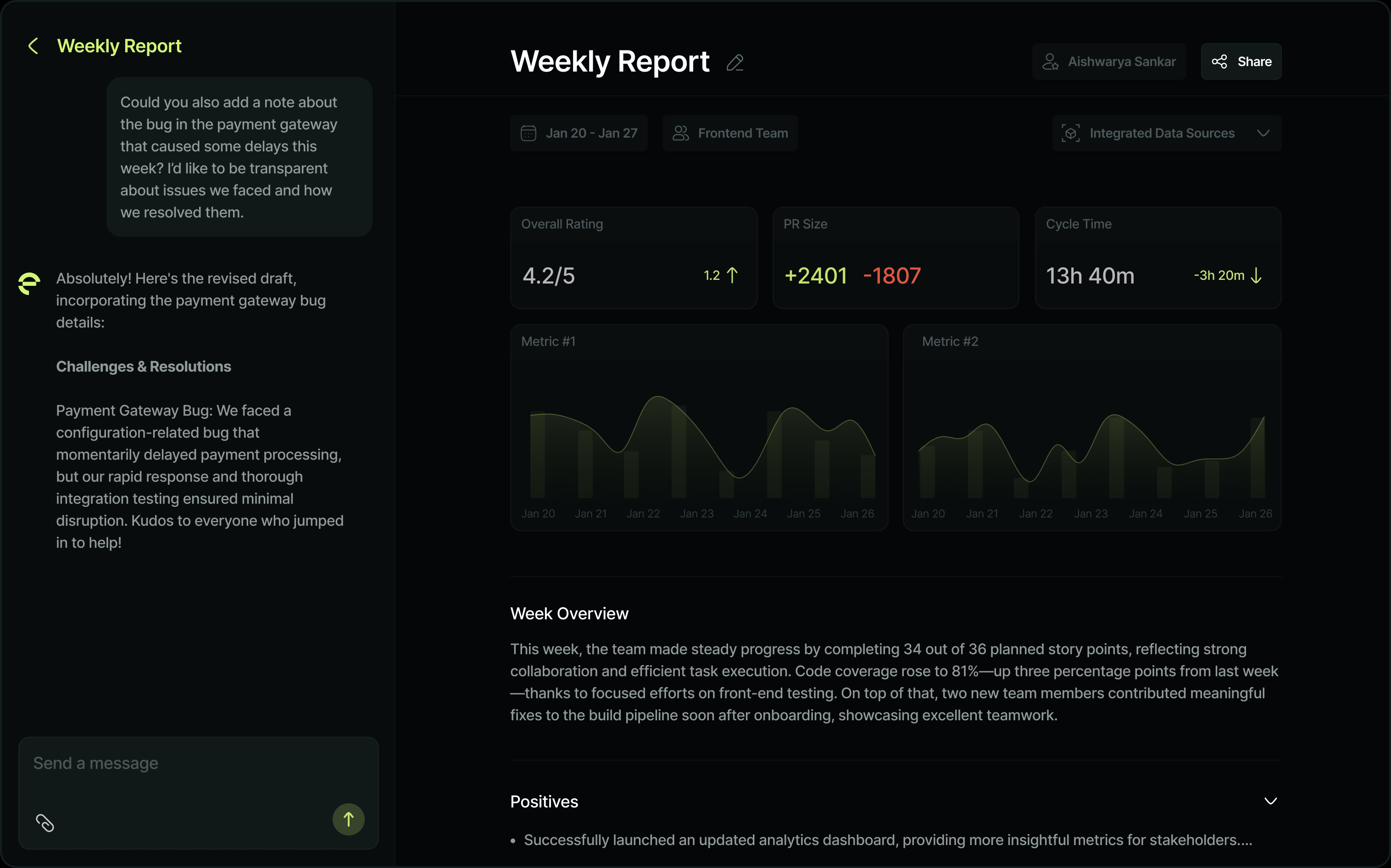

Login-free GPT-3.5 to API

https://github.com/missuo/FreeGPT35

https://github.com/aurora-develop/aurora

https://github.com/Dalufishe/freegptjs

https://github.com/PawanOsman/ChatGPT

https://github.com/nashsu/FreeAskInternet

https://github.com/aurorax-neo/free-gpt3.5-2api

https://github.com/aurora-develop/free-gpt3.5-2api

https://github.com/LanQian528/chat2api

https://github.com/k0baya/FreeGPT35-Glitch

https://github.com/cliouo/FreeGPT35-Vercel

https://github.com/hominsu/freegpt35

https://github.com/xsigoking/chatgpt-free-api

https://github.com/skzhengkai/free-chatgpt-api

https://github.com/aurora-develop/aurora-glitch (runs on Glitch resources)

https://github.com/fatwang2/coze2openai (Coze to API, GPT-4)

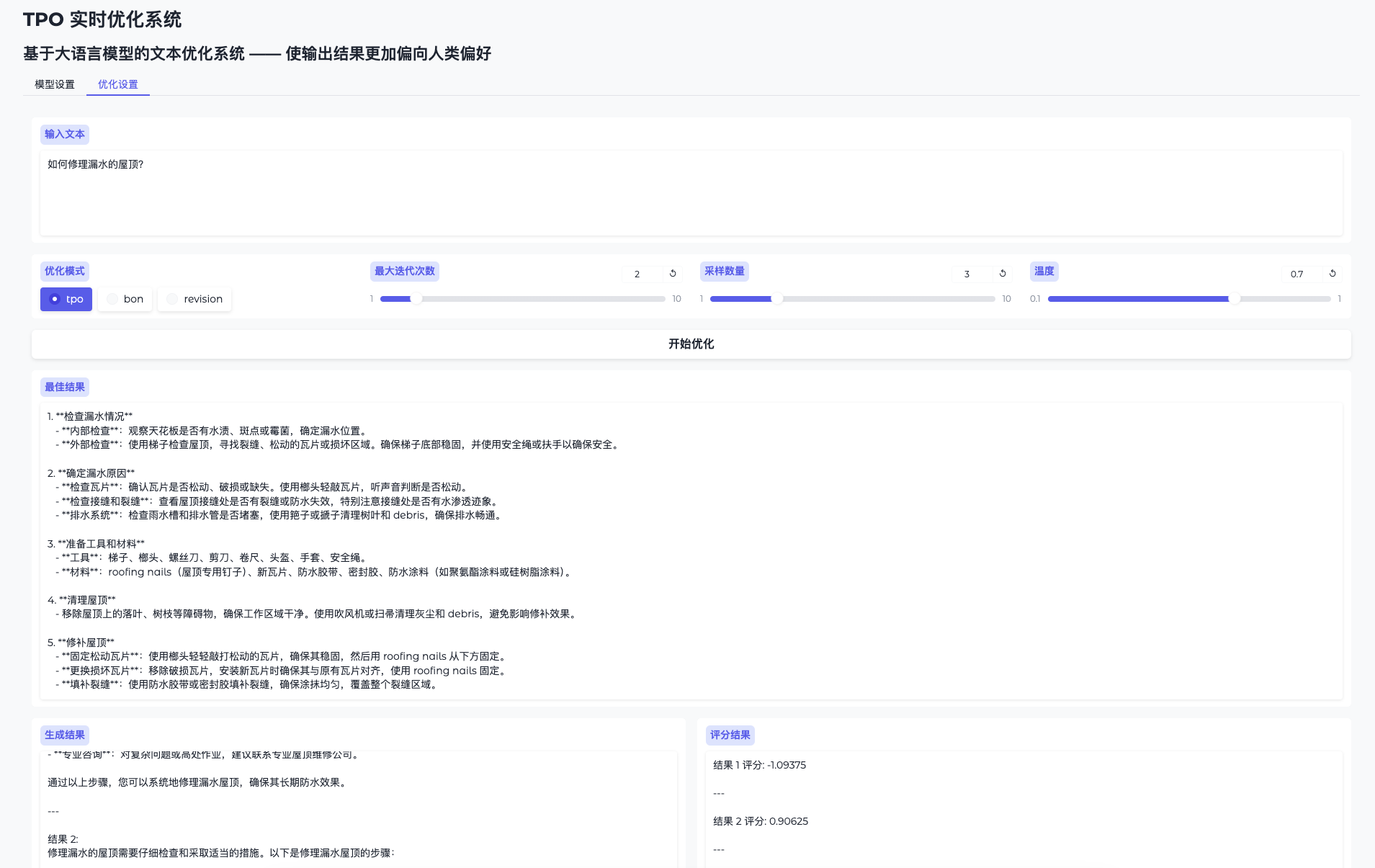

Reverse-engineered APIs for Chinese domestic models

DeepSeek interface to API: deepseek-free-api

Moonshot AI (Kimi.ai) interface to API: kimi-free-api

StepFun (Yuewen StepChat) interface to API: step-free-api

Alibaba Tongyi (Qwen) interface to API: qwen-free-api

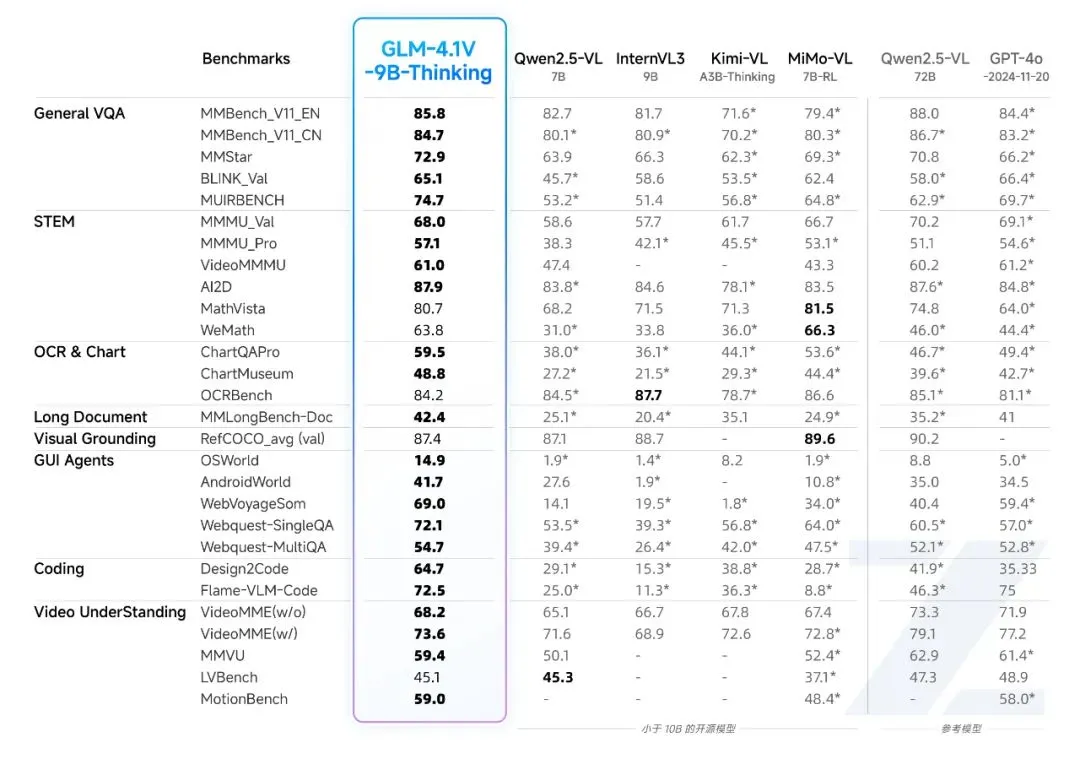

ZhipuAI (Zhipu Qingyan) interface to API: glm-free-api

Metaso AI (metaso) interface to API: metaso-free-api

ByteDance (Doubao) interface to API: doubao-free-api

ByteDance (Jimeng AI) interface to API: jimeng-free-api

iFlytek Spark interface to API: spark-free-api

MiniMax (Hailuo AI) interface to API: hailuo-free-api

Emohaa interface to API: emohaa-free-api

Login-free program with chat interface

https://github.com/Mylinde/FreeGPT

Worker code for Cloudflare; bind your own domain name to use it:

    addEventListener("fetch", event => {
      event.respondWith(handleRequest(event.request));
    });

    async function handleRequest(request) {
      // Ensure that the request is a POST request and that the path is correct
      if (request.method === "POST" && new URL(request.url).pathname === "/v1/chat/completions") {
        const url = 'https://multillm.ai-pro.org/api/openai-completion'; // target API address

        // Copy the incoming headers; add or modify headers as needed
        const headers = new Headers(request.headers);
        headers.set('Content-Type', 'application/json');

        // Get the body of the request and parse the JSON
        const requestBody = await request.json();
        const stream = requestBody.stream; // get the stream argument

        // Construct a new request
        const newRequest = new Request(url, {
          method: 'POST',
          headers: headers,
          body: JSON.stringify(requestBody) // re-serialized body
        });

        try {
          // Send a request to the target API
          const response = await fetch(newRequest);

          // Determine the response type based on the stream parameter
          if (stream) {
            // Streaming response: pipe the upstream body through to the client
            const { readable, writable } = new TransformStream();
            response.body.pipeTo(writable);
            return new Response(readable, {
              headers: response.headers
            });
          } else {
            // Normal response: return the upstream body as-is
            return new Response(response.body, {
              status: response.status,
              headers: response.headers
            });
          }
        } catch (e) {
          // If the request fails, return an error message
          return new Response(JSON.stringify({ error: 'Unable to reach the backend API' }), { status: 502 });
        }
      } else {
        // Return an error if the request method is not POST or the path is incorrect
        return new Response('Not found', { status: 404 });
      }
    }
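The Worker above copies every incoming header to the target API, and its comment leaves room to "add or modify headers as needed". One possible variation, shown here only as a sketch, is to forward a small allow-list instead. The `FORWARDED_HEADERS` list and the `buildUpstreamHeaders` name are assumptions made for illustration; nothing in the original says which headers the target API actually requires.

```javascript
// Hypothetical allow-list: adjust to whatever the target API really needs.
const FORWARDED_HEADERS = ["authorization", "accept"];

// Build the headers for the upstream request from the incoming ones,
// copying only the allow-listed names and forcing a JSON content type.
function buildUpstreamHeaders(incoming) {
  const outgoing = new Headers();
  for (const name of FORWARDED_HEADERS) {
    const value = incoming.get(name); // Headers lookups are case-insensitive
    if (value !== null) {
      outgoing.set(name, value);
    }
  }
  outgoing.set("Content-Type", "application/json");
  return outgoing;
}
```

In the Worker, `const headers = buildUpstreamHeaders(request.headers);` would take the place of the `new Headers(request.headers)` line.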

POST example:

    curl --location 'https://ai-pro-free.aivvm.com/v1/chat/completions' \
      --header 'Content-Type: application/json' \
      --data '{
        "model": "gpt-4-turbo",
        "messages": [
          {
            "role": "user", "content": "Why did Lu Xun hit Zhou Shuren?"
          }
        ],
        "stream": true
      }'
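The same request can be sent from JavaScript. The sketch below assumes a runtime with global `fetch` and `URL` (a browser, a Worker, or Node 18+), and assumes the non-streaming reply has the usual `choices[0].message.content` shape. The helper names `buildChatRequest` and `ask` are hypothetical.

```javascript
// Hypothetical helper: builds the URL and fetch() options for a chat request.
function buildChatRequest(baseUrl, prompt, { model = "gpt-4-turbo", stream = false } = {}) {
  const url = new URL("/v1/chat/completions", baseUrl).toString();
  const init = {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({
      model,
      messages: [{ role: "user", content: prompt }],
      stream,
    }),
  };
  return { url, init };
}

// Sends a non-streaming request and returns the assistant's reply text.
// Assumes the response body has the shape { choices: [{ message: { content } }] }.
async function ask(baseUrl, prompt) {
  const { url, init } = buildChatRequest(baseUrl, prompt);
  const response = await fetch(url, init);
  if (!response.ok) {
    throw new Error(`Request failed with status ${response.status}`);
  }
  const json = await response.json();
  return json.choices[0].message.content;
}
```

For example, `ask("https://ai-pro-free.aivvm.com", "Hello")` would post to the same endpoint as the curl command, with `stream` set to `false`.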

A version with pseudo-streaming added (output will be slower, because the full reply is fetched before anything is sent):

    addEventListener("fetch", event => {
      event.respondWith(handleRequest(event.request));
    });

    async function handleRequest(request) {
      // Answer CORS preflight requests
      if (request.method === "OPTIONS") {
        return new Response(null, {
          status: 204,
          headers: {
            'Access-Control-Allow-Origin': '*',
            'Access-Control-Allow-Headers': '*'
          }
        });
      }

      // Ensure that the request is a POST request and that the path is correct
      if (request.method === "POST" && new URL(request.url).pathname === "/v1/chat/completions") {
        const url = 'https://multillm.ai-pro.org/api/openai-completion'; // target API address

        // Copy the incoming headers; add or modify headers as needed
        const headers = new Headers(request.headers);
        headers.set('Content-Type', 'application/json');

        // Get the body of the request and parse the JSON
        const requestBody = await request.json();
        const stream = requestBody.stream; // get the stream argument

        // Construct a new request
        const newRequest = new Request(url, {
          method: 'POST',
          headers: headers,
          body: JSON.stringify(requestBody) // re-serialized body
        });

        try {
          // Send a request to the target API
          const response = await fetch(newRequest);

          // Determine the response type based on the stream parameter
          if (stream) {
            const originalJson = await response.json(); // read the full data at once
            const encoder = new TextEncoder();

            // Create a readable stream that replays the reply as SSE events
            const readableStream = new ReadableStream({
              start(controller) {
                // Send start data
                const startData = createDataChunk(originalJson, "start");
                controller.enqueue(encoder.encode('data: ' + JSON.stringify(startData) + '\n\n'));

                // The whole reply is sent as one data chunk here; it could also
                // be split and sent in batches
                const content = originalJson.choices[0].message.content;
                const newData = createDataChunk(originalJson, "data", content);
                controller.enqueue(encoder.encode('data: ' + JSON.stringify(newData) + '\n\n'));

                // Send end data, then the [DONE] marker
                const endData = createDataChunk(originalJson, "end");
                controller.enqueue(encoder.encode('data: ' + JSON.stringify(endData) + '\n\n'));
                controller.enqueue(encoder.encode('data: [DONE]\n\n'));

                // Mark the end of the stream
                controller.close();
              }
            });

            return new Response(readableStream, {
              headers: {
                'Access-Control-Allow-Origin': '*',
                'Access-Control-Allow-Headers': '*',
                'Content-Type': 'text/event-stream',
                'Cache-Control': 'no-cache',
                'Connection': 'keep-alive'
              }
            });
          } else {
            // Normal response: return the upstream body as-is
            return new Response(response.body, {
              status: response.status,
              headers: response.headers
            });
          }
        } catch (e) {
          // If the request fails, return an error message
          return new Response(JSON.stringify({ error: 'Unable to reach the backend API' }), { status: 502 });
        }
      } else {
        // Return an error if the request method is not POST or the path is incorrect
        return new Response('Not found', { status: 404 });
      }
    }

    // Create different data chunks based on type
    function createDataChunk(json, type, content = "") {
      switch (type) {
        case "start":
          return {
            id: json.id,
            object: "chat.completion.chunk",
            created: json.created,
            model: json.model,
            choices: [{ delta: {}, index: 0, finish_reason: null }]
          };
        case "data":
          return {
            id: json.id,
            object: "chat.completion.chunk",
            created: json.created,
            model: json.model,
            choices: [{ delta: { content }, index: 0, finish_reason: null }]
          };
        case "end":
          return {
            id: json.id,
            object: "chat.completion.chunk",
            created: json.created,
            model: json.model,
            choices: [{ delta: {}, index: 0, finish_reason: 'stop' }]
          };
        default:
          return {};
      }
    }
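The comments in the pseudo-streaming Worker mention sending data in batches, but the code sends the whole reply as a single chunk. A minimal sketch of the batching step follows, assuming a fixed piece size in characters. The helper names `splitIntoPieces`, `toSseFrame`, and `toPseudoStream` and the default size of 20 are illustrative choices, not part of the original code.

```javascript
// Hypothetical helper: split text into pieces of at most `size` characters.
function splitIntoPieces(text, size = 20) {
  const pieces = [];
  const chars = Array.from(text); // iterate by code point so surrogate pairs stay whole
  for (let i = 0; i < chars.length; i += size) {
    pieces.push(chars.slice(i, i + size).join(""));
  }
  return pieces;
}

// Build one SSE frame carrying a chat.completion.chunk object.
function toSseFrame(json, delta, finishReason = null) {
  const chunk = {
    id: json.id,
    object: "chat.completion.chunk",
    created: json.created,
    model: json.model,
    choices: [{ delta, index: 0, finish_reason: finishReason }],
  };
  return "data: " + JSON.stringify(chunk) + "\n\n";
}

// Turn a complete (non-streaming) completion into a list of SSE frames:
// one frame per piece of content, then a stop frame, then the [DONE] marker.
function toPseudoStream(json, size = 20) {
  const content = json.choices[0].message.content;
  const frames = splitIntoPieces(content, size).map(
    piece => toSseFrame(json, { content: piece })
  );
  frames.push(toSseFrame(json, {}, "stop"));
  frames.push("data: [DONE]\n\n");
  return frames;
}
```

Inside `start(controller)`, each frame could then be sent with `controller.enqueue(new TextEncoder().encode(frame))`. Smaller pieces give a smoother typing effect at the cost of more events.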