General Introduction

LiteLLM is a Python SDK and proxy server developed by BerriAI that unifies the invocation and management of multiple Large Language Model (LLM) APIs. It supports more than 100 LLM APIs, including OpenAI, HuggingFace, and Azure, and exposes all of them in the OpenAI format, so developers can switch between AI services and manage them without rewriting client code. It also provides a stable Docker image and a detailed migration guide.

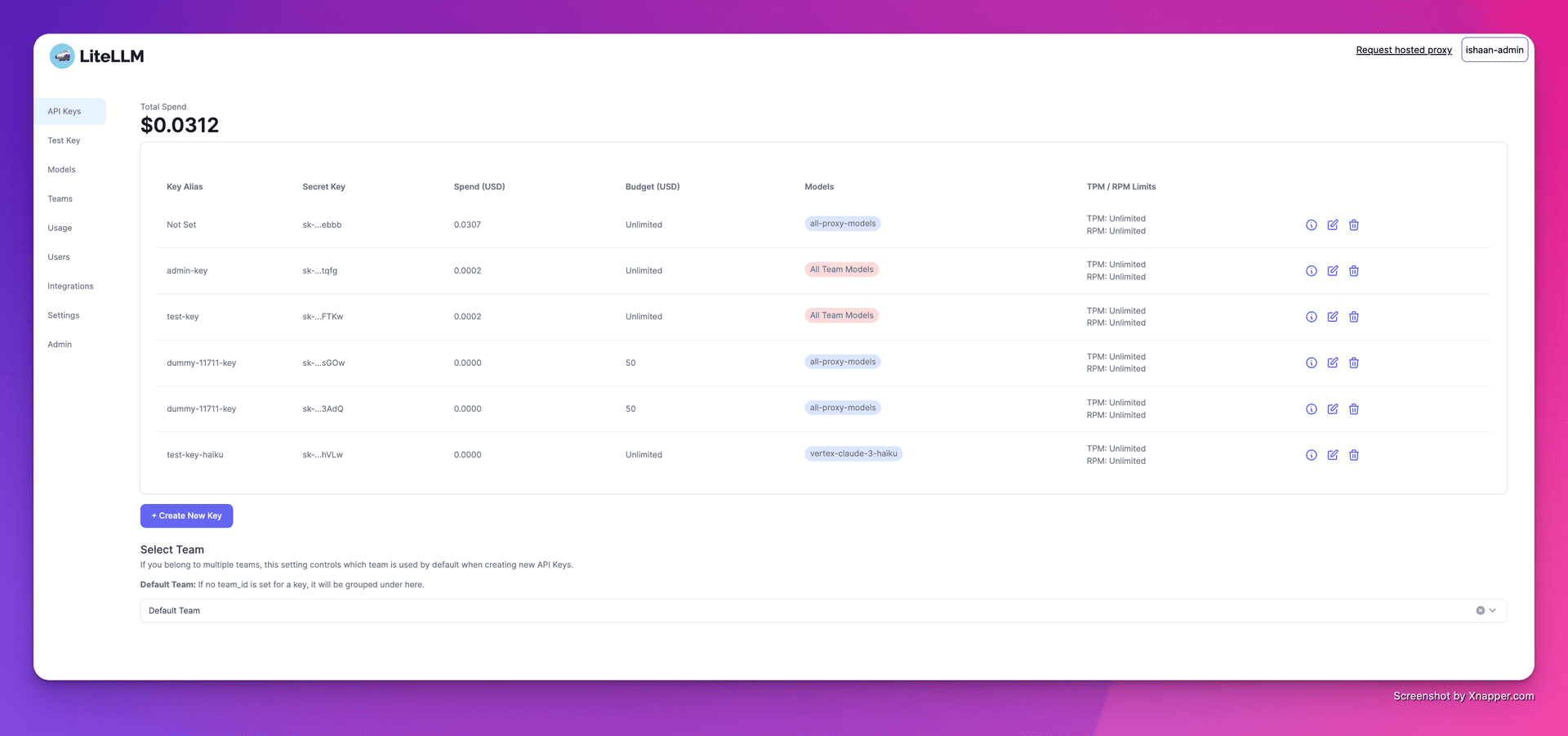

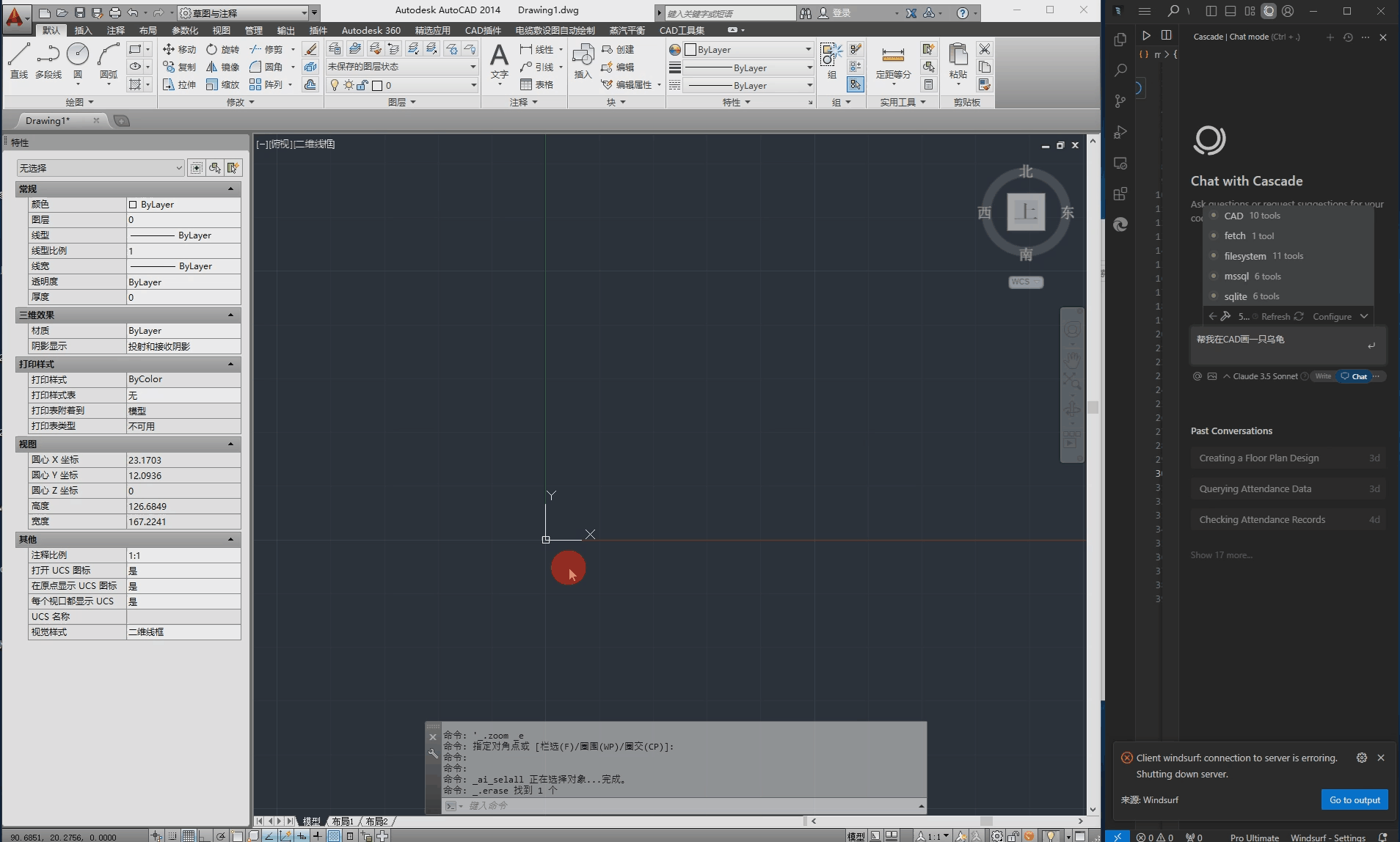

1. Creating keys

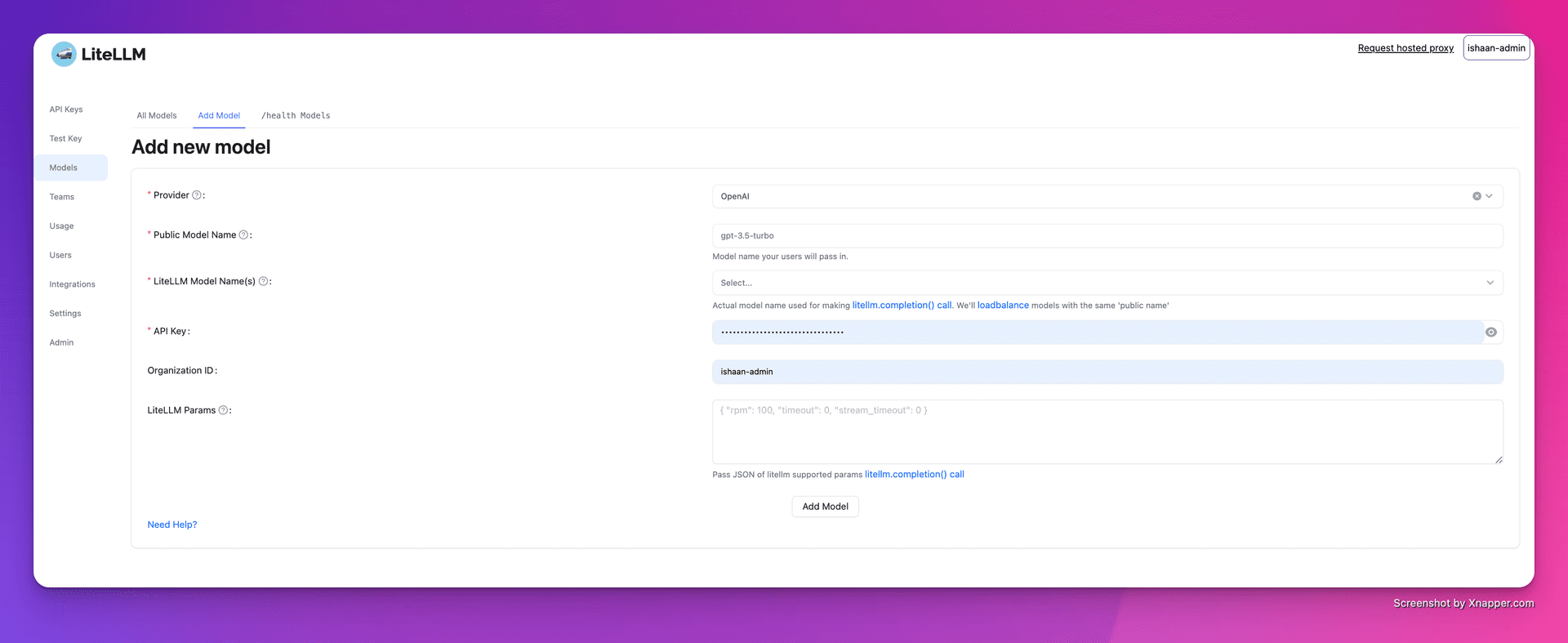

2. Adding models

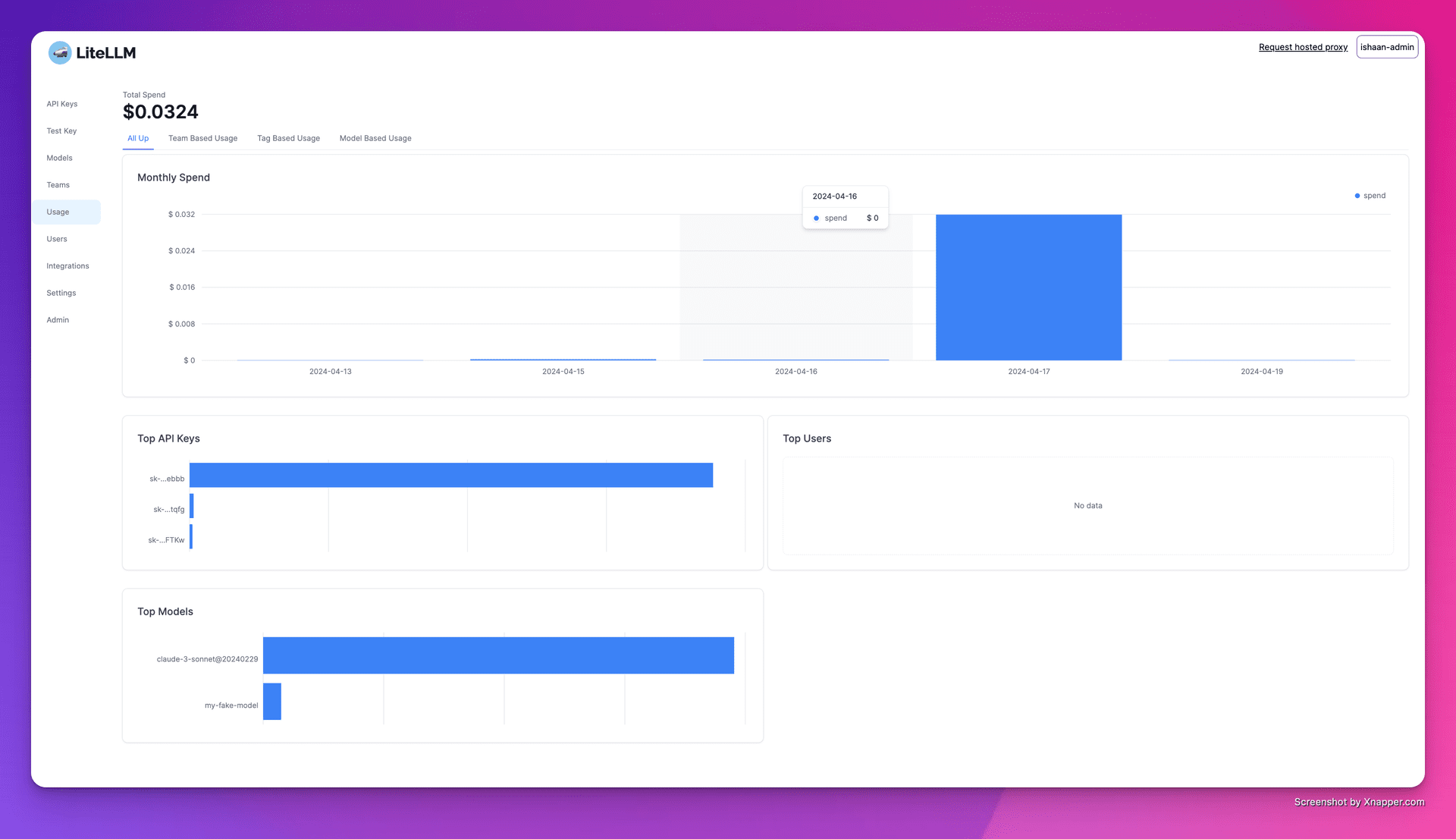

3. Tracking expenditures

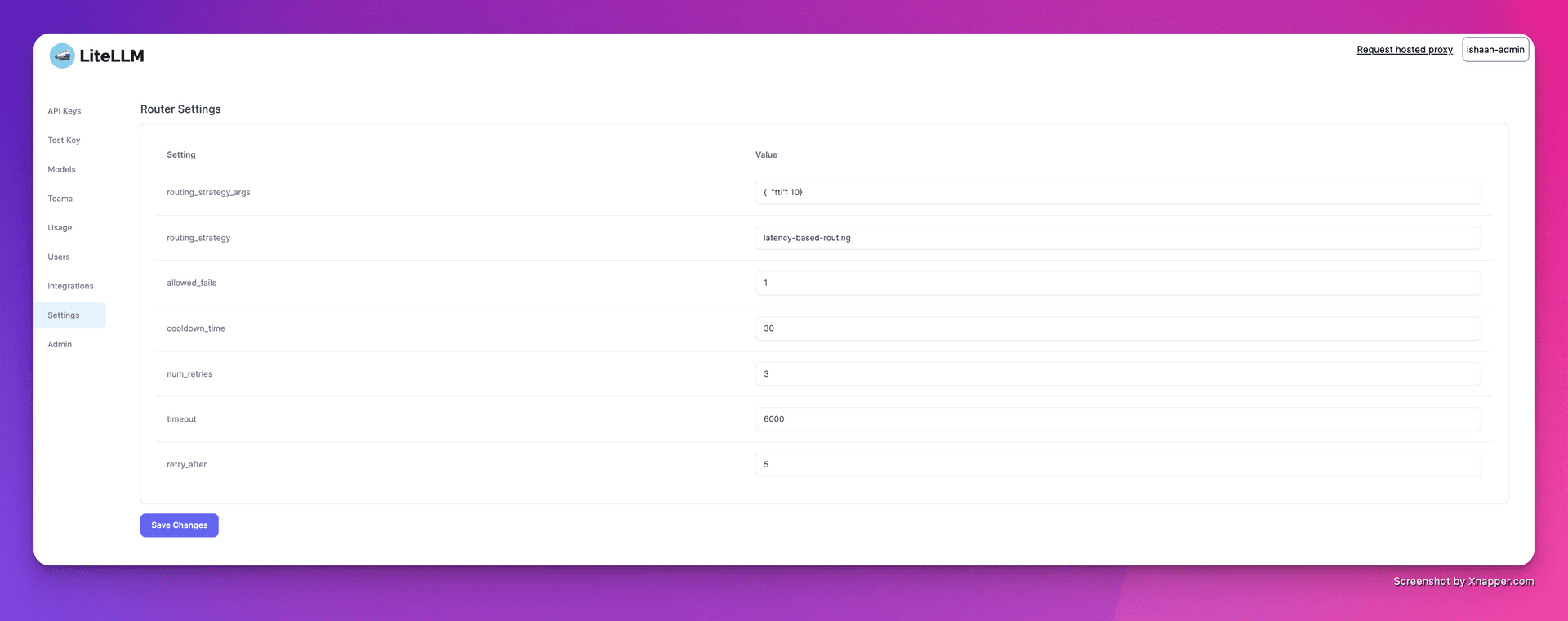

4. Configure load balancing

Function List

- Multi-platform support: Works with multiple LLM providers such as OpenAI, Cohere, and Anthropic, covering more than 100 LLM APIs.

- Stable releases: Provides stable Docker images that have been load-tested for 12 hours. Supports budgets and request rate limits.

- Proxy server: Calls multiple LLM APIs through a single proxy server and converts each provider's API format to the OpenAI format.

- Python SDK: Provides a Python SDK to simplify development.

- Streaming responses: Supports streaming model responses back to the caller, which improves the user experience.

- Callbacks: Supports multiple callbacks for logging and monitoring.
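To make the "unified into OpenAI format" point concrete, here is a minimal sketch of what it means for the caller: the request body keeps the same shape, and only the `model` string changes between providers. The helper `build_chat_request` and the model identifiers are illustrative assumptions, not part of LiteLLM.

```python
import json


def build_chat_request(model: str, prompt: str) -> dict:
    """Build an OpenAI-style chat completion body (hypothetical helper)."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }


# Example model identifiers, assumed for illustration only.
MODELS = ["gpt-3.5-turbo", "anthropic/claude-3-sonnet-20240229"]

# One body per model; everything except "model" is identical.
requests_by_model = {m: build_chat_request(m, "Hello, how are you?") for m in MODELS}

for body in requests_by_model.values():
    print(json.dumps(body))
```

Because the shape never changes, switching providers comes down to changing one string.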

Usage Guide

Installation and Setup

- Installing Docker: Ensure that Docker is installed on your system.

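- Pulling the image: Use the `docker pull` command to pull a stable LiteLLM image.

- Starting the proxy server: From a local checkout of the LiteLLM repository, write the keys to `.env` and start the services:

```shell
cd litellm
# Master key for the proxy (example value; change it for real deployments)
echo 'LITELLM_MASTER_KEY="sk-1234"' > .env
# Append the salt key; using `>` here would overwrite the master key
echo 'LITELLM_SALT_KEY="sk-1234"' >> .env
source .env
# Start the proxy with Docker Compose
docker-compose up
```

- Configuring the client: Set the proxy server address and API key in the code.

```python
import openai

# Point the OpenAI client at the LiteLLM proxy instead of the OpenAI API
client = openai.OpenAI(api_key="your_api_key", base_url="http://0.0.0.0:4000")

response = client.chat.completions.create(
    model="gpt-3.5-turbo",
    messages=[{"role": "user", "content": "Hello, how are you?"}],
)
print(response)
```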
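Since the proxy speaks the OpenAI format, the client call above is roughly equivalent to a plain HTTP POST. The sketch below builds such a request with the standard library only, without sending it. It assumes the proxy exposes the OpenAI-style `/chat/completions` route and accepts the key as a Bearer token; `build_proxy_request` is a hypothetical helper, and the address and key are the placeholder values from the client example.

```python
import json
import urllib.request

PROXY_URL = "http://0.0.0.0:4000"  # proxy address from the client example
API_KEY = "your_api_key"           # placeholder key


def build_proxy_request(model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-format chat request for the proxy."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        url=f"{PROXY_URL}/chat/completions",  # assumed OpenAI-compatible route
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {API_KEY}",
        },
        method="POST",
    )


req = build_proxy_request("gpt-3.5-turbo", "Hello, how are you?")
print(req.get_method(), req.full_url)

# Sending requires a running proxy:
# with urllib.request.urlopen(req) as resp:
#     print(json.load(resp))
```

Building the request separately from sending it makes it easy to inspect the exact payload before pointing it at a live proxy.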

Using the Features

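- Calling models: Use `model=<provider_name>/<model_name>` to call models from different providers.

- Streaming responses: Set `stream=True` to get a streaming response.

```python
from litellm import acompletion

messages = [{"role": "user", "content": "Hello, how are you?"}]

# Run inside an async function: acompletion is a coroutine
response = await acompletion(model="gpt-3.5-turbo", messages=messages, stream=True)
async for part in response:
    # Each chunk carries an incremental delta; content may be None
    print(part.choices[0].delta.content or "")
```

- Setting callbacks: Configure callbacks to log inputs and outputs.

```python
import litellm

# Send successful call logs to these integrations
litellm.success_callback = ["lunary", "langfuse", "athina", "helicone"]
```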
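With `stream=True`, the response arrives as a sequence of chunks, each holding a small piece of text in `choices[0].delta.content`. The sketch below shows how those pieces can be joined into the full reply. To stay runnable without a proxy or API key, it uses stand-in chunk objects; `fake_stream` and `collect_text` are hypothetical helpers, and the chunk shape is assumed to follow the OpenAI streaming format.

```python
from types import SimpleNamespace


def fake_stream(pieces):
    """Yield stand-in chunks shaped like OpenAI streaming chunks (test double)."""
    for piece in pieces:
        delta = SimpleNamespace(content=piece)
        yield SimpleNamespace(choices=[SimpleNamespace(delta=delta)])


def collect_text(stream) -> str:
    """Join the incremental deltas of a stream into one string."""
    parts = []
    for chunk in stream:
        # Some chunks carry no text (content is None), so fall back to ""
        parts.append(chunk.choices[0].delta.content or "")
    return "".join(parts)


full_text = collect_text(fake_stream(["Hel", "lo", None, "!"]))
print(full_text)
```

The `or ""` guard matters because a chunk's `content` can be `None`, and joining `None` into a string would raise a `TypeError`.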