General Introduction

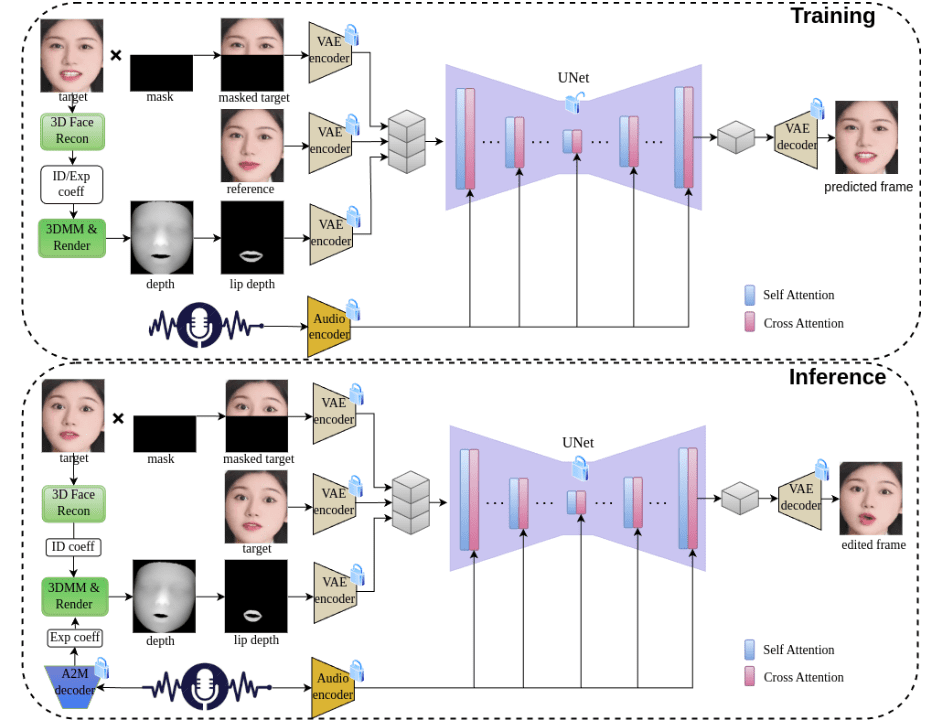

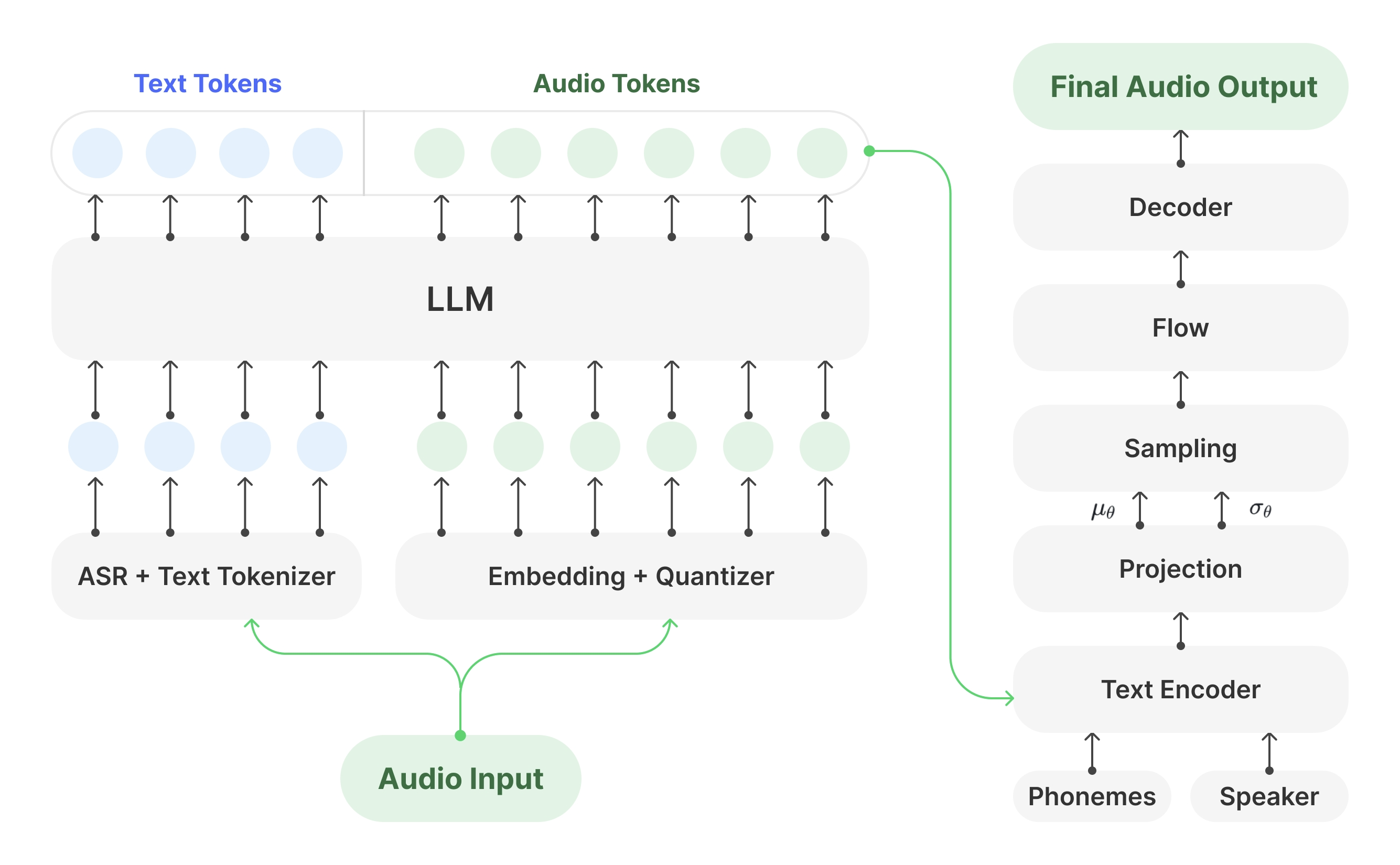

JoyGen is a two-stage framework for generating talking-face videos, focused on audio-driven facial expression generation. Developed by a team from Jingdong Technology, it combines 3D face reconstruction with audio feature extraction to capture the speaker's identity features and expression coefficients, enabling accurate lip synchronization and high-quality visual synthesis.

The framework works in two stages: first, audio-driven lip-motion generation; second, visual appearance synthesis. By combining audio features with facial depth maps, it provides comprehensive supervision for accurate lip synchronization. JoyGen accepts both Chinese and English driving audio and ships with a complete training and inference pipeline, making it a practical open-source tool for talking-face generation.
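The split between identity and expression described above is usually expressed with a linear 3D morphable model (3DMM). The `--bfm_folder` flag in the commands later in this guide suggests JoyGen builds on the Basel Face Model (BFM), and the `face_recon_feat0.2_augment` checkpoint name matches Deep3DFaceRecon_pytorch, which uses 80 identity and 64 expression coefficients. The toy sketch below shows the general 3DMM formulation, not JoyGen's code: random arrays stand in for the real BFM data, and only the expression coefficients are swapped while the identity coefficients stay fixed.

```python
import numpy as np

# Toy sizes for illustration. Real BFM meshes have tens of thousands of vertices;
# Deep3DFaceRecon-style models use 80 identity and 64 expression coefficients.
N_VERTS, N_ID, N_EXP = 100, 80, 64

rng = np.random.default_rng(0)
# Random stand-ins for the BFM mean shape and bases (assumption: not real data).
mean_shape = rng.normal(size=3 * N_VERTS)          # flattened (x, y, z) per vertex
id_basis = rng.normal(size=(3 * N_VERTS, N_ID))    # identity basis
exp_basis = rng.normal(size=(3 * N_VERTS, N_EXP))  # expression basis


def face_shape(id_coeff: np.ndarray, exp_coeff: np.ndarray) -> np.ndarray:
    """Linear 3DMM: S = S_mean + B_id @ alpha + B_exp @ beta, reshaped to (N_VERTS, 3)."""
    flat = mean_shape + id_basis @ id_coeff + exp_basis @ exp_coeff
    return flat.reshape(N_VERTS, 3)


# Identity is estimated once from the reference video; expression would come
# from the audio-driven model (here both are random placeholders).
alpha_video = rng.normal(size=N_ID)
beta_video = rng.normal(size=N_EXP)
beta_audio = rng.normal(size=N_EXP)

original = face_shape(alpha_video, beta_video)
edited = face_shape(alpha_video, beta_audio)  # same identity, new expression

# Because the model is linear, the geometry change depends only on the expression delta.
delta = (edited - original).reshape(-1)
print("expression-only change:", np.allclose(delta, exp_basis @ (beta_audio - beta_video)))
```

Keeping `alpha_video` fixed is what lets a system of this kind change lip shapes without altering who the speaker looks like.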

Function List

- Audio-driven 3D facial expression generation and editing

- Precise audio-to-lip synchronization

- Supports Chinese and English audio input

- 3D depth-aware visual synthesis

- Preservation of facial identity

- High-quality video generation and editing

- Complete training and inference pipelines

- Pre-trained models for quick deployment

- Training on custom datasets

- Detailed data preprocessing tools

Usage Guide

1. Environment setup

1.1 Basic requirements

- Supported GPUs: V100, A800

- Python version: 3.8.19

- System dependencies: ffmpeg
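Before installing, a quick check of the software requirements above can save time. The sketch below is a hypothetical helper (`check_environment` is not part of JoyGen); it only checks the Python minor version and whether `ffmpeg` is on `PATH`, and does not verify the exact patch release or the GPU model.

```python
import shutil
import sys
from typing import Callable, List, Optional, Sequence


def check_environment(
    version: Sequence[int] = tuple(sys.version_info[:3]),
    which: Callable[[str], Optional[str]] = shutil.which,
) -> List[str]:
    """Return a list of problems found; an empty list means the basic requirements look met.

    Only the Python minor version (3.8) and the presence of ffmpeg on PATH are checked;
    the exact patch release (3.8.19) and the GPU model are not.
    """
    problems = []
    if tuple(version[:2]) != (3, 8):
        found = ".".join(str(part) for part in version)
        problems.append(f"Python 3.8 expected, found {found}")
    if which("ffmpeg") is None:
        problems.append("ffmpeg not found on PATH")
    return problems


if __name__ == "__main__":
    issues = check_environment()
    for issue in issues:
        print("WARNING:", issue)
    if not issues:
        print("Python version and ffmpeg look fine.")
```

The `version` and `which` parameters exist only so the check can be exercised without changing the real interpreter or `PATH`.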

1.2 Installation steps

- Create and activate the conda environment, then install the Python dependencies:

```bash
conda create -n joygen python=3.8.19 ffmpeg
conda activate joygen
pip install -r requirements.txt
```

- Install the nvdiffrast library (NVIDIA's differentiable rasterization library) from source:

```bash
git clone https://github.com/NVlabs/nvdiffrast
cd nvdiffrast
pip install .
```

- Download the pre-trained models:

Download the pre-trained models from the provided download link and place them in the `./pretrained_models/` directory, following the specified directory structure.
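After installation, the hypothetical helper below checks that the required Python packages can be found and that the model folders sit where the inference commands expect them. The folder list is inferred from the `--a2m_ckpt`, `--hubert_path`, `--bfm_folder`, `--checkpoints_dir`/`--name`, `--unet_model_path` and `--vae_model_path` flags used in this guide, not from an official manifest, and it assumes each entry is a directory.

```python
import importlib.util
from pathlib import Path
from typing import Iterable, List

# Inferred from the command-line flags in this guide (assumption: not an official list).
EXPECTED_MODEL_DIRS = [
    "audio2motion/240210_real3dportrait_orig/audio2secc_vae",  # --a2m_ckpt
    "audio2motion/hubert",                                     # --hubert_path
    "BFM",                                                     # --bfm_folder
    "face_recon_feat0.2_augment",                              # --checkpoints_dir + --name
    "joygen",                                                  # --unet_model_path
    "sd-vae-ft-mse",                                           # --vae_model_path
]


def missing_modules(names: Iterable[str]) -> List[str]:
    """Return the top-level module names that cannot be found by the import system."""
    return [name for name in names if importlib.util.find_spec(name) is None]


def missing_model_dirs(root: str = "./pretrained_models") -> List[str]:
    """Return the expected sub-directories of `root` that do not exist."""
    base = Path(root)
    return [rel for rel in EXPECTED_MODEL_DIRS if not (base / rel).is_dir()]


if __name__ == "__main__":
    for mod in missing_modules(["torch", "nvdiffrast"]):
        print(f"missing Python package: {mod}")
    for rel in missing_model_dirs():
        print(f"missing model directory: pretrained_models/{rel}")
```

Run it from the repository root so the relative `./pretrained_models` path resolves the same way it does for the inference scripts.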

2. Usage workflow

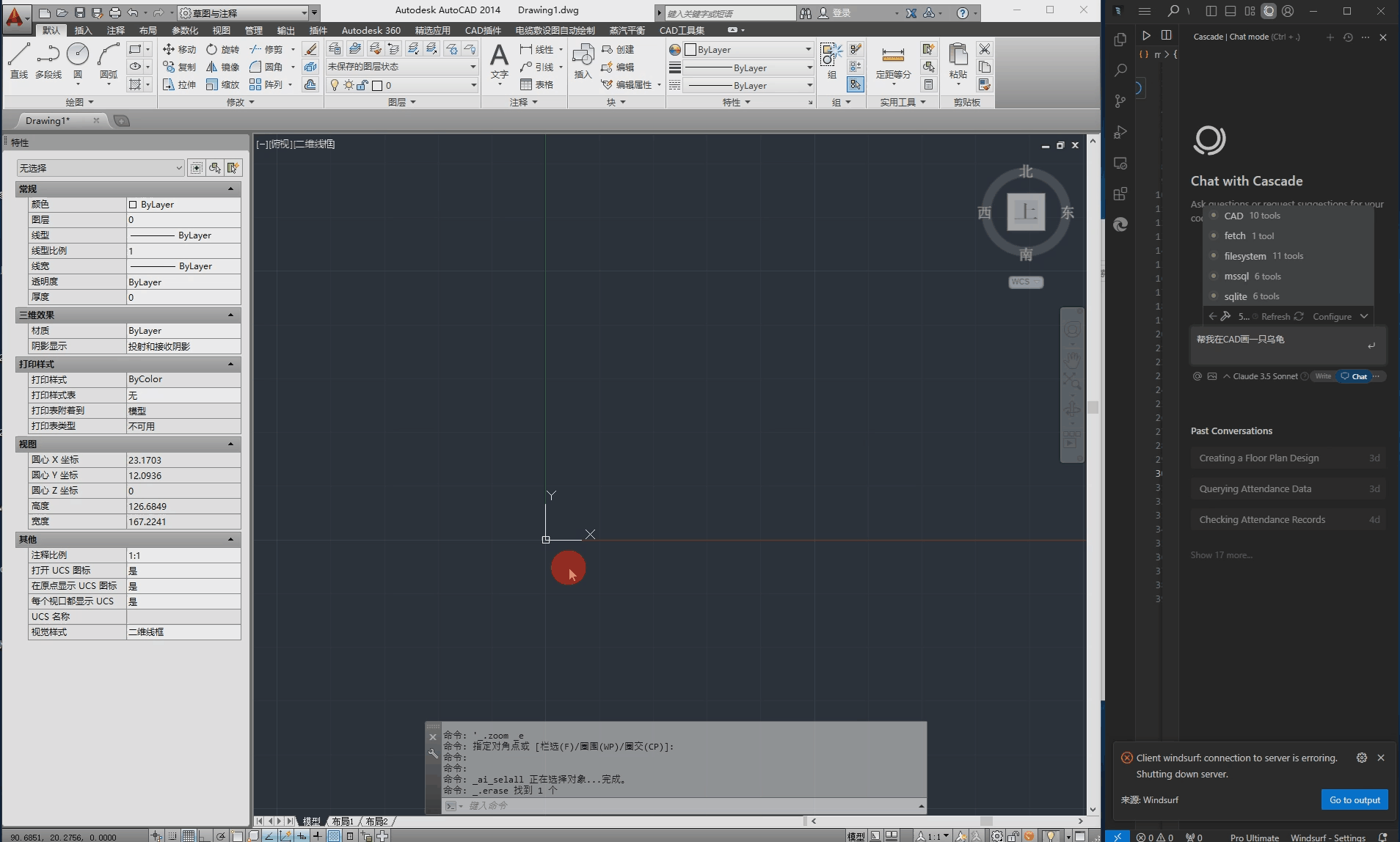

2.1 Inference

Run the full inference pipeline with a single script:

```bash
bash scripts/inference_pipeline.sh <audio_file> <video_file> <result_dir>
```
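Because the pipeline script takes exactly three positional arguments (audio, video, result directory), batching is easy to script around it. A minimal sketch, assuming you want to drive one reference video with several audio clips and keep each result in its own folder named after the audio file; `pipeline_commands` and `run_all` are hypothetical helper names.

```python
import subprocess
from pathlib import Path
from typing import List, Sequence


def pipeline_commands(audio_files: Sequence[str], video: str, out_root: str) -> List[List[str]]:
    """Build one inference_pipeline.sh call per audio file.

    Arguments follow the documented order (audio, video, result directory); each
    result lands in out_root/<audio file stem>, a naming choice made for this sketch.
    """
    return [
        ["bash", "scripts/inference_pipeline.sh", audio, video, str(Path(out_root) / Path(audio).stem)]
        for audio in audio_files
    ]


def run_all(commands: List[List[str]]) -> None:
    """Run the commands in order from the repository root; check=True stops at the first failure."""
    for cmd in commands:
        subprocess.run(cmd, check=True)
```

For example, after `import glob`, calling `run_all(pipeline_commands(sorted(glob.glob("demo/*.mp3")), "demo/example_5s.mp4", "results/batch"))` would process every MP3 in `demo/` against the same video.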

Alternatively, run the inference steps one at a time:

- Predict facial expression coefficients from the audio (saved as ./results/a2m/xinwen_5s.npy):

```bash
python inference_audio2motion.py \
  --a2m_ckpt ./pretrained_models/audio2motion/240210_real3dportrait_orig/audio2secc_vae \
  --hubert_path ./pretrained_models/audio2motion/hubert \
  --drv_aud ./demo/xinwen_5s.mp3 \
  --seed 0 \
  --result_dir ./results/a2m \
  --exp_file xinwen_5s.npy
```

- Render depth maps frame by frame for the reference video, using the new expression coefficients:

```bash
python -u inference_edit_expression.py \
  --name face_recon_feat0.2_augment \
  --epoch=20 \
  --use_opengl False \
  --checkpoints_dir ./pretrained_models \
  --bfm_folder ./pretrained_models/BFM \
  --infer_video_path ./demo/example_5s.mp4 \
  --infer_exp_coeff_path ./results/a2m/xinwen_5s.npy \
  --infer_result_dir ./results/edit_expression
```

- Generate the talking-face video from the audio features and the facial depth maps:

```bash
CUDA_VISIBLE_DEVICES=0 python -u inference_joygen.py \
  --unet_model_path pretrained_models/joygen \
  --vae_model_path pretrained_models/sd-vae-ft-mse \
  --intermediate_dir ./results/edit_expression \
  --audio_path demo/xinwen_5s.mp3 \
  --video_path demo/example_5s.mp4 \
  --enable_pose_driven \
  --result_dir results/talk \
  --img_size 256 \
  --gpu_id 0
```
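The three steps are chained through their file arguments: step 1 writes `<audio stem>.npy` into `./results/a2m`, step 2 reads that file and writes into `./results/edit_expression`, and step 3 reads that folder as `--intermediate_dir`. The sketch below rebuilds the same commands for arbitrary inputs. It is a hypothetical wrapper, not part of the repository; naming the coefficient file after the audio stem simply mirrors the example, and it assumes `--gpu_id` is a CUDA device index.

```python
import os
import subprocess
from pathlib import Path
from typing import List

PRETRAINED = "./pretrained_models"


def stepwise_commands(audio: str, video: str, work_dir: str = "./results") -> List[List[str]]:
    """Rebuild the three inference steps for arbitrary inputs (hypothetical wrapper)."""
    work = Path(work_dir)
    a2m_dir = work / "a2m"
    exp_file = Path(audio).stem + ".npy"  # mirrors xinwen_5s.mp3 -> xinwen_5s.npy
    edit_dir = work / "edit_expression"
    return [
        # Step 1: audio -> expression coefficients (.npy)
        ["python", "inference_audio2motion.py",
         "--a2m_ckpt", f"{PRETRAINED}/audio2motion/240210_real3dportrait_orig/audio2secc_vae",
         "--hubert_path", f"{PRETRAINED}/audio2motion/hubert",
         "--drv_aud", audio, "--seed", "0",
         "--result_dir", str(a2m_dir), "--exp_file", exp_file],
        # Step 2: reference video + new coefficients -> per-frame depth maps
        ["python", "-u", "inference_edit_expression.py",
         "--name", "face_recon_feat0.2_augment", "--epoch=20", "--use_opengl", "False",
         "--checkpoints_dir", PRETRAINED, "--bfm_folder", f"{PRETRAINED}/BFM",
         "--infer_video_path", video,
         "--infer_exp_coeff_path", str(a2m_dir / exp_file),
         "--infer_result_dir", str(edit_dir)],
        # Step 3: audio + depth maps -> talking-face video.
        # --gpu_id stays 0 because CUDA_VISIBLE_DEVICES renumbers the selected GPU as 0.
        ["python", "-u", "inference_joygen.py",
         "--unet_model_path", f"{PRETRAINED}/joygen",
         "--vae_model_path", f"{PRETRAINED}/sd-vae-ft-mse",
         "--intermediate_dir", str(edit_dir),
         "--audio_path", audio, "--video_path", video,
         "--enable_pose_driven", "--result_dir", str(work / "talk"),
         "--img_size", "256", "--gpu_id", "0"],
    ]


def run_stepwise(audio: str, video: str, work_dir: str = "./results", gpu: int = 0) -> None:
    """Run all three steps on one physical GPU, stopping at the first failure."""
    env = dict(os.environ, CUDA_VISIBLE_DEVICES=str(gpu))
    for cmd in stepwise_commands(audio, video, work_dir):
        subprocess.run(cmd, check=True, env=env)
```

Since `CUDA_VISIBLE_DEVICES` exposes only the chosen card and numbers it 0 inside the process, the wrapper picks the physical GPU through the environment variable and keeps `--gpu_id 0`; passing the physical index to both would fail for any GPU other than 0.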

2.2 Training

- Preprocess the data:

```bash
python -u preprocess_dataset.py \
  --checkpoints_dir ./pretrained_models \
  --name face_recon_feat0.2_augment \
  --epoch=20 \
  --use_opengl False \
  --bfm_folder ./pretrained_models/BFM \
  --video_dir ./demo \
  --result_dir ./results/preprocessed_dataset
```

- Check the preprocessed data and generate the training list, passing the directory that holds the preprocessed data as data_dir:

```bash
python -u preprocess_dataset_extra.py data_dir
```

- Start training:

Edit config.yaml as needed, then launch training with Accelerate:

```bash
accelerate launch --main_process_port 29501 --config_file config/accelerate_config.yaml train_joygen.py
```
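A fixed `--main_process_port 29501` fails if another job on the same machine already holds that port. One common workaround, sketched here with hypothetical helper names, is to ask the operating system for a free port and pass it to the same `accelerate launch` command. There is a small race: another process could claim the port between the check and the launch.

```python
import socket
import subprocess
from typing import List


def find_free_port() -> int:
    """Let the OS pick an unused TCP port by binding to port 0, then release it."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.bind(("", 0))
        return sock.getsockname()[1]


def accelerate_command(port: int, config_file: str = "config/accelerate_config.yaml") -> List[str]:
    """The training launch command from this guide, with the main process port as a parameter."""
    return [
        "accelerate", "launch",
        "--main_process_port", str(port),
        "--config_file", config_file,
        "train_joygen.py",
    ]


def launch_training() -> None:
    # Race window: another process could take the port before accelerate binds it.
    subprocess.run(accelerate_command(find_free_port()), check=True)
```

If 29501 is known to be free, `accelerate_command(29501)` reproduces the original command exactly.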