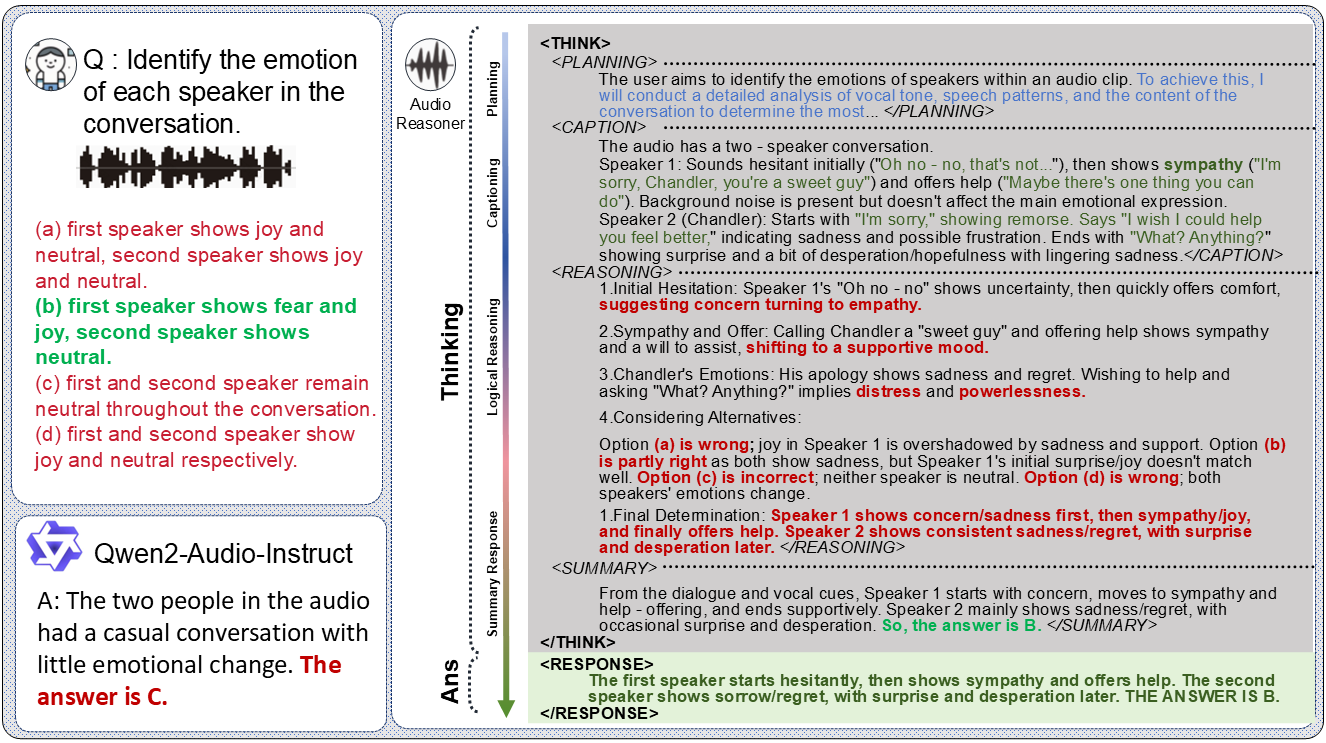

Gaze-LLE is a gaze target prediction tool built on large-scale learned encoders. Developed by Fiona Ryan, Ajay Bati, Sangmin Lee, Daniel Bolya, Judy Hoffman, and James M. Rehg, the project enables efficient gaze target prediction using pre-trained visual foundation models such as DINOv2. The architecture is simple: the pre-trained visual encoder is frozen and only a lightweight gaze decoder is trained. This uses 1-2 orders of magnitude fewer trainable parameters than previous work, and it does not require additional input modalities such as depth or pose.
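To make the frozen-encoder idea concrete, here is a toy PyTorch sketch. The class ToyGazeModel, its layer sizes, and the count_params helper are hypothetical: the stand-in encoder is not DINOv2 and the decoder is not Gaze-LLE's actual decoder. It only illustrates the general pattern of freezing a backbone, training a small head, and comparing trainable with total parameters.

```python
import torch
from torch import nn


class ToyGazeModel(nn.Module):
    """Hypothetical frozen-encoder + lightweight-decoder model (not Gaze-LLE's code)."""

    def __init__(self, feat_dim: int = 768, dec_dim: int = 64):
        super().__init__()
        # Stand-in for a large pre-trained backbone: a 14x14 patch embedding
        # followed by a stack of wide 1x1 convolutions.
        self.encoder = nn.Sequential(
            nn.Conv2d(3, feat_dim, kernel_size=14, stride=14),
            *[nn.Conv2d(feat_dim, feat_dim, kernel_size=1) for _ in range(4)],
        )
        # Freeze the encoder: its weights receive no gradient updates.
        self.encoder.requires_grad_(False)
        # Lightweight decoder: project features down, then predict a 1-channel heatmap.
        self.decoder = nn.Sequential(
            nn.Conv2d(feat_dim, dec_dim, kernel_size=1),
            nn.ReLU(),
            nn.Conv2d(dec_dim, 1, kernel_size=1),
        )

    def forward(self, images: torch.Tensor) -> torch.Tensor:
        # No autograd graph is needed for the frozen encoder.
        with torch.no_grad():
            feats = self.encoder(images)
        # Sigmoid keeps every heatmap value in [0, 1].
        return torch.sigmoid(self.decoder(feats))


def count_params(module: nn.Module, trainable_only: bool = False) -> int:
    """Count parameters, optionally only those that still require gradients."""
    return sum(p.numel() for p in module.parameters() if p.requires_grad or not trainable_only)


model = ToyGazeModel()
total = count_params(model)
trainable = count_params(model, trainable_only=True)
heatmap = model(torch.rand(1, 3, 224, 224))
print(f"trainable {trainable:,} of {total:,} parameters; heatmap shape {tuple(heatmap.shape)}")
```

When training a model built this way, pass only the parameters that still require gradients to the optimizer, for example torch.optim.AdamW(p for p in model.parameters() if p.requires_grad).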

Features

- Gaze target prediction: Efficiently predicts gaze targets using a pre-trained visual encoder.

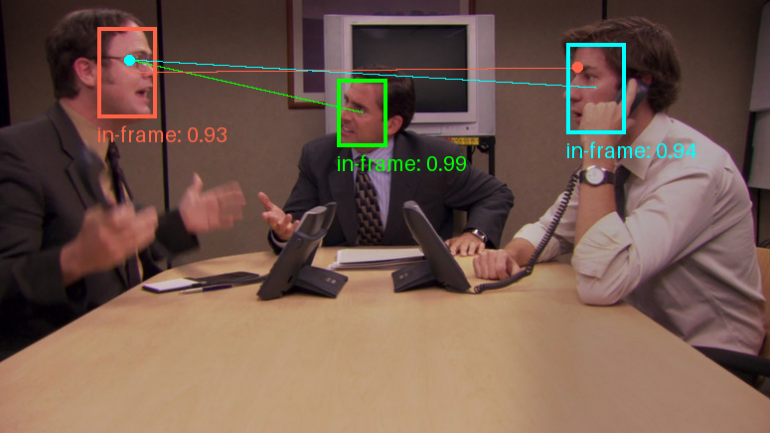

- Multi-person gaze prediction: Predicts gaze targets for multiple people in a single image.

- Pre-trained models: Provides several pre-trained models covering different backbone networks and training datasets.

- Lightweight architecture: Only a lightweight gaze decoder is trained on top of a frozen pre-trained visual encoder.

- No extra input modalities: Depth and pose inputs are not required.

Usage

Installation

- Clone the repository:

git clone https://github.com/fkryan/gazelle.git

cd gazelle

- Create a virtual environment and install dependencies:

conda env create -f environment.yml

conda activate gazelle

pip install -e .

- Optional: Install xformers to accelerate attention computation, if your system supports it (the index URL below serves CUDA 11.8 builds):

pip3 install -U xformers --index-url https://download.pytorch.org/whl/cu118
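After activating the gazelle environment, a short check like the one below shows whether the optional xformers package is importable and whether PyTorch can see a CUDA GPU. The helper names is_installed and environment_report are hypothetical; the check only tests importability and does not confirm that Gaze-LLE actually uses xformers at run time.

```python
import importlib.util


def is_installed(package: str) -> bool:
    """Return True if `package` can be imported in the current environment."""
    return importlib.util.find_spec(package) is not None


def environment_report() -> dict:
    """Summarize which relevant packages are present and whether CUDA is visible."""
    report = {"torch": is_installed("torch"), "xformers": is_installed("xformers"), "cuda": False}
    if report["torch"]:
        import torch  # imported lazily so the check also works without PyTorch

        report["cuda"] = torch.cuda.is_available()
    return report


print(environment_report())
```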

Using pre-trained models

Gaze-LLE provides a variety of pre-trained models that users can download and use as needed:

- gazelle_dinov2_vitb14: DINOv2 ViT-B backbone, trained on GazeFollow.

- gazelle_dinov2_vitl14: DINOv2 ViT-L backbone, trained on GazeFollow.

- gazelle_dinov2_vitb14_inout: DINOv2 ViT-B backbone, trained on GazeFollow and VideoAttentionTarget.

- gazelle_large_vitl14_inout: DINOv2 ViT-L backbone, trained on GazeFollow and VideoAttentionTarget.

Usage example

- Load a model and its image transform through PyTorch Hub:

import torch

model, transform = torch.hub.load('fkryan/gazelle', 'gazelle_dinov2_vitb14')

- See the demo notebook on Google Colab for an example of predicting the gaze target of every person in an image.
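A fuller inference sketch follows. It assumes the input and output format we understand the repository README to describe: the model takes a dict with an "images" batch tensor and a "bboxes" list holding one list of normalized (xmin, ymin, xmax, ymax) head boxes per image, and returns a dict whose "heatmap" entry holds one heatmap per person. Treat those keys as assumptions to check against the repository; normalize_bbox and predict_heatmaps are hypothetical helpers, and calling predict_heatmaps downloads the model weights.

```python
from typing import List, Sequence, Tuple

BBox = Tuple[float, float, float, float]


def normalize_bbox(bbox_px: Sequence[float], width: int, height: int) -> BBox:
    """Convert a pixel (xmin, ymin, xmax, ymax) head box to [0, 1] image coordinates."""
    xmin, ymin, xmax, ymax = bbox_px
    return (xmin / width, ymin / height, xmax / width, ymax / height)


def predict_heatmaps(image_path: str, head_boxes_px: List[Sequence[float]]):
    """Predict one gaze heatmap per head box in a single image (downloads weights)."""
    import torch
    from PIL import Image

    model, transform = torch.hub.load("fkryan/gazelle", "gazelle_dinov2_vitb14")
    model.eval()

    image = Image.open(image_path).convert("RGB")
    width, height = image.size  # PIL reports (width, height)
    inputs = {
        # Batch containing a single transformed image.
        "images": transform(image).unsqueeze(0),
        # Assumed format: one list of normalized head boxes per image.
        "bboxes": [[normalize_bbox(b, width, height) for b in head_boxes_px]],
    }
    with torch.no_grad():
        output = model(inputs)
    # Assumed output key: heatmaps for every person in the first (only) image.
    return output["heatmap"][0]
```

For example, predict_heatmaps("photo.jpg", [(120, 40, 180, 110), (300, 60, 350, 125)]) would request one heatmap for each of the two listed heads.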

Gaze prediction

Gaze-LLE supports multi-person gaze prediction: an image is encoded once, and the resulting features are reused to predict the gaze target of each person in it. For each person, the model outputs a spatial heatmap over the scene with values in [0, 1], where higher values indicate a more likely gaze target location.
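A heatmap can be reduced to a single predicted gaze point by taking its highest-scoring cell. Below is a minimal pure-Python sketch, assuming the heatmap is a row-major grid of scores (a PyTorch tensor can be converted with heatmap.tolist()). The helpers heatmap_argmax and to_pixels are hypothetical, and taking the argmax is one common choice rather than necessarily how Gaze-LLE's own evaluation code extracts points.

```python
from typing import Sequence, Tuple


def heatmap_argmax(heatmap: Sequence[Sequence[float]]) -> Tuple[float, float]:
    """Return the normalized (x, y) centre of the highest-scoring heatmap cell.

    `heatmap[row][col]` holds the score for that cell; x follows the column
    index and y the row index, both scaled to [0, 1].
    """
    rows, cols = len(heatmap), len(heatmap[0])
    # Scan every cell and keep the (row, col) with the largest score.
    best_row, best_col = max(
        ((r, c) for r in range(rows) for c in range(cols)),
        key=lambda rc: heatmap[rc[0]][rc[1]],
    )
    # Report the cell centre rather than its top-left corner.
    return (best_col + 0.5) / cols, (best_row + 0.5) / rows


def to_pixels(point: Tuple[float, float], width: int, height: int) -> Tuple[float, float]:
    """Scale a normalized (x, y) point to pixel coordinates of a width x height image."""
    x, y = point
    return x * width, y * height


hm = [[0.0] * 4 for _ in range(4)]
hm[1][3] = 0.9  # strongest response in row 1, column 3
print(to_pixels(heatmap_argmax(hm), 640, 480))
```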