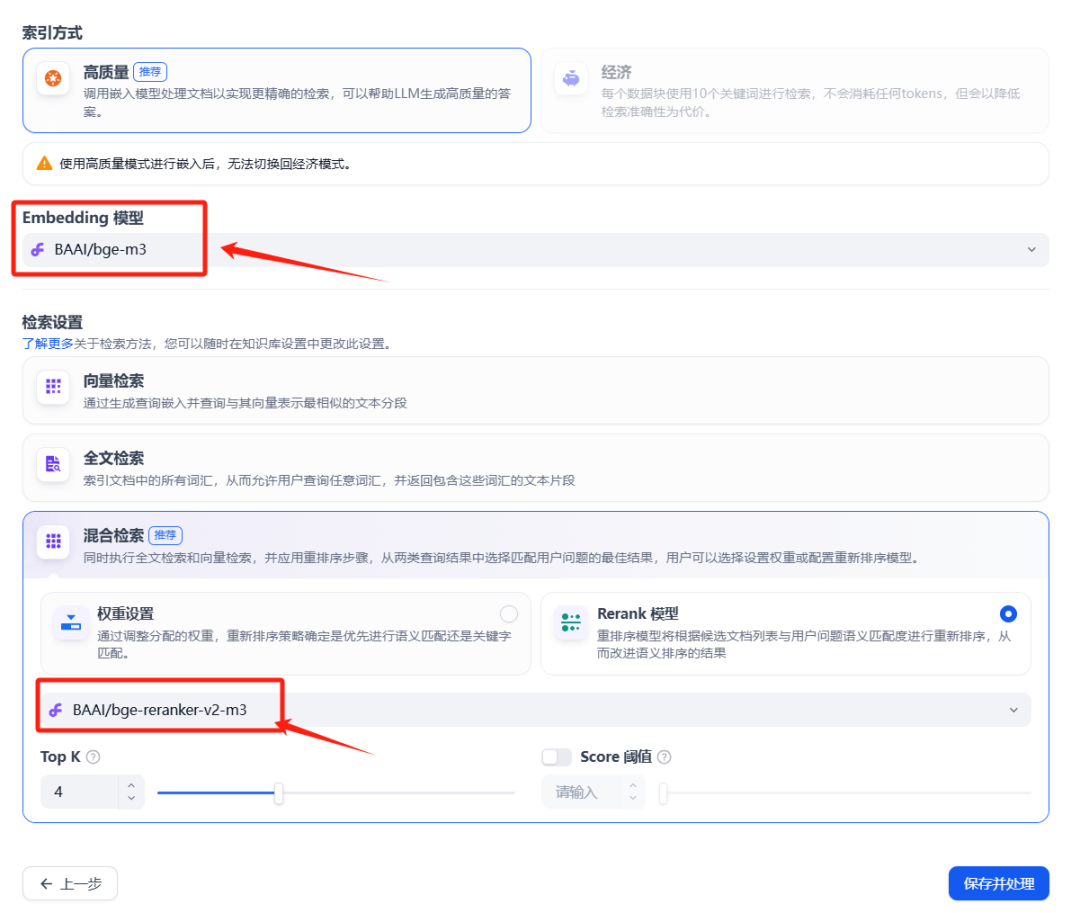

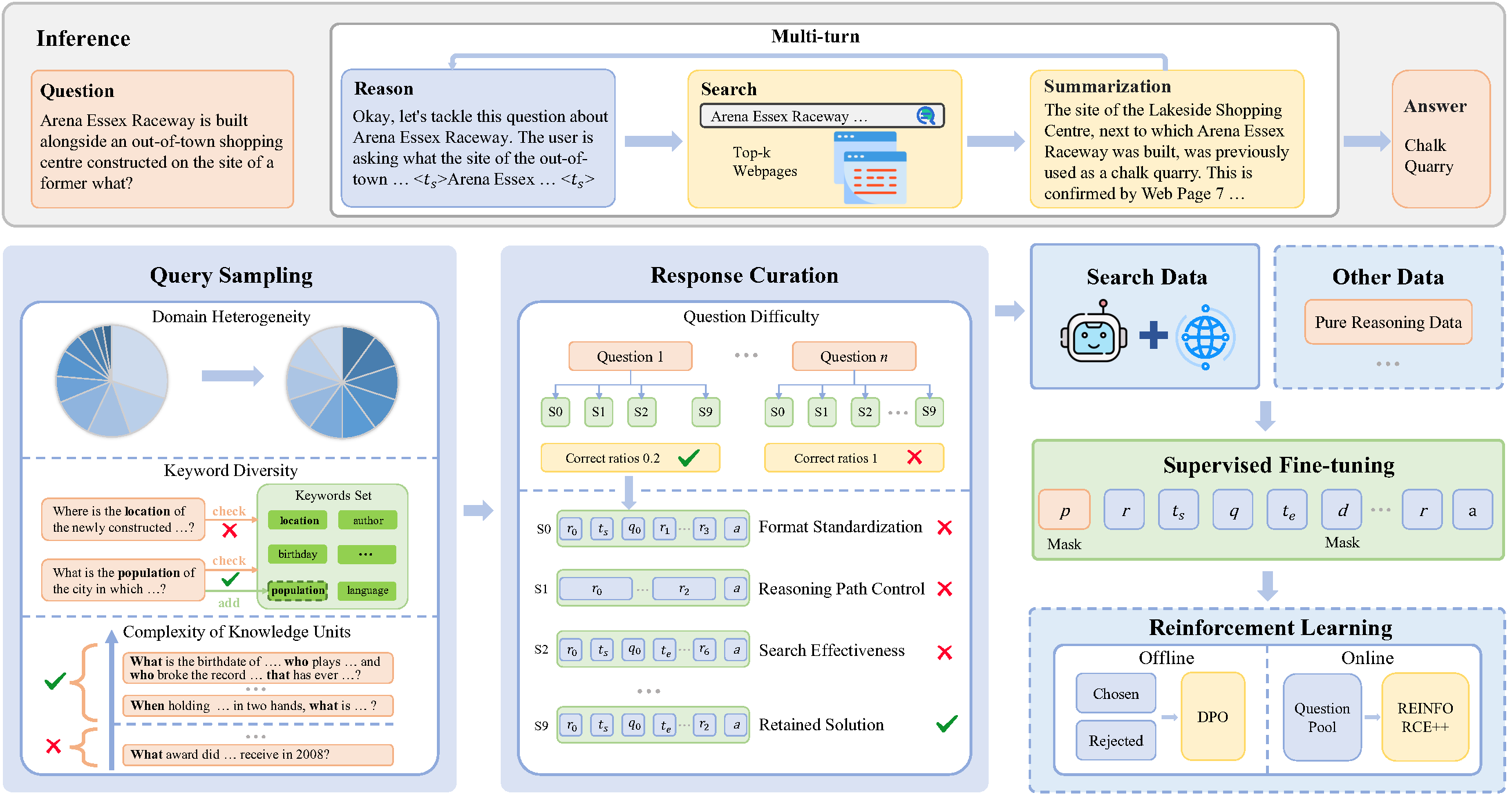

SimpleDeepSearcher is an open-source framework designed to enhance the capabilities of large language models (LLMs) on complex information-retrieval tasks. It synthesizes high-quality reasoning and search trajectories by simulating real web search behavior, so that models can be trained efficiently without large amounts of data. Unlike traditional retrieval-augmented generation (RAG) or reinforcement learning (RL) approaches, SimpleDeepSearcher uses a small amount of carefully selected data, together with knowledge distillation and self-distillation, to enable a model to carry out complex reasoning and search autonomously. The project was developed by the RUCAIBox team and is released under the MIT license. It targets researchers and developers who want to improve the search capabilities of large language models. The official documentation and code are hosted on GitHub and were last updated in April 2025.

Feature List

- Web search simulation: simulates the search behavior of real users in an open web environment, generating multi-turn reasoning and search trajectories.

- Data synthesis and filtering: generates high-quality training data through diverse query sampling and multi-dimensional response filtering.

- Efficient supervised fine-tuning: supervised fine-tuning (SFT) needs only a small amount of selected data, which reduces computational cost.

- Compatible with multiple models: supports both existing base LLMs and chat models, with no additional cold-start instruction fine-tuning.

- Open-source code and documentation: complete training code, inference code, and model checkpoints are provided for development and research.

Usage Guide

Installation process

SimpleDeepSearcher is an open-source Python project and requires Python 3.10 or later. The detailed installation steps are as follows:

- Clone the repository

Clone the SimpleDeepSearcher repository locally by running the following commands in a terminal:

```bash
git clone https://github.com/RUCAIBox/SimpleDeepSearcher.git
cd SimpleDeepSearcher
```

- Create a virtual environment

Use conda to create and activate a virtual environment so that dependencies stay isolated:

```bash
conda create --name simpledeepsearcher python=3.10
conda activate simpledeepsearcher
```

- Install dependencies

Install the core libraries the project requires, such as vLLM, DeepSpeed, and datasets:

```bash
pip install vllm==0.6.5
pip install packaging ninja flash-attn --no-build-isolation
pip install deepspeed accelerate datasets
```

- Configure the search API

SimpleDeepSearcher uses a Google search API for online search. Users need to obtain a subscription key and the endpoint URL of the search service and pass them to the script at runtime. The example endpoint below is Serper's Google Search API:

```bash
export GOOGLE_SUBSCRIPTION_KEY="YOUR_KEY"
export GOOGLE_ENDPOINT="https://google.serper.dev/search"
```

- Prepare the model paths

Specify the paths to the reasoning model and the summarization model:

```bash
export MODEL_PATH="/path/to/your/reasoning_model"
export SUMMARIZATION_MODEL_PATH="/path/to/your/summarization_model"
```

- Run the inference script

After completing the configuration, run the inference script for testing or deployment:

```bash
export CUDA_VISIBLE_DEVICES=0,1
mkdir -p output  # the log redirect below fails if this directory does not exist
python -u inference/inference.py \
    --dataset_name YOUR_DATASET_NAME \
    --cache_dir_base cache \
    --output_dir_base output \
    --model_path "$MODEL_PATH" \
    --summarization_model_path "$SUMMARIZATION_MODEL_PATH" \
    --summarization_model_url YOUR_SUMMARIZATION_MODEL_URL \
    --google_subscription_key "$GOOGLE_SUBSCRIPTION_KEY" \
    --google_endpoint "$GOOGLE_ENDPOINT" > output/output.log 2>&1
```
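Before launching a long inference run, it can help to confirm that the variables exported above are actually set. The following is a minimal Python sketch and is not part of the repository. `check_env` and `build_inference_command` are hypothetical helper names, and the flags simply mirror the command shown above.

```python
import os
from collections.abc import Mapping

# Variables exported in the installation steps above.
REQUIRED_VARS = (
    "GOOGLE_SUBSCRIPTION_KEY",
    "GOOGLE_ENDPOINT",
    "MODEL_PATH",
    "SUMMARIZATION_MODEL_PATH",
)


def check_env(env: Mapping[str, str]) -> list[str]:
    """Return the names of required variables that are missing or empty."""
    return [name for name in REQUIRED_VARS if not env.get(name)]


def build_inference_command(env: Mapping[str, str], dataset_name: str,
                            summarization_url: str) -> list[str]:
    """Assemble the argument list for inference/inference.py (hypothetical helper)."""
    missing = check_env(env)
    if missing:
        raise ValueError(f"missing environment variables: {', '.join(missing)}")
    return [
        "python", "-u", "inference/inference.py",
        "--dataset_name", dataset_name,
        "--cache_dir_base", "cache",
        "--output_dir_base", "output",
        "--model_path", env["MODEL_PATH"],
        "--summarization_model_path", env["SUMMARIZATION_MODEL_PATH"],
        "--summarization_model_url", summarization_url,
        "--google_subscription_key", env["GOOGLE_SUBSCRIPTION_KEY"],
        "--google_endpoint", env["GOOGLE_ENDPOINT"],
    ]


if __name__ == "__main__":
    missing = check_env(os.environ)
    if missing:
        print("Please export:", ", ".join(missing))
    else:
        # Pass the list to subprocess.run(cmd) to launch inference; it is not
        # printed here because it contains the API key.
        cmd = build_inference_command(os.environ, "YOUR_DATASET_NAME",
                                      "YOUR_SUMMARIZATION_MODEL_URL")
        print("All required variables are set;", len(cmd), "arguments prepared.")
```

Returning an argument list rather than a shell string avoids quoting problems when paths contain spaces.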

Main Features

The core function of SimpleDeepSearcher is to enhance the reasoning of large language models through web search. The main features work as follows:

- Data synthesis and search trajectory generation

SimpleDeepSearcher generates multi-turn reasoning trajectories by simulating user search behavior in a real web environment. Users can configure the `data_synthesis` module, specifying search terms and question types. The system automatically samples diverse questions from open-domain QA resources and retrieves relevant web content through the Google search API. The generated data is saved in the `cache` folder. It includes the questions, search queries, web page results, and reasoning paths.

Operational steps:

- Edit `data_synthesis_config.json` to set the query sampling parameters (e.g., domain diversity, keyword complexity).

- Run `python data_synthesis.py` to generate the initial data.

- Check the `cache/synthesis_data` directory to confirm that data generation is complete.
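The text above describes question sampling in terms of domain diversity and keyword complexity but does not give the algorithm. One simple way to approximate diverse sampling is greedy selection. At each step it prefers questions from unseen domains and penalizes keyword overlap with questions already chosen. The sketch below is an illustrative assumption, not the project's implementation. The `Question` fields, the scoring weights, and the use of keyword count as a complexity proxy are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Question:
    text: str
    domain: str
    keywords: frozenset[str]


def jaccard(a: frozenset[str], b: frozenset[str]) -> float:
    """Keyword-set similarity in [0, 1]; 0 when both sets are empty."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def sample_diverse(pool: list[Question], k: int,
                   domain_bonus: float = 1.0,
                   complexity_weight: float = 0.1) -> list[Question]:
    """Greedily pick up to k questions, favouring new domains, novel keywords,
    and (as a rough, hypothetical complexity proxy) more keywords."""
    selected: list[Question] = []
    remaining = list(pool)
    while remaining and len(selected) < k:
        seen_domains = {q.domain for q in selected}

        def score(q: Question) -> float:
            # Novelty is 1 minus the highest overlap with any selected question.
            novelty = 1.0 - max((jaccard(q.keywords, s.keywords) for s in selected),
                                default=0.0)
            bonus = domain_bonus if q.domain not in seen_domains else 0.0
            return novelty + bonus + complexity_weight * len(q.keywords)

        best = max(remaining, key=score)
        selected.append(best)
        remaining.remove(best)
    return selected
```

Greedy selection is quadratic in the pool size. That is fine for a few thousand candidates, but a real pipeline over large QA corpora would likely pre-bucket questions by domain first.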

- Data filtering and optimization

The project provides multi-dimensional response filtering to ensure the quality of the training data. The `response_curation.py` script filters data based on criteria such as question difficulty, reasoning path length, and search effectiveness.

Operational steps:

- Run the following command to start filtering:

```bash
python response_curation.py --input_dir cache/synthesis_data --output_dir cache/curated_data
```

- The filtered data is saved in the `cache/curated_data` directory, where only high-quality training samples are retained.
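The filtering criteria mentioned above (question difficulty, reasoning path length, search effectiveness) can be pictured as simple predicates over each synthesized trajectory. The sketch below is a hypothetical illustration. The record fields are assumptions, not values taken from `response_curation.py`. So is the use of the success rate across several sampled responses as a difficulty signal, and so is every threshold.

```python
from dataclasses import dataclass


@dataclass
class Trajectory:
    question: str
    reasoning: str           # full reasoning text of one response
    num_searches: int        # search calls issued in this response
    correct: bool            # whether the final answer matched the reference
    rollout_accuracy: float  # fraction of sampled responses to this question that were correct


def keep(t: Trajectory, max_reasoning_words: int = 4000,
         max_searches: int = 8, max_rollout_accuracy: float = 0.5) -> bool:
    """Return True if a trajectory passes all (hypothetical) quality checks."""
    if not t.correct:
        return False  # only keep responses that reach the right answer
    if t.rollout_accuracy > max_rollout_accuracy:
        return False  # too easy: most sampled responses already solve it
    if len(t.reasoning.split()) > max_reasoning_words:
        return False  # overly long reasoning path
    # Require at least one search, but not an excessive number of them.
    return 1 <= t.num_searches <= max_searches


def curate(trajectories: list[Trajectory], **thresholds) -> list[Trajectory]:
    """Keep only trajectories that pass `keep` under the given thresholds."""
    return [t for t in trajectories if keep(t, **thresholds)]
```

Exposing the thresholds as keyword arguments mirrors the idea that the filtering parameters are meant to be tuned rather than fixed.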

- Supervised fine-tuning

SimpleDeepSearcher uses supervised fine-tuning (SFT) to optimize large language models. Users need to prepare a base model (e.g., Qwen2.5-32B) and fine-tune it on the filtered data.

Operational steps:

- Edit `sft_config.json` to specify the model path and training parameters (e.g., learning rate, batch size).

- Run the following command to start fine-tuning:

```bash
python sft_train.py --config sft_config.json
```

- After training completes, the model checkpoints are saved in the `output/checkpoints` directory.
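When choosing the batch size in the training configuration, it helps to see how many optimizer steps a small dataset actually yields. In common data-parallel setups such as DeepSpeed, the global batch size equals the per-device batch size times the number of gradient-accumulation steps times the number of GPUs. The sketch below applies that arithmetic to the 871-sample figure mentioned at the end of this article. The per-device batch size, accumulation steps, GPU count, and epoch count are hypothetical and are not taken from `sft_config.json`.

```python
import math


def global_batch_size(per_device: int, grad_accum: int, num_gpus: int) -> int:
    """Samples consumed per optimizer step under plain data parallelism."""
    return per_device * grad_accum * num_gpus


def optimizer_steps(num_samples: int, batch: int, epochs: int) -> int:
    """Total optimizer steps, counting a final partial batch as one step per epoch."""
    return math.ceil(num_samples / batch) * epochs


if __name__ == "__main__":
    # Hypothetical settings: 1 sample per GPU, 8 accumulation steps, 8 GPUs, 3 epochs.
    batch = global_batch_size(per_device=1, grad_accum=8, num_gpus=8)
    steps = optimizer_steps(num_samples=871, batch=batch, epochs=3)
    print(batch, steps)  # 64 42
```

With only a few dozen optimizer steps in total, warmup length and learning-rate schedule have a large effect. Exact counts also depend on whether the trainer drops the last partial batch.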

- Inference and testing

Users can test the model's search and reasoning capabilities with the inference script. The inference results are written to the `output/results` directory and include the generated answers and reasoning paths.

Operational steps:

- Configure the inference parameters (e.g., dataset name, output directory).

- Run the inference script (see the command in the installation section).

- Check `output/output.log` for the inference results.
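The text above does not say how answers are scored. A common choice for open-domain QA, swapped in here as an assumption, is normalized exact match in the style of the SQuAD evaluation script. That normalization lowercases the text, strips punctuation and the articles "a", "an", and "the", and collapses whitespace. The sketch below assumes you have already extracted (prediction, gold answers) pairs from the inference output. It does not parse `output/output.log`, whose format is not described here.

```python
import re
import string

PUNCT = frozenset(string.punctuation)


def normalize(text: str) -> str:
    """Lowercase, drop punctuation and English articles, collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in PUNCT)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction: str, gold_answers: list[str]) -> bool:
    """True if the normalized prediction equals any normalized gold answer."""
    pred = normalize(prediction)
    return any(pred == normalize(g) for g in gold_answers)


def accuracy(pairs: list[tuple[str, list[str]]]) -> float:
    """Fraction of (prediction, gold answers) pairs that match exactly."""
    if not pairs:
        return 0.0
    return sum(exact_match(p, golds) for p, golds in pairs) / len(pairs)
```

Exact match is strict. Long free-form answers are often scored with token-level F1 or an LLM judge instead.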

Featured Functions

- Diverse query sampling: SimpleDeepSearcher uses a diverse query sampling strategy that selects questions based on domain heterogeneity, keyword diversity, and knowledge-unit complexity. Users can adjust the sampling parameters in `query_sampling_config.json` so that the generated questions cover a wide range of domains and difficulty levels.

- Knowledge distillation and self-distillation: the project uses strong reasoning models to generate high-quality training data via knowledge distillation. Users can specify a strong pre-trained model (e.g., from the LLaMA or GPT families) as the teacher model and run the `distillation.py` script to generate data.

- Real-time web search: SimpleDeepSearcher supports real-time web search and uses the Google search API to retrieve up-to-date information dynamically. Users need to make sure the API key is valid and the network connection works.
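The real-time search described above is interleaved with the model's reasoning. The model emits a query, the system runs the search, and the results are fed back before generation continues. The sketch below shows that control loop under stated assumptions. `generate` and `search` are stand-ins that you would back with a real model server and a real search API. The `<search>`/`</search>` and `<result>` tags are hypothetical markers and are not necessarily the special tokens SimpleDeepSearcher uses. The sketch also leaves out the separate summarization model that the inference command is configured with.

```python
import re
from collections.abc import Callable

# Hypothetical markers: a query is a <search>...</search> span ending the chunk.
QUERY_RE = re.compile(r"<search>(.*?)</search>\s*$", re.DOTALL)


def reason_with_search(question: str,
                       generate: Callable[[str], str],
                       search: Callable[[str], str],
                       max_searches: int = 5) -> str:
    """Alternate model generation and web search until the model stops querying.

    `generate(prompt)` should return the next chunk of model output, stopping
    right after a closing </search> tag or at the final answer.
    """
    transcript = f"Question: {question}\n"
    searches = 0
    while True:
        chunk = generate(transcript)
        transcript += chunk
        match = QUERY_RE.search(chunk)
        if match is None or searches >= max_searches:
            return transcript  # answered, or search budget exhausted
        searches += 1
        results = search(match.group(1).strip())
        # Feed the retrieved text back so the next generation step can use it.
        transcript += f"\n<result>\n{results}\n</result>\n"
```

For example, a stub model that issues one `<search>` query and then answers after seeing a `<result>` block produces a transcript containing the question, the query, the stub results, and the final answer. The `max_searches` budget guarantees termination even if the model keeps issuing queries.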

Notes

- Ensure that the network environment is stable to support real-time web searches.

- Check the model path and API key configuration to avoid runtime errors.

- Update dependency versions regularly to maintain compatibility.

- The project code and documentation are released under the MIT license. Users are asked to cite the source (e.g., the paper, `@article{sun2025simpledeepsearcher}`).

Application Scenarios

- Academic research

SimpleDeepSearcher helps researchers improve the performance of large language models on information-retrieval tasks. For example, in literature search or data analysis, the model can quickly locate relevant papers or datasets through web search, making research more efficient.

- Q&A system development

Developers can use SimpleDeepSearcher to build intelligent Q&A systems. By simulating user search behavior, the system produces accurate answers, making it suitable for customer-service bots or educational platforms.

- Complex problem reasoning

For problems that require multi-step reasoning (e.g., math or logic problems), SimpleDeepSearcher helps models produce more accurate answers by generating reasoning trajectories. This makes it well suited to online education and competition platforms.

FAQ

- What prerequisites does SimpleDeepSearcher require?

You need a Python 3.10+ environment, a Google search API key, and a pre-trained large language model. Install all dependencies and configure the correct model paths.

- How is the quality of the generated data ensured?

The project provides multi-dimensional response filtering. Users can adjust the filtering parameters (e.g., question difficulty, reasoning path length) to retain only high-quality data.

- Are other search APIs supported?

The current version mainly supports the Google search API. Users can modify the code to work with other search services, but they need to adjust the API call logic accordingly.

- How long does training take?

Training time depends on the model size and the amount of data. Fine-tuning a Qwen2.5-32B model on 871 high-quality samples typically takes several hours on GPUs.
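Supporting another search service means adjusting the API call logic, as noted above. One way to keep that change contained is to put each provider behind a small interface. The sketch below is an assumption about how such an adapter could look, not code from the repository. The Serper request shape is a POST with an `X-API-KEY` header and a JSON body containing `q`, and web hits come back under `organic`. This follows Serper's public API as commonly documented; check the provider's current docs before relying on it. Requests are built with the standard library, and only `run_search` touches the network.

```python
import json
import urllib.request
from typing import Protocol


class SearchBackend(Protocol):
    """Minimal interface a search-provider adapter could implement (hypothetical)."""

    def build_request(self, query: str) -> urllib.request.Request: ...
    def parse(self, payload: dict) -> list[dict]: ...


class SerperBackend:
    """Adapter for a Serper-style endpoint such as https://google.serper.dev/search."""

    def __init__(self, api_key: str, endpoint: str = "https://google.serper.dev/search"):
        self.api_key = api_key
        self.endpoint = endpoint

    def build_request(self, query: str) -> urllib.request.Request:
        body = json.dumps({"q": query}).encode("utf-8")
        return urllib.request.Request(
            self.endpoint,
            data=body,
            headers={"X-API-KEY": self.api_key, "Content-Type": "application/json"},
            method="POST",
        )

    def parse(self, payload: dict) -> list[dict]:
        # Normalize organic hits into provider-independent title/url/snippet dicts.
        return [
            {"title": hit.get("title", ""), "url": hit.get("link", ""),
             "snippet": hit.get("snippet", "")}
            for hit in payload.get("organic", [])
        ]


def run_search(backend: SearchBackend, query: str, timeout: float = 20.0) -> list[dict]:
    """Send the request and parse the JSON response (performs network I/O)."""
    with urllib.request.urlopen(backend.build_request(query), timeout=timeout) as resp:
        return backend.parse(json.load(resp))
```

Adding another provider would then mean writing one more class with `build_request` and `parse`, leaving the reasoning loop untouched.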