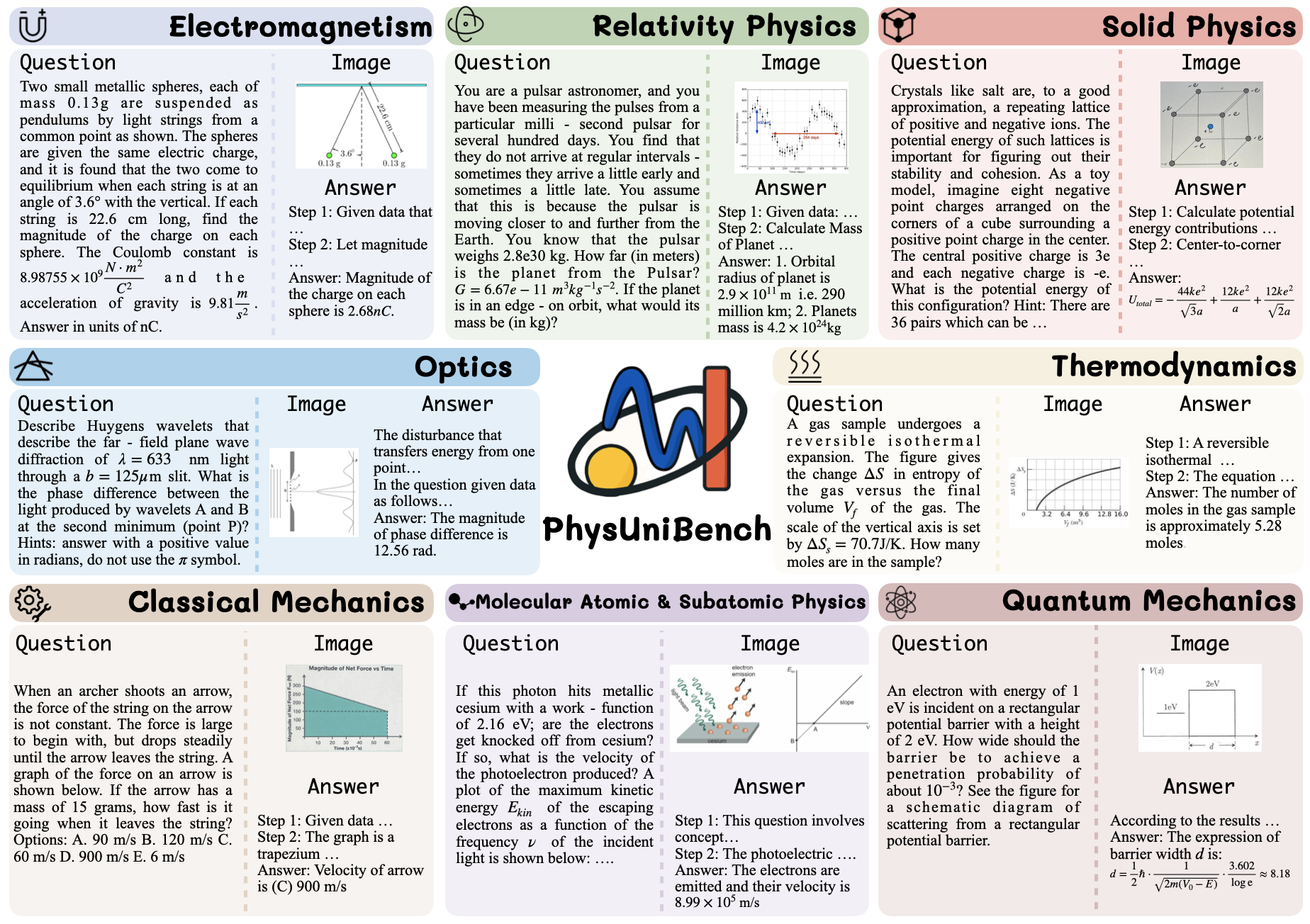

PhysUniBenchmark is an open-source benchmarking tool for multimodal physics problems, hosted on GitHub and developed by PrismaX-Team. It is designed to assess how well multimodal large models handle undergraduate-level physics problems, with a particular focus on complex scenarios that require both conceptual understanding and visual interpretation. The dataset contains diverse physics problems spanning domains such as mechanics, electromagnetism, and optics, presented as textual descriptions, formulas, images, and diagrams. The tool gives researchers and developers a standardized testing platform for analyzing how large models perform on physics reasoning and multimodal tasks. The project documentation is detailed and easy to follow, which makes the tool suitable for academic research and model optimization.

Feature List

- Provides a large-scale multimodal dataset of undergraduate-level physics problems covering a wide range of physics disciplines.

- Supports standardized evaluation of the reasoning capabilities of multimodal large models.

- Includes a variety of problem formats, such as text, formulas, images, and diagrams, to test comprehensive understanding.

- Open-source code and datasets that users can freely download, modify, and extend.

- Detailed documentation and user guides for a quick start.

- Generates evaluation reports that break down model performance by physics domain.

Usage Guide

Acquisition and Installation

PhysUniBenchmark is an open-source project hosted on GitHub. To set it up, follow these steps:

- Clone the repository

Open a terminal and run the following command to clone the project locally: `git clone https://github.com/PrismaX-Team/PhysUniBenchmark.git`. Make sure Git is installed; if it is not, download and install it from the official Git website.

- Install dependencies

Change into the project directory with `cd PhysUniBenchmark`. The project requires Python (version 3.8 or later is recommended). Install the required dependencies by running:

`pip install -r requirements.txt`

The `requirements.txt` file lists all the necessary Python libraries, such as NumPy, Pandas, and Matplotlib. If the file is missing, install the libraries manually using the dependency list in the project documentation.

- Download the dataset

The dataset is stored either in the GitHub repository or at external links. Users can download it directly from the repository's `data` folder or follow the link in the documentation to obtain the full dataset. After downloading, unzip it into the designated folder in the project directory (the default path is `data/`).

- Configure the environment

Make sure your environment can run or access a multimodal large model (e.g., GPT-4o or an open-source alternative). Configure environment variables or model paths according to the model's API or local deployment requirements. Detailed configuration steps are described in the project's `README.md`.
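The README defines the actual configuration steps. As a rough illustration of the two setups mentioned here (cloud API versus local deployment), the following sketch reads either an API key or a local model path from environment variables. The variable names `MODEL_API_KEY` and `MODEL_PATH`, the `ModelConfig` class, and the helper `resolve_model_config` are hypothetical and are not taken from the project.

```python
import os
from dataclasses import dataclass
from pathlib import Path
from typing import Optional


@dataclass
class ModelConfig:
    name: str
    api_key: Optional[str] = None      # used for cloud APIs such as GPT-4o
    local_path: Optional[Path] = None  # used for locally deployed models


def resolve_model_config(name: str,
                         api_key_var: str = "MODEL_API_KEY",
                         path_var: str = "MODEL_PATH") -> ModelConfig:
    """Read model settings from (hypothetically named) environment variables."""
    api_key = os.environ.get(api_key_var)
    raw_path = os.environ.get(path_var)
    local_path = Path(raw_path) if raw_path else None
    # Fail fast if neither an API key nor a local model path is configured.
    if api_key is None and local_path is None:
        raise RuntimeError(
            f"Set {api_key_var} for an API model or {path_var} for a local model.")
    if local_path is not None and not local_path.exists():
        raise FileNotFoundError(f"Model path does not exist: {local_path}")
    return ModelConfig(name=name, api_key=api_key, local_path=local_path)
```

Checking the configuration up front like this surfaces a missing key or path before a long evaluation run starts.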

Usage Workflow

The core purpose of PhysUniBenchmark is to evaluate how multimodal large models perform on physics problems. The workflow is as follows:

- Prepare the model

Prepare a large model that accepts multimodal input (text and images). Common choices include GPT-4o, LLaVA, and other open-source models. Make sure the model is deployed and can be called either through an API or locally.

- Load the dataset

The project provides a Python script, `load_data.py`, for loading the dataset. Run `python load_data.py --path data/`. The script parses each question, including its text, formulas, and images, and converts it into an input format the model can process.
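To show what loading might involve, here is a minimal sketch that collects problem records from JSON files under `data/`. It assumes, without confirmation from the repository, that each file holds either one problem object or a list of them; `load_problems` is a hypothetical helper, not the interface of `load_data.py`.

```python
import json
from pathlib import Path
from typing import Any, Dict, List


def load_problems(data_dir: str) -> List[Dict[str, Any]]:
    """Collect problem records from every *.json file under data_dir (layout assumed)."""
    problems: List[Dict[str, Any]] = []
    for path in sorted(Path(data_dir).rglob("*.json")):
        with path.open(encoding="utf-8") as f:
            content = json.load(f)
        # Accept either a single problem object or a list of problems per file.
        items = content if isinstance(content, list) else [content]
        for item in items:
            # Remember where each record came from, which helps when debugging bad entries.
            item.setdefault("source_file", str(path))
            problems.append(item)
    return problems
```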

- Run the evaluation

Use the provided evaluation script, `evaluate.py`, to test model performance. Example command: `python evaluate.py --model <model_name> --data_path data/ --output results/`. Here, `<model_name>` specifies the model name or API key, `--data_path` is the path to the dataset, and `--output` is the directory where the evaluation results are saved.

The script automatically feeds the questions to the model, collects its answers, and generates an evaluation report.
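The feed, collect, and score loop can be sketched as follows. The model is represented by a hypothetical callable that takes the question text and an optional image path and returns an answer string. Exact matching after light normalization is a simplifying assumption: grading physics answers generally has to handle units, equivalent expressions, and numerical tolerance. This is an illustration, not the logic of `evaluate.py`.

```python
from typing import Any, Callable, Dict, List, Optional

# Hypothetical model interface: (question text, optional image path) -> answer string.
# An API client or a locally deployed model could be wrapped behind a function with this shape.
ModelFn = Callable[[str, Optional[str]], str]


def normalize(answer: str) -> str:
    """Lower-case and collapse whitespace; a deliberately crude comparison key."""
    return " ".join(answer.strip().lower().split())


def run_evaluation(model: ModelFn, problems: List[Dict[str, Any]]) -> Dict[str, Any]:
    """Feed each problem to the model, record the prediction, and score it."""
    records = []
    for problem in problems:
        prediction = model(problem["question"], problem.get("image"))
        records.append({
            "question": problem["question"],
            "domain": problem.get("domain", "unknown"),  # assumed optional field
            "prediction": prediction,
            "correct": normalize(prediction) == normalize(str(problem["answer"])),
        })
    accuracy = sum(r["correct"] for r in records) / len(records) if records else 0.0
    return {"accuracy": accuracy, "records": records}
```

Writing `records` out with `csv.DictWriter` would give a per-question report similar in spirit to the files saved under `results/`.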

- Analyze the results

When the evaluation finishes, the results are saved in the `results/` folder as CSV or JSON files. The report includes the model's accuracy, an error analysis, and performance statistics for each physics domain (e.g., mechanics, electromagnetism). The `visualize.py` script generates charts from these results: `python visualize.py --results results/eval_report.csv`. The charts include bar charts and line graphs that show how model performance differs across domains.
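A per-domain breakdown like the one in the report can be computed with the standard library alone. This sketch assumes a CSV with a `domain` column and a `correct` column holding 1/0 or true/false; those column names and the helper `accuracy_by_domain` are hypothetical and may not match the actual `eval_report.csv`.

```python
import csv
from collections import defaultdict
from typing import Dict


def accuracy_by_domain(csv_path: str) -> Dict[str, float]:
    """Group rows by the (assumed) 'domain' column and average the 'correct' flags."""
    totals: Dict[str, int] = defaultdict(int)
    hits: Dict[str, int] = defaultdict(int)
    with open(csv_path, newline="", encoding="utf-8") as f:
        for row in csv.DictReader(f):
            domain = row["domain"]
            totals[domain] += 1
            # Treat common truthy spellings as a correct answer.
            if row["correct"].strip().lower() in {"1", "true", "yes"}:
                hits[domain] += 1
    return {domain: hits[domain] / totals[domain] for domain in sorted(totals)}
```

The resulting dictionary could be passed to Matplotlib's `bar` function to draw a chart similar to those produced by `visualize.py`.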

Featured Operations

- Testing multimodal problems

Problems in the dataset combine text, formulas, and images. For example, a mechanics problem may include a textual description of an object's motion, a free-body diagram, and a velocity-time graph. The `preprocess.py` script prepares these inputs so that the model can parse them correctly: `python preprocess.py --input data/sample_problem.json`. The preprocessed data is converted into a format the model can consume, such as JSON or embedding vectors.
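Many API-based multimodal models accept images as base64-encoded strings alongside the text prompt. The sketch below packages a question and an optional image file that way. The payload layout and the helper name `build_multimodal_input` are hypothetical and do not reflect the actual output of `preprocess.py`.

```python
import base64
import mimetypes
from pathlib import Path
from typing import Any, Dict, Optional


def build_multimodal_input(question: str, image_path: Optional[str]) -> Dict[str, Any]:
    """Bundle the question text with an optional base64-encoded image (layout is hypothetical)."""
    payload: Dict[str, Any] = {"text": question, "image": None}
    if image_path:
        data = Path(image_path).read_bytes()
        # Guess the MIME type from the file extension, e.g. ".png" -> "image/png".
        mime, _ = mimetypes.guess_type(image_path)
        payload["image"] = {
            "mime_type": mime or "application/octet-stream",
            "data_base64": base64.b64encode(data).decode("ascii"),
        }
    return payload
```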

- Custom extensions

Users can add new questions to the dataset. Each question must follow the JSON template in the project documentation, which includes fields such as `question` (the problem description), `image` (the image path), and `answer` (the correct answer). After adding questions, run `validate_data.py` to check the data format: `python validate_data.py --input data/new_problem.json`.

- Comparative analysis

The project supports evaluating multiple models in a single run. When multiple model names are specified in `evaluate.py`, the script generates a comparison report showing how the models differ in performance on the same problems.
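For the custom-question workflow described under Custom extensions, a basic structural check could look like the following sketch. It only verifies the three fields named in the documentation (`question`, `image`, `answer`). The accepted types are assumptions, the real template may contain additional fields, and `validate_problem` and `validate_file` are hypothetical helpers rather than the implementation of `validate_data.py`.

```python
import json
from typing import Any, Dict, List

# Fields named in the documentation; the accepted types are assumptions.
REQUIRED_FIELDS = {
    "question": (str,),
    "image": (str,),
    "answer": (str, int, float),
}


def validate_problem(problem: Dict[str, Any]) -> List[str]:
    """Return error messages for one record; an empty list means these checks passed."""
    errors = []
    for field, expected_types in REQUIRED_FIELDS.items():
        if field not in problem:
            errors.append(f"missing field: {field}")
            continue
        value = problem[field]
        if not isinstance(value, expected_types):
            errors.append(f"{field}: unexpected type {type(value).__name__}")
        elif isinstance(value, str) and not value.strip():
            errors.append(f"{field}: empty string")
    return errors


def validate_file(path: str) -> List[str]:
    """Validate a JSON file holding one problem object or a list of them."""
    with open(path, encoding="utf-8") as f:
        data = json.load(f)
    items = data if isinstance(data, list) else [data]
    errors = []
    for i, item in enumerate(items):
        if not isinstance(item, dict):
            errors.append(f"item {i}: not a JSON object")
            continue
        errors.extend(f"item {i}: {msg}" for msg in validate_problem(item))
    return errors
```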

Notes

- Make sure you have enough local storage. The dataset can be large; at least 10 GB of free space is recommended.

- Model inference during evaluation may require a GPU; a machine with an NVIDIA GPU is recommended.

- If you use a cloud API (such as GPT-4o), make sure your network connection is stable and the correct API key is configured.
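A quick pre-flight check for the storage recommendation above can use `shutil.disk_usage` from the Python standard library. The helper name `has_free_space` is illustrative; adjust the threshold to the size of the dataset you actually download.

```python
import shutil


def has_free_space(path: str = ".", required_gb: float = 10.0) -> bool:
    """Return True if the filesystem holding `path` has at least `required_gb` GiB free."""
    free_bytes = shutil.disk_usage(path).free
    return free_bytes >= required_gb * 1024 ** 3
```

For example, calling `has_free_space("data")` before downloading tells you whether the dataset directory's drive meets the 10 GB suggestion.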

Application Scenarios

- Academic research

Researchers can use PhysUniBenchmark to test how multimodal large models perform on physics reasoning tasks, analyze their limitations, and gather data to guide model improvement.

- Model development

Developers can use the dataset to optimize the training of multimodal models on physics-related tasks, improving the models' visual and logical reasoning.

- Educational aids

Educators can use the dataset for teaching: to build physics test sets, to help students understand complex concepts, or to evaluate the performance of AI teaching tools.

QA

- What physics domains are supported by PhysUniBenchmark?

The dataset covers undergraduate-level mechanics, electromagnetism, optics, thermodynamics, and quantum mechanics, and includes a variety of question types.

- How do I add a custom question?

Create a question file that follows the JSON template in the project documentation, including the text, images, and answer, and then run `validate_data.py` to validate the format.

- What hardware is required?

A GPU is recommended to accelerate model inference; a CPU also works but is slower. At least 16 GB of RAM and 10 GB of storage are recommended.

- Are open-source models supported?

Yes. Any multimodal model, such as LLaVA or CLIP, can be used, provided the environment is configured according to the model's requirements.