The Google I/O 2025 developer conference showcased a long list of technical achievements, many of them impressive, underscoring Google's deep expertise in core AI technology. However, the sheer volume of announcements and the somewhat confusing way products were presented also left observers questioning Google's overall strategy and its ability to communicate with the market.

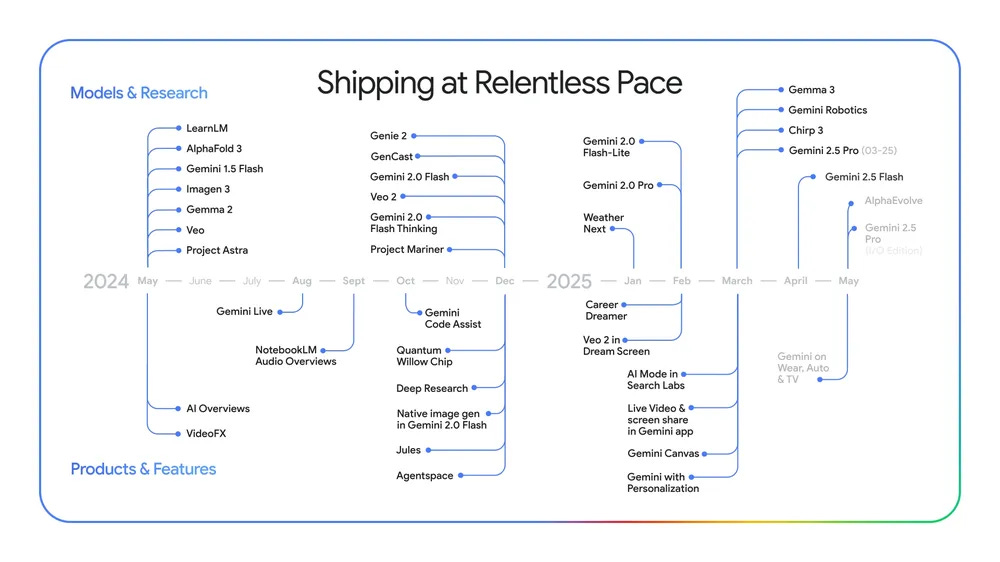

DeepMind employee Logan Kilpatrick has pointed out the remarkable progress Google AI has made since last year: the world's leading models, more than 400 million monthly users of the Gemini app, 480 trillion tokens processed per month (50x year-over-year growth), and more than 7 million developers building with the Gemini API (4x growth). These numbers are certainly eye-opening.

However, the official "mind map" provided by Google AI does not cover everything that was announced, and the "full recap" link shared by the CEO leads to a list of 27 articles. This way of delivering information raises questions about the effectiveness of Google's marketing strategy. Although the market seems to view Google's performance positively and its stock price has risen, the gap between its product strategy and market expectations remains confusing.

As some observers have pointed out, releasing too much information at once tends to blur the focus. Although Google has achieved many technical breakthroughs, whether it can turn them into competitive products remains its core challenge. One view is that Google is launching a series of unfinished prototypes and will refine them as the technology matures, which is not a bad strategy in itself. The key is making sure users know about these products and understand their value.

Core highlights of the launch and first impressions

Google's launch event was packed with content, covering the following areas:

- Veo 3: Generates 8-second high-quality videos with voice and sound effects.

- Flow: Aims to string together Veo 3's short videos into longer content, but it's not perfect yet.

- Gmail and related app integration: Provides broader context-aware, intelligent assistance.

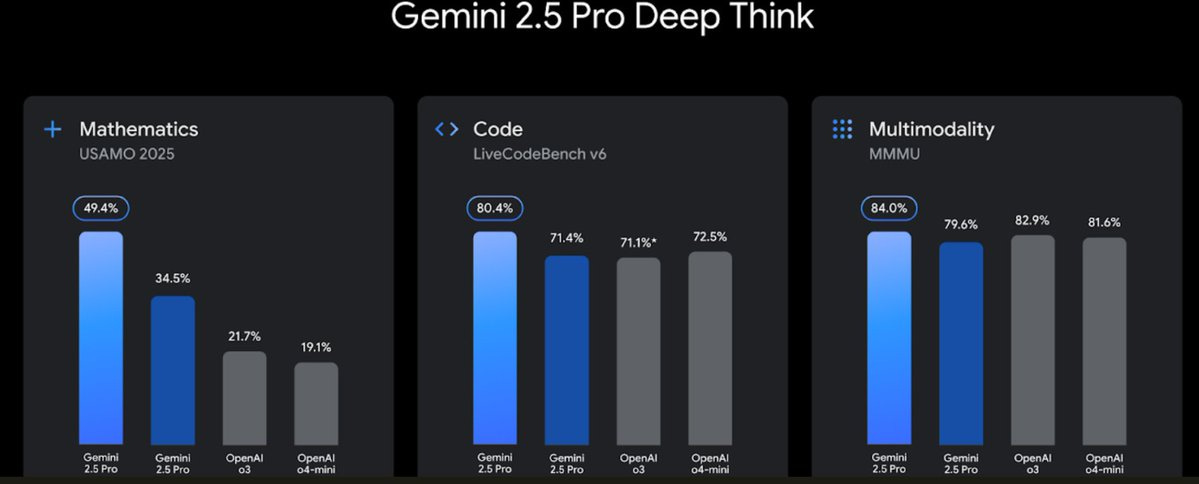

- Gemini 2.5 Flash and Gemini 2.5 Pro Deep Think: A new generation of models with improved performance.

- Gemma 3n: An open model that runs on phones with 2GB of RAM.

- Gemini Diffusion: An experimental text diffusion model with great potential but still in need of polish.

- Jules: An AI coding agent positioned against OpenAI's Codex, currently free to use.

- Agent Mode: A fully agentic mode that will be introduced across several products.

- Chrome Integration with Gemini: You can use the browser's open tabs as context.

- AI Search: Free for all users, with an agent mode and a dedicated shopping mode planned for the future.

- Real-time speech translation: Smoothly translates while imitating the speaker's tone of voice.

- Google Beam: a 3D real-time communication technology.

- Android XR Demo: A demonstration of the future direction, but still some time away from practical application.

- Google Live Experience: Provides augmented reality interaction through the cell phone camera.

- Google AI Ultra subscription: $250 per month.

Some of these products and features are already live, while others are still months away. The picture is complicated: free and paid offerings coexist, at widely varying levels of maturity.

Innovations and Challenges in Generative Media: Flow, Veo 3 and Imagen 4

The announcement drawing the most attention is Veo 3, which can generate video with native audio and produces stunning results. The image generation model has also been upgraded to Imagen 4, with support for up to 2K resolution and finer detail control, although it is somewhat overshadowed by video generation.

Google CEO Sundar Pichai says Veo 3 represents the state of the art in video generation. To serve filmmakers and creatives, Google has combined the best of Veo, Imagen, and Gemini in Flow, a new AI filmmaking tool now available to Google AI Pro and Ultra subscribers.

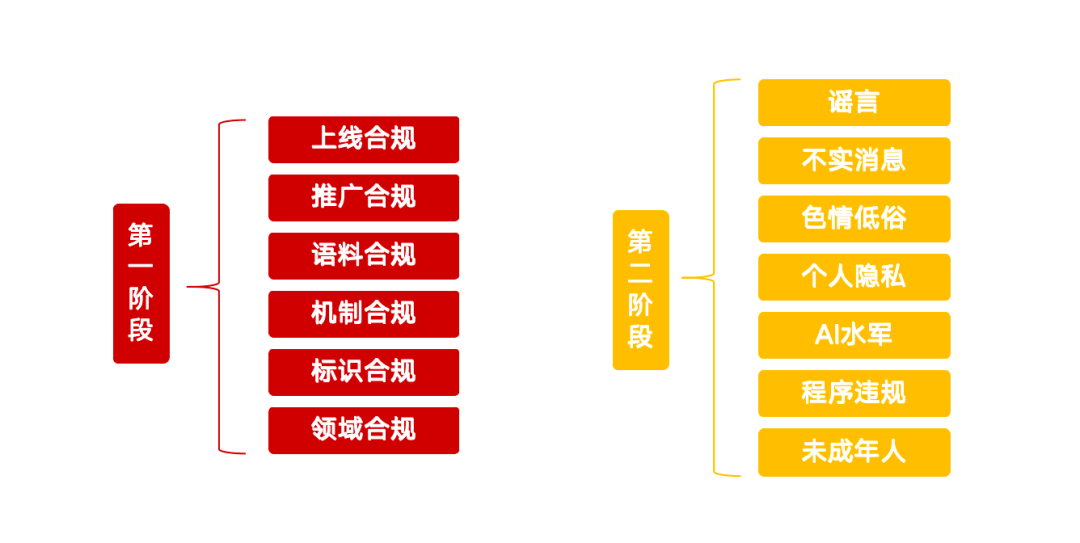

Users have shown great enthusiasm for Veo 3: Bayram Annakov demonstrated a clip of a "man waking up in a cold sweat", and Google shared a user's extension of a video of an eagle carrying a car. Meanwhile, users such as Pliny have used "jailbreaking" tactics to generate some restricted content, which has sparked a discussion about the boundaries of content moderation: why should some creative PG-13 content require a "jailbreak" to produce?

The combination of Flow and Veo 3 offers a first real taste of the practical potential of AI video generation. Its coherence, rich toolset, and built-in sound effects open up new possibilities, and the collaboration between DeepMind and Primordial Soup Labs may yield a genuinely rewarding body of short films.

Additionally, Google mentioned a music sandbox powered by Lyria 2 and introduced SynthID Detector, a tool for detecting AI-generated content. Meanwhile, Google Vids, which converts slideshows into videos and includes an AI avatar feature, raises some doubts about its usefulness and necessity. Google also showed Stitch, a tool said to generate designs and user interfaces from text prompts.

Gmail Integration: the long-awaited smart leap?

Sundar Pichai has announced that the personalized smart reply feature in Gmail, which allows Gemini to access a user's messages in Google apps and compose emails in the user's voice, will be rolling out to subscribers soon.

For a long time, users have hoped Gmail would become truly intelligent: more accurate calendar entries, extraction of key information with reminders, automatic sorting and filtering of emails, and so on. Google's proposed "inbox cleanup" features, such as "delete all unread emails from The Groomed Paw in the past year", are only a first step. More advanced requests, such as "Set up an AI filter to stop showing emails from The Groomed Paw unless they contain urgent messages or discounts of 50% or more" or "Alert me when Sarah replies about whether she's going to the appointment on Friday", have yet to be implemented.
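To make the "AI filter" request above concrete, here is a minimal sketch of the rule it would need to encode. This is not a Gmail or Gemini API: the `Email` class and `should_show` function are hypothetical, and the keyword list and percentage regex are crude stand-ins for the judgments ("is this urgent?", "is this a big discount?") that a real AI filter would presumably delegate to a model.

```python
import re
from dataclasses import dataclass


# Hypothetical email record for illustration; not a Gmail API type.
@dataclass
class Email:
    sender: str
    subject: str
    body: str


MUTED_SENDER = "The Groomed Paw"
# Keyword stand-in for a model's "is this urgent?" judgment.
URGENT_WORDS = ("urgent", "action required", "immediately")
# Matches percentages such as "50%" or "60 %".
PERCENT = re.compile(r"(\d{1,3})\s*%")


def max_discount(text: str) -> int:
    """Largest percentage mentioned in the text, or 0 if there is none."""
    return max((int(m) for m in PERCENT.findall(text)), default=0)


def should_show(email: Email) -> bool:
    """Hide mail from the muted sender unless it looks urgent or offers 50% or more off."""
    if MUTED_SENDER.lower() not in email.sender.lower():
        return True  # the rule only applies to one sender
    text = f"{email.subject} {email.body}".lower()
    if any(word in text for word in URGENT_WORDS):
        return True
    return max_discount(text) >= 50
```

For example, `should_show(Email("The Groomed Paw <news@groomedpaw.example>", "Spring sale", "Save 20% on grooming"))` hides the message, while the same sender announcing "50% off" would be shown. The hard part in practice is the fuzzy classification, which is exactly where an LLM-backed filter would add value over keywords.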

Integrating quick appointment scheduling into Gmail would undoubtedly be a huge step forward if it truly understood user preferences and adapted to existing schedules. AI-ghostwritten email may be useful in specific situations (for example, when a formal, professional, or courteous tone is required), but the details of execution are critical.

The Gemini Model Family: Continuing Evolution

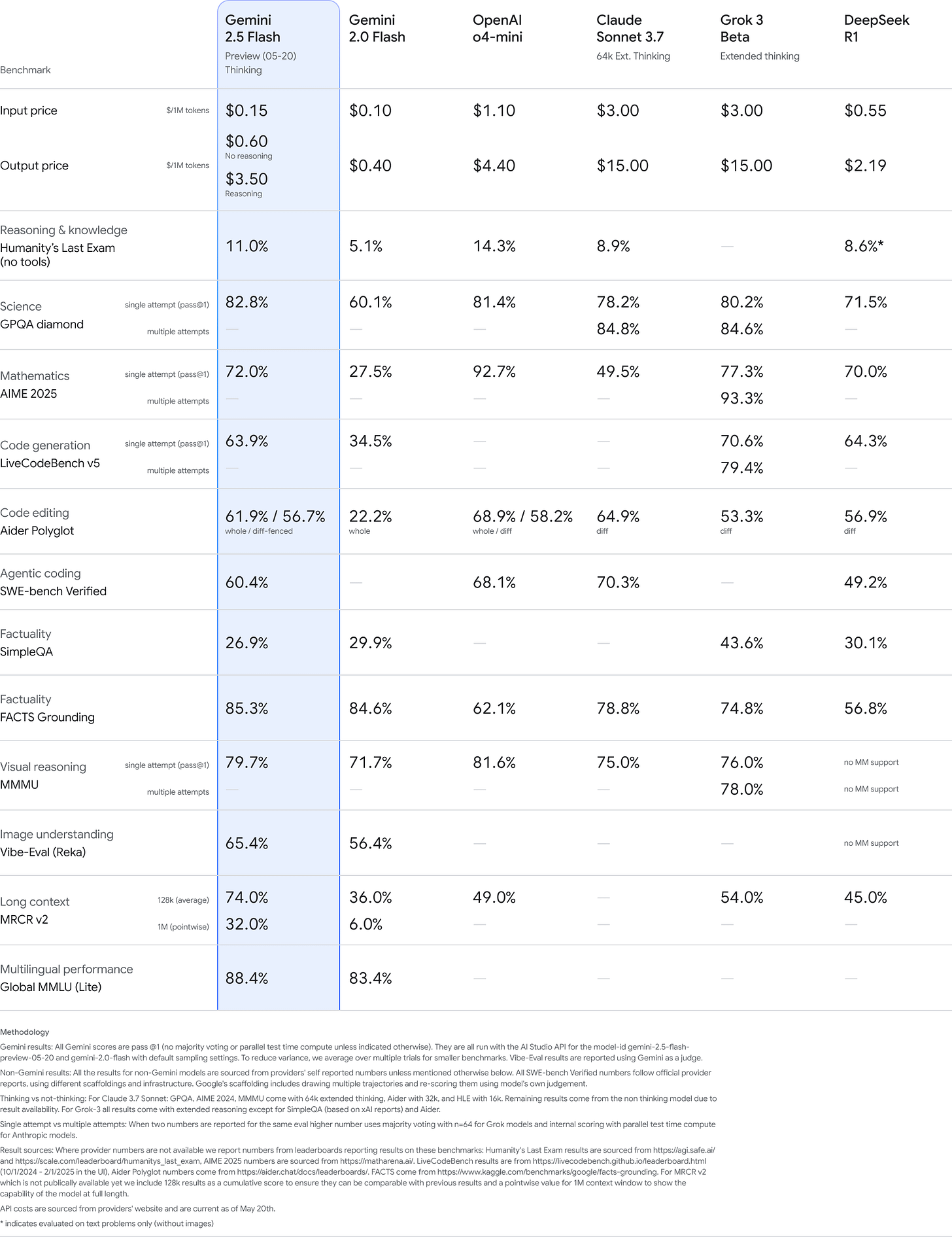

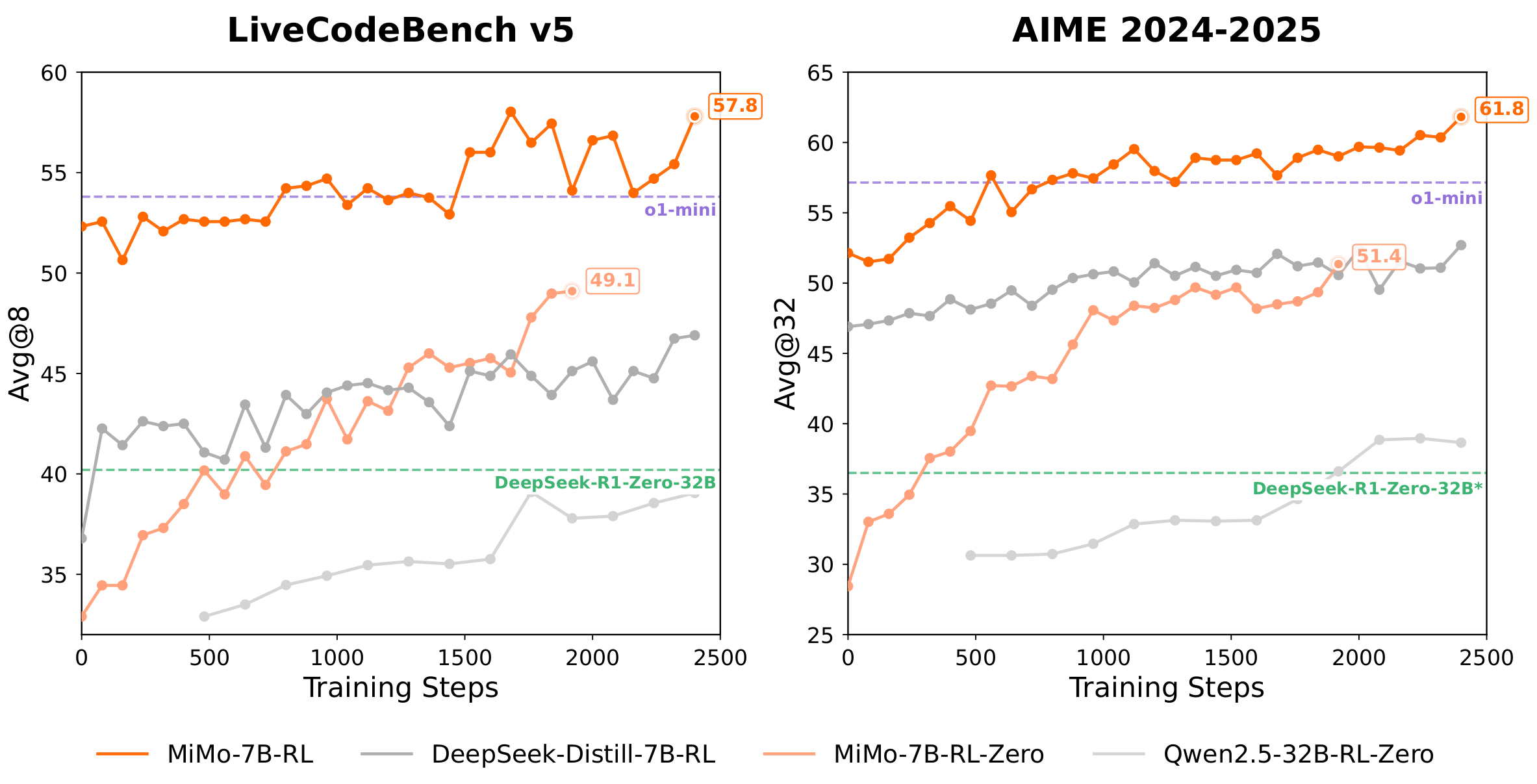

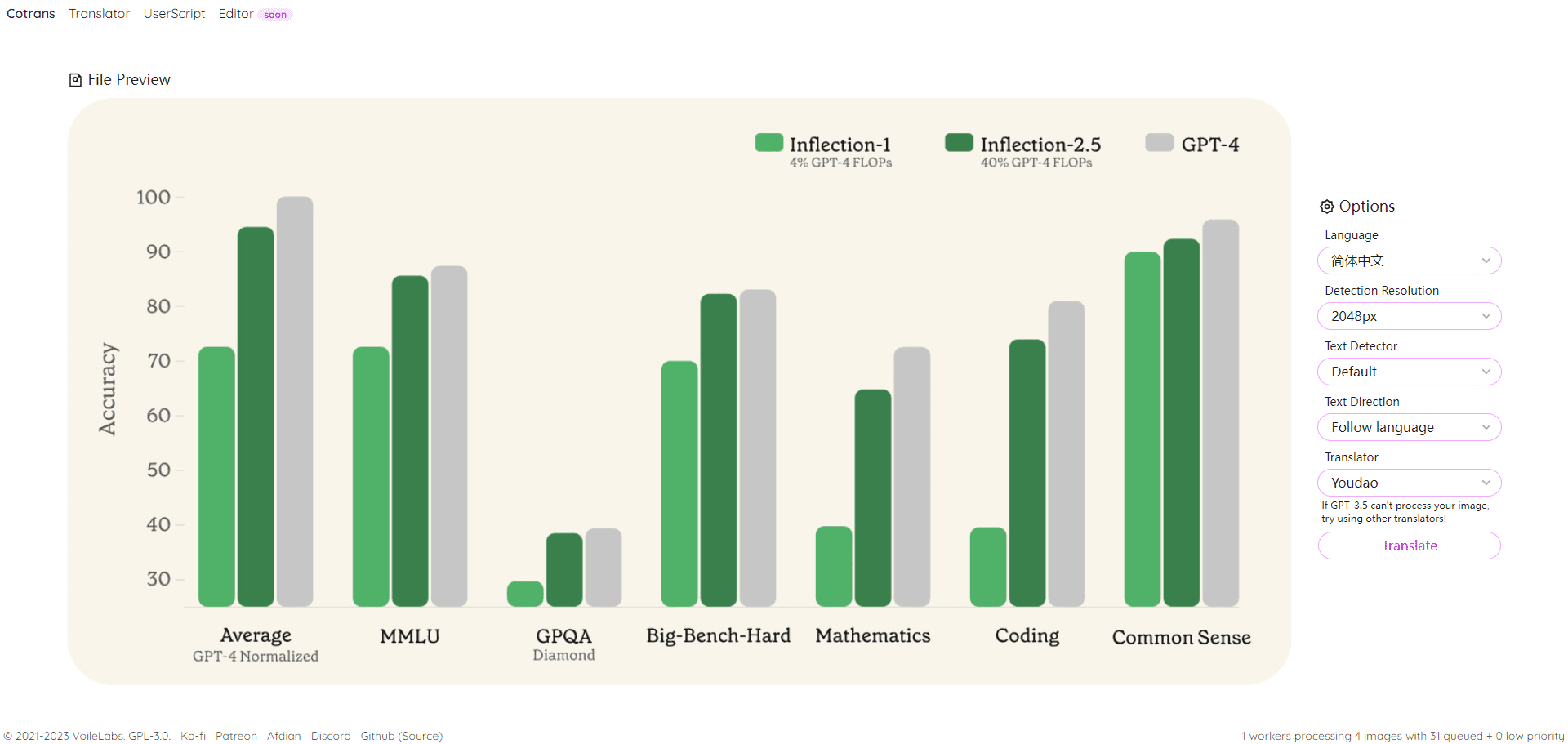

While this release did not focus on the models themselves, the Gemini line still received incremental updates. Gemini 2.5 Flash is now generally available and is considered one of the best fast, low-cost models on the market. Users such as Pliny have even shared ways to work around its restrictions.

Sundar Pichai said Gemini 2.5 Flash improves on reasoning, multimodality, code, and long context. Meanwhile, the Gemini 2.5 Pro Deep Think mode is open to trusted testers, and Demis Hassabis praised Gemini 2.5 Flash for its speed and low cost. According to the charts, Gemini 2.5 Pro Deep Think (light blue) outperforms the regular Gemini 2.5 Pro (dark blue) on a number of benchmarks, although the naming is somewhat confusing.

Gemini 2.5 Flash performs well on the Arena leaderboard, second only to Gemini 2.5 Pro, and some users even say the new Gemini 2.5 Flash outperforms the current Gemini 2.5 Pro in the Gemini app. The Live API will also support audio and visual input as well as native audio output, with control over tone of voice, accent, and style. Google has also released a white paper on Gemini security.

Gemma 3n: advances in on-device models

Gemma 3n, Google's on-device open model, achieves significant performance gains through an architecture optimized for mobile devices, supports multimodal input (video, audio, text, and images), and comes in multiple sizes such as 4B and 2B. Its inference is 1.5x faster than Gemma 3 4B. Using Google DeepMind's Per-Layer Embeddings (PLE) technique, Gemma 3n dramatically reduces its RAM footprint, allowing models with 5B and 8B parameters to run on mobile devices with memory overhead close to that of 2B and 4B models (just 2GB and 3GB of dynamic memory). In addition, Google introduced MedGemma for healthcare, SignGemma for sign language, and DolphinGemma for communicating with dolphins.

Gemini Diffusion: a new paradigm for text generation?

Gemini Diffusion, a text diffusion model, is understated but potentially significant. It reportedly generates up to 2,000 tokens per second and has shown good results on tasks such as OCR correction. Interestingly, some of the "jailbreak" prompts for Gemini 2.5 also seem to work on this diffusion model.

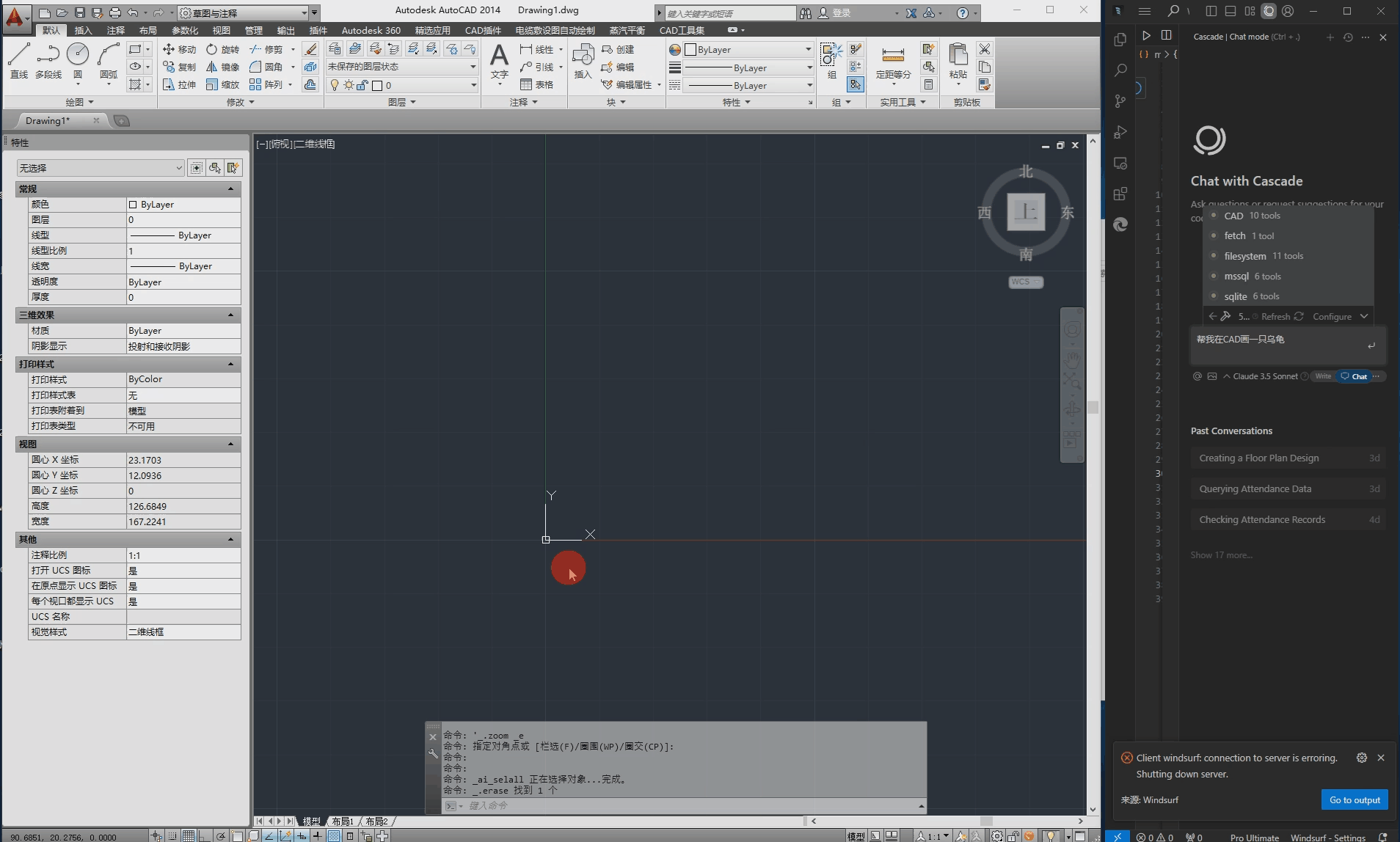

Jules: a free AI programming assistant

Google released Jules, its AI coding agent, which offers context awareness and code repository integration and is designed to help developers ship features. Its user interface looks quite polished in the demo video. Most strikingly, Jules is currently free, although it may suffer delays at first due to heavy demand. Its real-world performance and how it compares with competitors such as OpenAI Codex remain to be tested.

Deep Research and NotebookLM

Deep Research will soon let users connect Google Drive and Gmail, select specific sources, and integrate with Canvas, which is extremely valuable for research that relies heavily on personal context. NotebookLM has also launched a standalone app, which has been well received.

Google Search "AI mode": reinventing the search experience

Google Search's AI Overviews have long been criticized for occasional basic errors. With Gemini 2.5 now powering them, performance is expected to improve. The newly launched AI Mode is purportedly different from Overviews, but Google does not clearly explain how it differs from the Gemini app or from products such as Perplexity AI.

Sundar Pichai said AI Mode is rolling out to all users in the U.S. and represents a complete reimagining of search, supporting longer and more complex queries. AI Overviews currently serves 1.5 billion users per month in more than 200 countries and territories. AI Mode's core strengths are likely to be better integration with real-time information systems (especially in common scenarios such as shopping), the ability to run many Google searches quickly to build context, and the fact that it is free.

In the future, AI Mode plans to integrate with Project Mariner, or "Agent Mode", and to offer a "Deep Search" option, initially focusing on ticketing, restaurant reservations, and local bookings. Entering agentic features through specific, controlled scenarios may be the more prudent approach at this stage. If executed well, Google Search AI Mode could become the most useful entry point for many AI tasks. However, internal competition and overlapping responsibilities among different AI teams (AI Search, Gemini, Overviews) are potential concerns.

AI shopping: smarter consumer decisions

A key offshoot of Google Search AI Mode, the AI shopping feature (launching in the coming months) lets users search many e-commerce sites at once, returns visual results, and supports follow-up questions. It can also track prices and complete a purchase automatically once a target price is reached. A virtual try-on feature is also available in Search Labs.
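The price-tracking behavior described above boils down to a simple rule: watch offers across stores and act once the best price falls to the user's target. The sketch below is purely illustrative: `Offer`, `best_offer`, and `check_price_watch` are hypothetical names, not part of any Google API, and the actual purchase step is represented only by the returned offer.

```python
from dataclasses import dataclass
from typing import Optional


# Hypothetical offer record from one store; not a Google Shopping API type.
@dataclass
class Offer:
    store: str
    price: float  # in USD


def best_offer(offers: list[Offer]) -> Optional[Offer]:
    """Cheapest offer across all stores, or None if there are no offers."""
    return min(offers, key=lambda o: o.price, default=None)


def check_price_watch(offers: list[Offer], target: float) -> Optional[Offer]:
    """Return the offer to buy if the best price is at or below the target, else None."""
    best = best_offer(offers)
    if best is not None and best.price <= target:
        return best  # a real agent would hand this to a checkout step
    return None
```

In a real system this check would run periodically as new prices arrive; keeping the decision separate from the purchase makes it easy to insert a user confirmation before any money is spent.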

Agent Mode: The Quest for Autonomous Intelligence

Sundar Pichai announced that Agent Mode in the Gemini app, which will help users get more done on the web, will soon be available to subscribers. A multitasking version of Project Mariner is already available to Google AI Ultra subscribers in the U.S., and computer-use capabilities are coming to the Gemini API. One highlight of Agent Mode is "Teach and Repeat": after a user performs a task once, the AI learns it and handles similar tasks on the user's behalf. However, early reports suggest that Project Mariner is still immature and fails even at simple tasks.

Project Astra / Google Live: real-time visual interaction

Users can now use this feature for free on Android and iOS devices to share a live camera feed and talk to Gemini by voice, with Gemini able to run Google searches, play YouTube videos, and even make phone calls on their behalf. The exact definition of Project Astra is a little vague: it could stand for Gemini in live video mode, or it could refer specifically to Google Live. The official video demonstrates it acting intelligently on the user's behalf: searching YouTube, drawing on Gmail, and calling a store to ask about inventory. The technology is also being integrated into Search, where you can point the camera at an object and ask a question to get results.

Android XR glasses: a vision of the future

The Android XR glasses aim to enable deeper interaction by letting the device "see" what the user sees. Despite the futuristic demo, they are not expected until 2026 at the earliest, and the price is unknown. Judging from the demo, the current form looks like a product that is cool in theory but may fall short in practice, with the main use cases likely limited to Google Live and AI chat.

Gemini in Chrome: Leveraging Open Tab Contexts

Chrome has a new feature that lets Gemini not only analyze the current page but also answer questions using all open tabs as context. It is a useful feature, but one that will take some getting used to.

Google Meet Real-time Translation: Crossing the Language Barrier

The real-time voice translation feature in Google Meet, which purportedly matches the user's tone of voice and speed of speech for smooth cross-language conversations, has been rolled out to subscribers. While the demo was convincing, the actual results have yet to be tested. The feature was demonstrated alongside Google Beam, a 3D conferencing platform, but the two are not directly related.

Google Beam: Expensive 3D "Reality" Communication

Google Beam, which grew out of Project Starline, uses a new video model to turn 2D video streams into realistic 3D experiences, with millimeter-level head tracking and real-time rendering at 60fps. It requires specialized hardware (reportedly built around six cameras), supplied by HP, which may initially cost between $15,000 and $30,000 per unit. The need for, and practicality of, 3D videoconferencing that chases ultimate "realism" has been questioned: for most meetings, 2D is sufficient or even better. The potential market is more likely to be shared VR spaces, gaming, or specific movie-viewing experiences than everyday meetings.

The popularity and growth of AI

Sundar Pichai revealed that Google AI usage is growing rapidly. A year ago Google was processing 9.7 trillion tokens per month; today it processes 480 trillion, roughly a 50-fold increase.
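A quick check of the arithmetic behind the "50-fold" figure, plus the average month-over-month growth it implies. The monthly rate assumes the two data points are exactly twelve months apart and that growth was smooth, which the keynote did not state; it is only an illustration of how steep the curve is.

```python
# Monthly token volumes quoted in the keynote, in trillions of tokens.
tokens_year_ago = 9.7
tokens_now = 480.0

# Year-over-year multiple (the keynote rounds this to "50x").
growth = tokens_now / tokens_year_ago

# Assumption: exactly 12 months between the two figures, compounding evenly.
monthly_rate = growth ** (1 / 12) - 1

print(f"Year-over-year growth: {growth:.1f}x")
print(f"Implied average month-over-month growth: {monthly_rate:.1%}")
```

The exact multiple is about 49.5x, and sustaining it over a year corresponds to roughly 38% compound growth every month.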

The Gemini app has 400 million monthly users, and usage has grown 45% in the Gemini 2.5 era. Although it still trails ChatGPT's 1.5 billion monthly active users, its growth is strong.

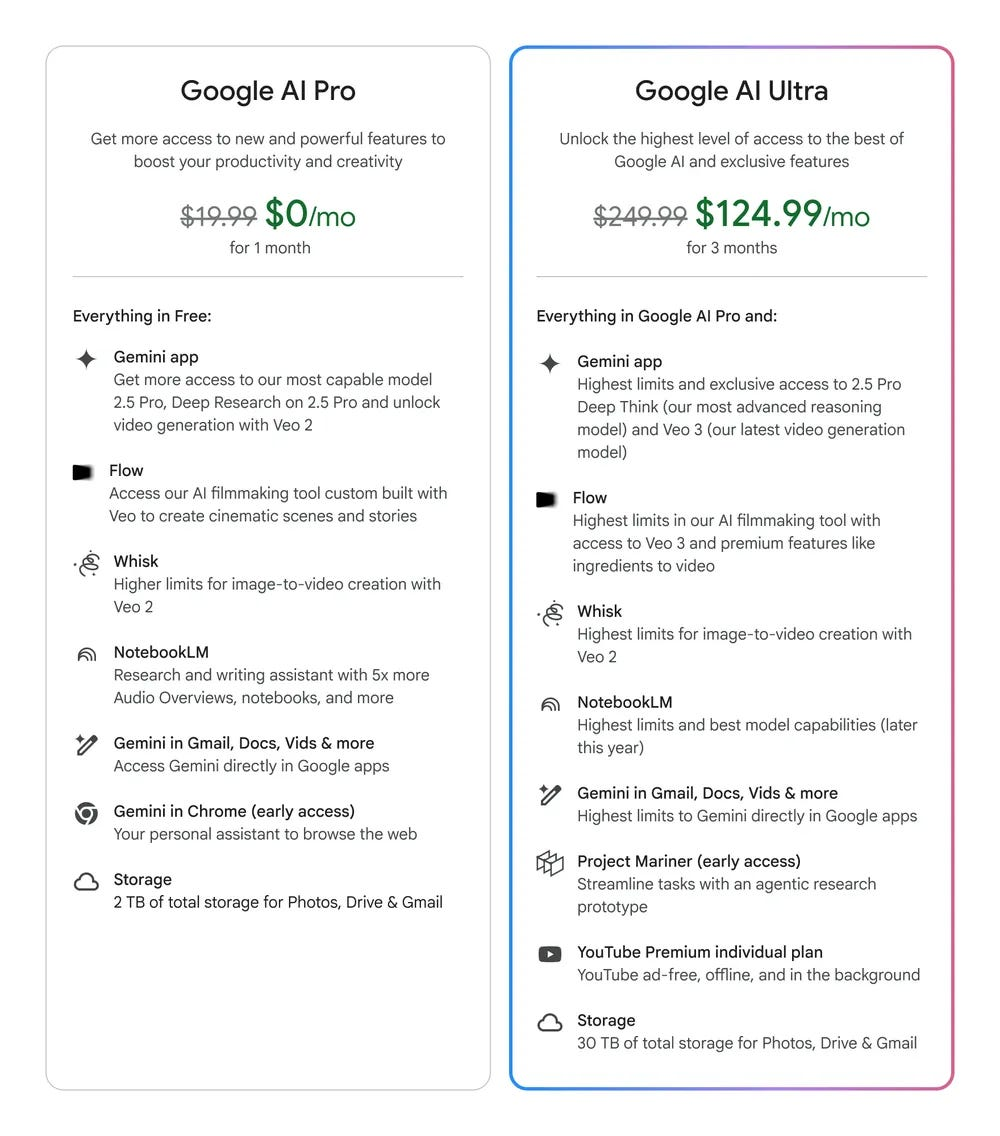

Pricing strategy: free and paid in parallel

Google will continue to run free and paid tiers of its AI services in parallel. The basic features of AI Search and the Gemini app are free, but premium features require payment. The Pro plan costs $20 per month and the Ultra plan $250 per month; the latter offers early access to new features (including Agent Mode) and higher rate limits.

Bundling services such as YouTube Premium into the subscription is considered a smart move, in line with the trend toward "Google Prime"-style meta-subscriptions. For most users, the Pro plan will suffice: the Ultra plan's extra value lies mainly in higher rate limits and early access, which won't mean much to the average user. But for those who can take full advantage of its advanced features, the value may far exceed the subscription cost. For example, if you focus on video generation, the Ultra plan includes 12,000 credits per month, and each 8-second Veo 3 video costs 150 credits. That is 80 videos, or 640 seconds of footage, for $250, about $0.39 per second. Buying credits directly is cheaper, at about $0.19 per second. However, the number of attempts needed to get a satisfactory result is a key factor in the real cost.
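The per-second figure, and the effect of retries on it, can be worked out with a few lines. The plan price, credit allowance, and per-clip cost come from the text above, and the $0.19 direct rate is the text's approximate figure. The "attempts per keeper" parameter is an assumption introduced for illustration (how many generations it takes to get one usable clip), and the sketch ignores unused credits and any rollover rules.

```python
# Figures from the article: Ultra costs $250/month and includes 12,000 credits;
# one 8-second Veo 3 clip costs 150 credits.
ULTRA_PRICE_USD = 250.0
ULTRA_CREDITS = 12_000
CREDITS_PER_CLIP = 150
SECONDS_PER_CLIP = 8
DIRECT_RATE_USD = 0.19  # approximate per-second cost when buying credits directly


def cost_per_usable_second(cost_per_generated_second: float, attempts_per_keeper: float) -> float:
    """Effective cost of one second of footage you keep, if only 1 in N generations is usable."""
    return cost_per_generated_second * attempts_per_keeper


clips_per_month = ULTRA_CREDITS // CREDITS_PER_CLIP      # 80 clips
seconds_per_month = clips_per_month * SECONDS_PER_CLIP   # 640 seconds of footage
ultra_rate = ULTRA_PRICE_USD / seconds_per_month         # about $0.39 per generated second

# Hypothetical retry counts, to show how quickly iteration dominates the cost.
for attempts in (1, 3, 5):
    print(
        f"{attempts} attempt(s) per usable clip: "
        f"Ultra ${cost_per_usable_second(ultra_rate, attempts):.2f}/s, "
        f"direct ${cost_per_usable_second(DIRECT_RATE_USD, attempts):.2f}/s"
    )
```

Because the cost scales linearly with the number of attempts, a workflow that needs five tries per keeper pays about five times the headline rate, which matters more than the gap between the two purchase options.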

Thinking Behind the Technology

Google I/O 2025 demonstrated Google's determination to push forward on every front of AI, and its underlying models performed well. However, the product lines look fragmented and the overall vision is not yet clear, even though the potential is huge. Some commentators have suggested that if Google DeepMind builds a unified, robust agent interface and optimizes its system prompts, its products could be seen as prototypes of artificial general intelligence (AGI).

Demis Hassabis confirmed that the vision is to make the Gemini app a general-purpose AI assistant, integrating Google Live's real-time vision and Project Mariner's parallel agent capabilities.

Analysts such as Ben Thompson believe that Google's core products are still search and cloud services. This view is not unreasonable, but Google's other AI products could succeed just as well after iteration and refinement. A key issue is that using AI effectively requires active thinking and exploration on the user's part, which is a barrier for users accustomed to passively receiving information. Devices such as Android phones and future XR glasses may be important vehicles for bringing these capabilities to users. Against this backdrop, there is still room for startups to build AI software products that solve specific problems.

Google has done a great job of improving search, but it remains to be seen whether it can turn its strong models into other equally great products. This is both a challenge and an opportunity.