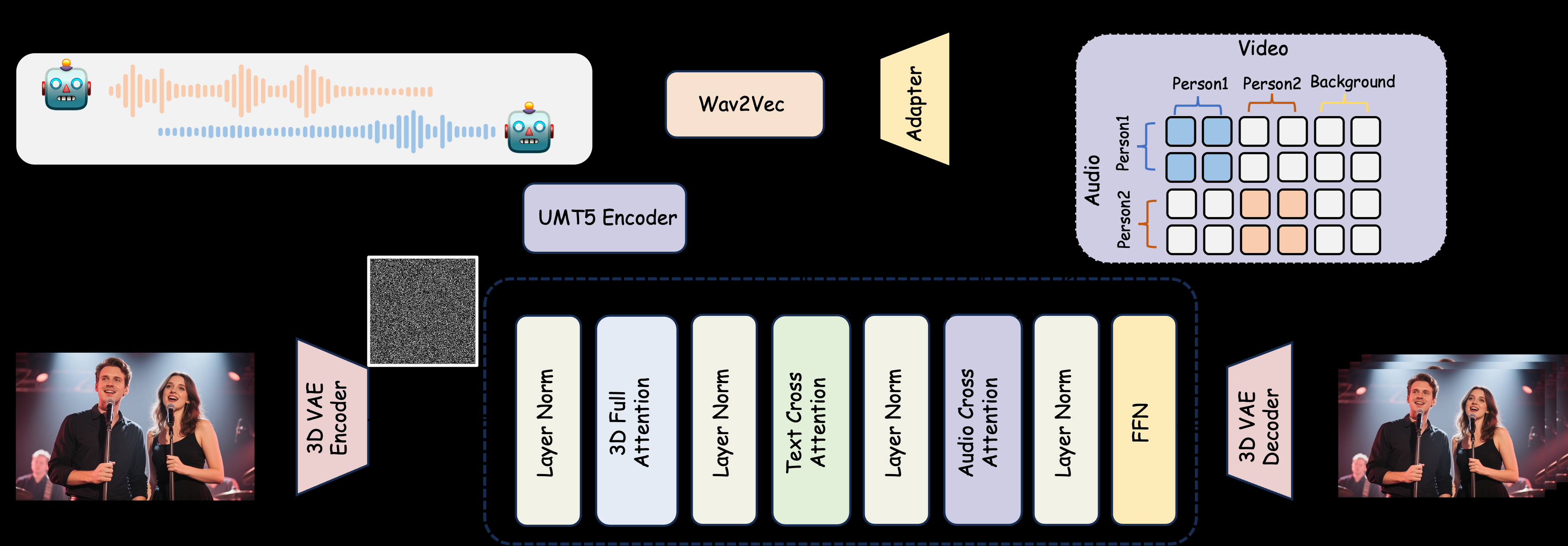

MultiTalk is an open-source, audio-driven tool for generating multi-person conversational videos, developed by MeiGen-AI. Given multiple audio tracks, reference images, and a text prompt, it generates lip-synchronized videos of several characters interacting. The project supports both real and cartoon characters and suits dialogue, singing, and interaction-control scenarios. MultiTalk uses L-RoPE (Label Rotary Position Embedding) to bind each audio track to the correct character, so that lip movements stay aligned with the audio. Model weights and detailed documentation are available on GitHub under the Apache 2.0 license, making the project suitable for academic researchers and developers.

Feature List

- Multi-person conversation video generation: Takes multiple audio inputs and generates videos of several characters interacting, with lip movements synchronized to the audio.

- Cartoon character generation: Generates dialogue or singing videos of cartoon characters, which widens the range of application scenarios.

- Interaction control: Controls character behavior and interaction through text prompts.

- Resolution flexibility: Supports 480p and 720p video output to suit different devices.

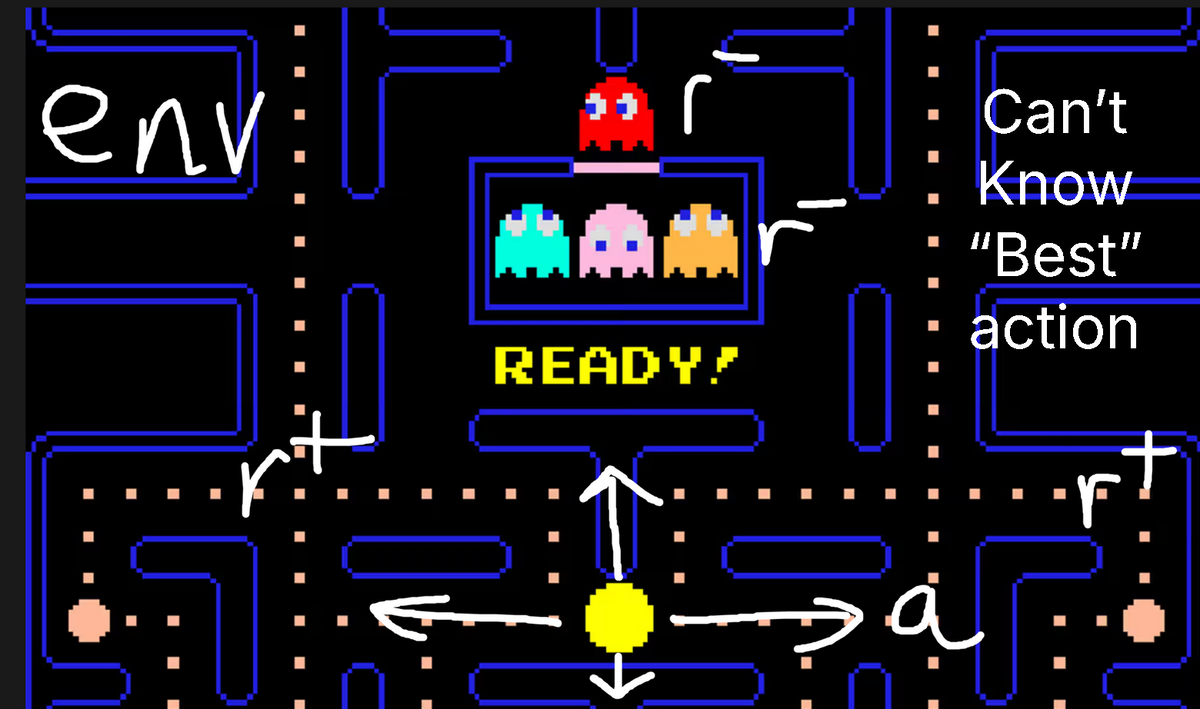

- L-RoPE: Solves the problem of binding multiple audio tracks to the right characters through Label Rotary Position Embedding, which improves generation accuracy.

- TeaCache acceleration: Speeds up video generation, which helps on devices with limited video memory.

- Open-source models: Model weights and code are provided for developers to download and customize freely.
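
The idea behind L-RoPE, giving an audio track and its character the same label so they match in attention, can be illustrated with a toy rotary embedding. This is only a sketch of the general rotary-embedding property, not MultiTalk's implementation: the label values (2 and 22), the four-dimensional feature, and the function names are all hypothetical.

```python
import math

def rotate(vec, label, base=10000.0):
    """Apply a rotary embedding to `vec`, using `label` as the position index."""
    dim = len(vec)
    out = []
    for i in range(dim // 2):
        # Each pair of dimensions is rotated by an angle proportional to the label.
        theta = label / (base ** (2 * i / dim))
        x, y = vec[2 * i], vec[2 * i + 1]
        out.append(x * math.cos(theta) - y * math.sin(theta))
        out.append(x * math.sin(theta) + y * math.cos(theta))
    return out

def dot(a, b):
    """Plain dot product, standing in for an attention score."""
    return sum(x * y for x, y in zip(a, b))

feature = [1.0, 0.0, 1.0, 0.0]           # toy feature shared by all tokens
video_person1 = rotate(feature, label=2)  # hypothetical label for character 1
audio_person1 = rotate(feature, label=2)  # audio track given the same label
audio_person2 = rotate(feature, label=22) # audio track with a different label

same_label_score = dot(video_person1, audio_person1)
diff_label_score = dot(video_person1, audio_person2)
print("same label:", same_label_score)
print("different label:", diff_label_score)
```

Because the rotation preserves dot products only when both sides are rotated by the same angle, the matching pair scores higher than the mismatched pair in this toy setting.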

Usage Guide

Installation process

To use MultiTalk, you need to configure the runtime environment locally. Below are detailed installation steps for Python developers or researchers:

- Create a virtual environment

Create a Python 3.10 environment with Conda to keep dependencies isolated:

    conda create -n multitalk python=3.10
    conda activate multitalk

- Install PyTorch and related dependencies

Install PyTorch 2.4.1 and its companion libraries with CUDA support:

    pip install torch==2.4.1 torchvision==0.19.1 torchaudio==2.4.1 --index-url https://download.pytorch.org/whl/cu121
    pip install -U xformers==0.0.28 --index-url https://download.pytorch.org/whl/cu121

- Install additional dependencies

Install the remaining libraries, such as Ninja and Librosa:

    pip install ninja psutil packaging flash_attn
    conda install -c conda-forge librosa
    pip install -r requirements.txt

- Download model weights

Download MultiTalk and the associated model weights from Hugging Face:

    huggingface-cli download Wan-AI/Wan2.1-I2V-14B-480P --local-dir ./weights/Wan2.1-I2V-14B-480P
    huggingface-cli download TencentGameMate/chinese-wav2vec2-base --local-dir ./weights/chinese-wav2vec2-base
    huggingface-cli download MeiGen-AI/MeiGen-MultiTalk --local-dir ./weights/MeiGen-MultiTalk

- Verify the environment

Make sure that all dependencies are installed correctly and that a GPU is available (a CUDA-compatible GPU is recommended).
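
The verification step can be scripted. The sketch below checks that the packages named in the installation steps can be found, that the three weight directories exist and are not empty, and whether PyTorch sees a CUDA device. The helper names are hypothetical, and the package list assumes each pip name matches its import name.

```python
import importlib.util
from pathlib import Path

# Names taken from the installation steps; import names are assumed to match.
REQUIRED_PACKAGES = ["torch", "torchvision", "torchaudio", "xformers",
                     "flash_attn", "librosa"]
WEIGHT_DIRS = ["Wan2.1-I2V-14B-480P", "chinese-wav2vec2-base", "MeiGen-MultiTalk"]

def missing_packages(names):
    """Return the names that cannot be located as importable modules."""
    return [name for name in names if importlib.util.find_spec(name) is None]

def missing_weight_dirs(weights_root, names=WEIGHT_DIRS):
    """Return weight directories that are absent or empty under weights_root."""
    root = Path(weights_root)
    missing = []
    for name in names:
        path = root / name
        if not path.is_dir() or not any(path.iterdir()):
            missing.append(name)
    return missing

def cuda_available():
    """Return True/False from PyTorch, or None if torch is not installed."""
    if importlib.util.find_spec("torch") is None:
        return None
    import torch
    return torch.cuda.is_available()

if __name__ == "__main__":
    print("Missing packages:", missing_packages(REQUIRED_PACKAGES))
    print("Missing weights:", missing_weight_dirs("./weights"))
    print("CUDA available:", cuda_available())
```

Empty lists and a True CUDA result suggest the environment is ready; the script does not validate package versions or weight file integrity.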

Usage

MultiTalk generates video through the command-line script generate_multitalk.py. Prepare the following inputs:

- Multi-track audio: WAV audio files, with each track corresponding to one character's voice.

- Reference image: A still image showing the characters' appearance, used to generate the characters in the video.

- Text prompt: Text that describes the scene or the character interaction, e.g., "Nick and Judy are having a conversation in a coffee shop."
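
The three inputs above are passed to the script through a JSON file (see --input_json in the commands that follow). The sketch below assembles such a file in Python. The key names ("prompt", "cond_image", "cond_audio", "person1", ...) and the file paths are assumptions for illustration; compare with the example JSON files shipped in the repository before relying on them.

```python
import json
from pathlib import Path

def build_input_spec(prompt, image_path, audio_paths):
    """Assemble one generation request as a plain dict.

    Key names are assumed, not confirmed by this article; check the
    repository's example JSON files for the actual schema.
    """
    if not audio_paths:
        raise ValueError("at least one audio track is required")
    for path in audio_paths:
        if Path(path).suffix.lower() != ".wav":
            raise ValueError(f"expected a WAV file, got: {path}")
    # One entry per character: person1, person2, ...
    cond_audio = {f"person{i}": str(p) for i, p in enumerate(audio_paths, start=1)}
    return {
        "prompt": prompt,
        "cond_image": str(image_path),
        "cond_audio": cond_audio,
    }

spec = build_input_spec(
    "Nick and Judy are having a conversation in a coffee shop.",
    "inputs/nick_judy.png",                   # hypothetical path
    ["inputs/nick.wav", "inputs/judy.wav"],   # hypothetical paths
)
print(json.dumps(spec, indent=2))
```

Writing the result with json.dump to a file gives something that can be passed to --input_json, provided the schema matches what the script expects.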

Generate short videos

Run the following command to generate a single short video:

    python generate_multitalk.py \
        --ckpt_dir weights/Wan2.1-I2V-14B-480P \
        --wav2vec_dir weights/chinese-wav2vec2-base \
        --input_json examples/single_example_1.json \
        --sample_steps 40 \
        --mode clip \
        --size multitalk-480 \
        --use_teacache \
        --save_file output_short_video

Generate long videos

For long videos, use streaming generation mode:

    python generate_multitalk.py \
        --ckpt_dir weights/Wan2.1-I2V-14B-480P \
        --wav2vec_dir weights/chinese-wav2vec2-base \
        --input_json examples/single_example_1.json \
        --sample_steps 40 \
        --mode streaming \
        --use_teacache \
        --save_file output_long_video

Low memory optimization

If video memory is insufficient, set --num_persistent_param_in_dit 0:

    python generate_multitalk.py \
        --ckpt_dir weights/Wan2.1-I2V-14B-480P \
        --wav2vec_dir weights/chinese-wav2vec2-base \
        --input_json examples/single_example_1.json \
        --sample_steps 40 \
        --mode streaming \
        --num_persistent_param_in_dit 0 \
        --use_teacache \
        --save_file output_lowvram_video

Parameter description

- --mode: clip generates short videos; streaming generates long videos.

- --size: Selects the output resolution, either multitalk-480 or multitalk-720.

- --use_teacache: Enables TeaCache acceleration to speed up generation.

- --teacache_thresh: Values from 0.2 to 0.5 balance speed against quality.
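
When running many generations, it can help to assemble the command from the parameters above in code. The following is a minimal sketch that uses only the flags shown in this article; the function name build_command and its keyword arguments are hypothetical, and it assumes --teacache_thresh takes a numeric value.

```python
import shlex

VALID_MODES = {"clip", "streaming"}
VALID_SIZES = {"multitalk-480", "multitalk-720"}

def build_command(input_json, save_file, mode="clip", size=None,
                  sample_steps=40, use_teacache=True,
                  teacache_thresh=None, low_vram=False):
    """Return the generate_multitalk.py invocation as an argument list."""
    if mode not in VALID_MODES:
        raise ValueError(f"mode must be one of {sorted(VALID_MODES)}")
    if size is not None and size not in VALID_SIZES:
        raise ValueError(f"size must be one of {sorted(VALID_SIZES)}")
    cmd = [
        "python", "generate_multitalk.py",
        "--ckpt_dir", "weights/Wan2.1-I2V-14B-480P",
        "--wav2vec_dir", "weights/chinese-wav2vec2-base",
        "--input_json", input_json,
        "--sample_steps", str(sample_steps),
        "--mode", mode,
    ]
    if size is not None:
        cmd += ["--size", size]
    if low_vram:
        # Low-memory setting described in this article.
        cmd += ["--num_persistent_param_in_dit", "0"]
    if use_teacache:
        cmd.append("--use_teacache")
        if teacache_thresh is not None:
            cmd += ["--teacache_thresh", str(teacache_thresh)]
    cmd += ["--save_file", save_file]
    return cmd

low_vram_cmd = build_command("examples/single_example_1.json",
                             "output_lowvram_video",
                             mode="streaming", low_vram=True)
print(shlex.join(low_vram_cmd))
```

The returned list can be passed directly to subprocess.run, which avoids shell quoting problems with paths that contain spaces.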

Featured Functions

- Multi-person dialogue generation

Prepare multiple audio tracks and the corresponding reference images. The audio should be clear, with a recommended sampling rate of 16 kHz, and the reference images should show the characters' faces or full bodies. The text prompt should clearly describe the scene and the characters' behavior, e.g., "Two people discussing work at an outdoor coffee table". During generation, MultiTalk uses L-RoPE to bind each audio track to the corresponding character and keep lip movements synchronized with the voice.

- Cartoon character support

Given reference images of cartoon characters (such as the Disney-style Nick and Judy), MultiTalk generates cartoon-style dialogue or singing videos. Example prompt: "Nick and Judy singing in a cozy room".

- Interaction control

Control the characters' actions with text prompts. For example, enter "woman drinking coffee, man looking at cell phone" and MultiTalk will generate the corresponding dynamic scene. Prompts should be concise but specific; avoid vague descriptions.

- Resolution selection

Use --size multitalk-720 to generate HD video suitable for high-quality displays. The lower 480p resolution is ideal for quick tests or low-performance hardware.
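
The 16 kHz WAV recommendation from the multi-person dialogue item can be checked before generation. The sketch below uses Python's standard wave module, which reads uncompressed PCM WAV files only; the function name inspect_wav and the returned keys are hypothetical.

```python
import wave

RECOMMENDED_RATE_HZ = 16000  # sampling rate recommended in this article

def inspect_wav(path):
    """Read basic properties of a PCM WAV file and compare its sampling rate."""
    with wave.open(str(path), "rb") as wav:
        rate = wav.getframerate()
        frames = wav.getnframes()
        return {
            "sample_rate": rate,
            "channels": wav.getnchannels(),
            "duration_s": frames / rate if rate else 0.0,
            "matches_recommended_rate": rate == RECOMMENDED_RATE_HZ,
        }

def check_tracks(paths):
    """Return the paths whose sampling rate differs from the recommendation."""
    return [p for p in paths if not inspect_wav(p)["matches_recommended_rate"]]
```

Tracks returned by check_tracks are candidates for resampling to 16 kHz with an audio tool of your choice before they are referenced in the input JSON.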

Caveats

- Hardware requirements: A CUDA-capable GPU with at least 12GB of video memory is recommended; devices with less video memory need the optimization parameters enabled.

- Audio quality: The audio should be free of noticeable noise to ensure good lip synchronization.

- License restrictions: The generated content is for academic use only; commercial use is prohibited.

Application Scenarios

- Academic research

Researchers can use MultiTalk to explore audio-driven video generation and to test how well approaches such as L-RoPE work in multi-character scenarios.

- Educational demonstrations

Teachers can generate cartoon character dialogue videos for classroom teaching or online courses to make lessons more engaging and interactive.

- Virtual content creation

Content creators can quickly generate multi-person conversation or singing videos for short-video platforms or virtual character presentations.

- Technology development

Building on MultiTalk's open-source code, developers can customize scenario-specific video generation tools for virtual meetings or digital human projects.

QA

- What audio formats does MultiTalk support?

WAV audio is supported, with a recommended sampling rate of 16 kHz for the best lip synchronization.

- How do I fix an audio-to-character binding error?

MultiTalk uses L-RoPE to resolve binding automatically by embedding the same label in the audio and the corresponding video region. Make sure that each input audio track corresponds to the right character in the reference image.

- How long does it take to generate a video?

It depends on the hardware and the video length. A short video (10 seconds) takes about 1-2 minutes on a high-performance GPU, and longer videos can take 5-10 minutes.

- Does it support real-time generation?

The current version does not support real-time generation and requires offline processing. Future versions may improve low-latency generation.

- How can I optimize performance on devices with limited video memory?

Use --num_persistent_param_in_dit 0 together with --use_teacache to lower the video memory footprint.