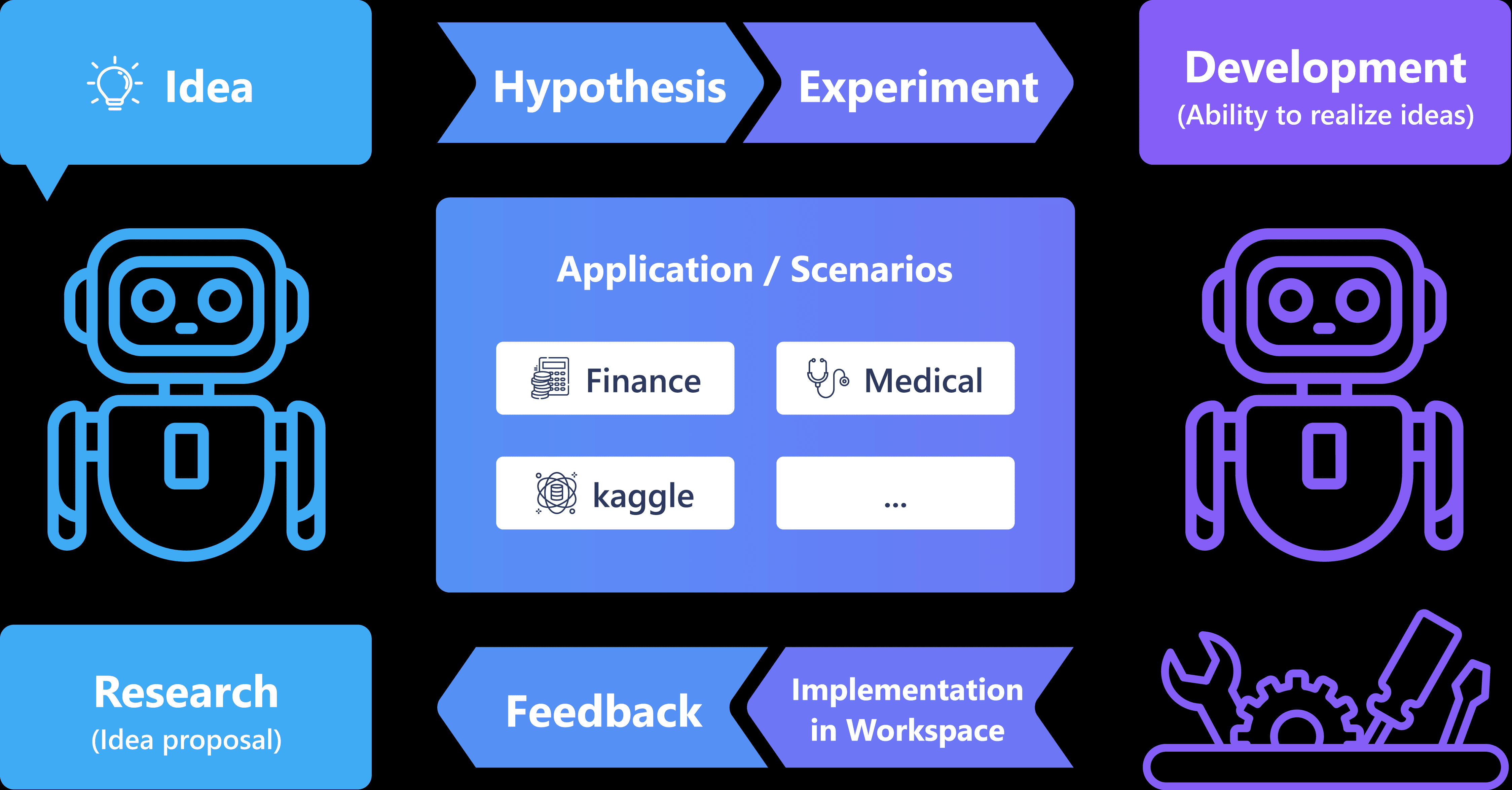

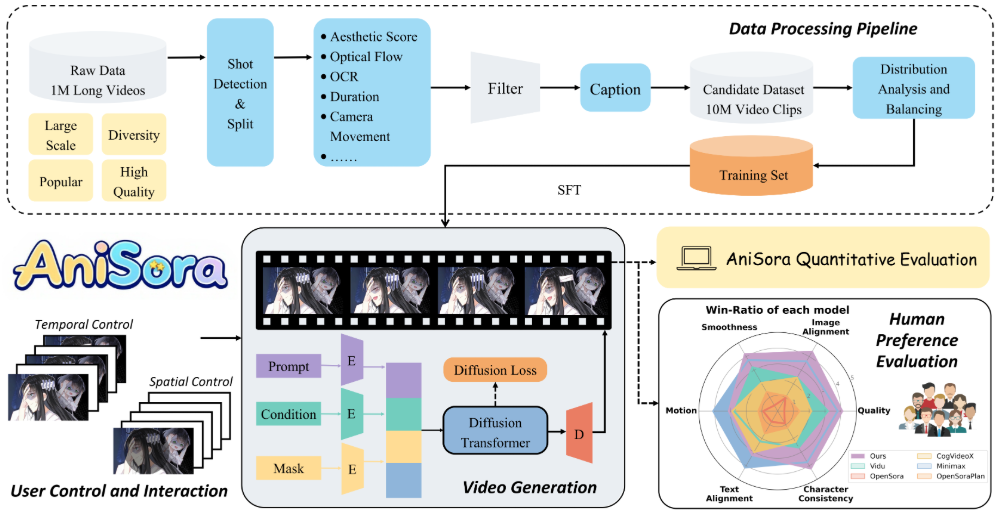

Index-AniSora is an anime video generation model developed and open-sourced by Bilibili and hosted on GitHub. It uses CogVideoX-5B and Wan2.1-14B as base models and can generate diverse anime-style videos, including anime episodes, Chinese original animation, manga adaptations, VTuber content, anime PVs, and parody videos. The project builds on research accepted at IJCAI'25, provides complete training and inference code, and supports Huawei Ascend 910B NPUs. Index-AniSora implements image-to-video generation, frame interpolation, and locally guided animation. These rely on a high-quality dataset at the tens-of-millions scale and a spatiotemporal mask module. Its evaluation dataset contains 948 animated video clips, each paired with a text prompt generated by Qwen-VL2 so that the text aligns precisely with the video content. The project is fully open source under the Apache 2.0 license, and community contributions are encouraged.

Feature List

- Supports generating diverse anime-style videos, covering anime episodes, manga adaptations, VTuber content, and more.

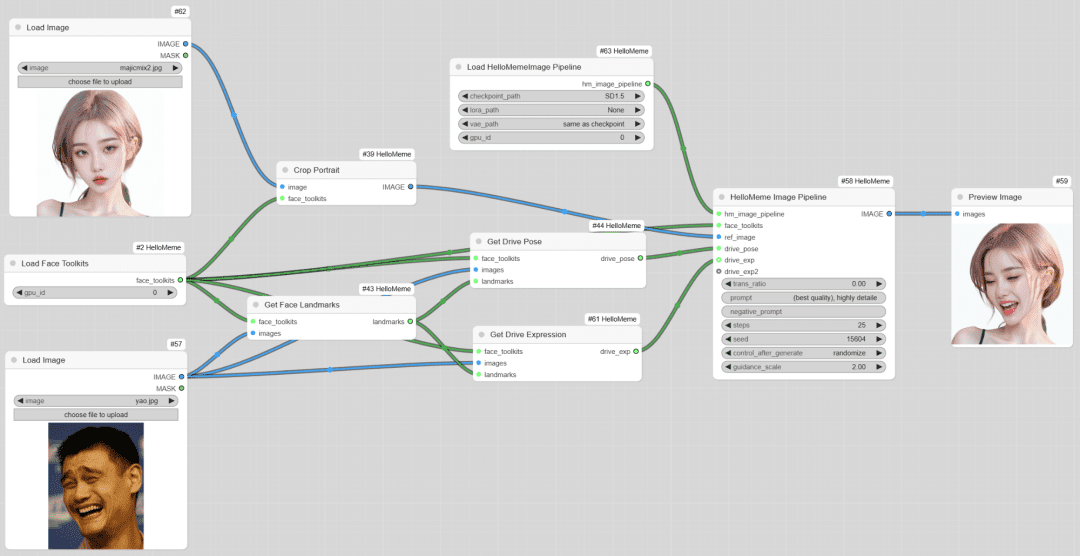

- Provides image-to-video generation, producing a dynamic video from a single image.

- Supports frame interpolation, generating keyframes, intermediate frames, and end frames to improve animation smoothness.

- Provides localized image-guided animation, animating only the regions the user specifies.

- Includes an evaluation dataset of 948 animated video clips paired with manually corrected text prompts.

- Supports Huawei Ascend 910B NPUs, with optimized training and inference performance.

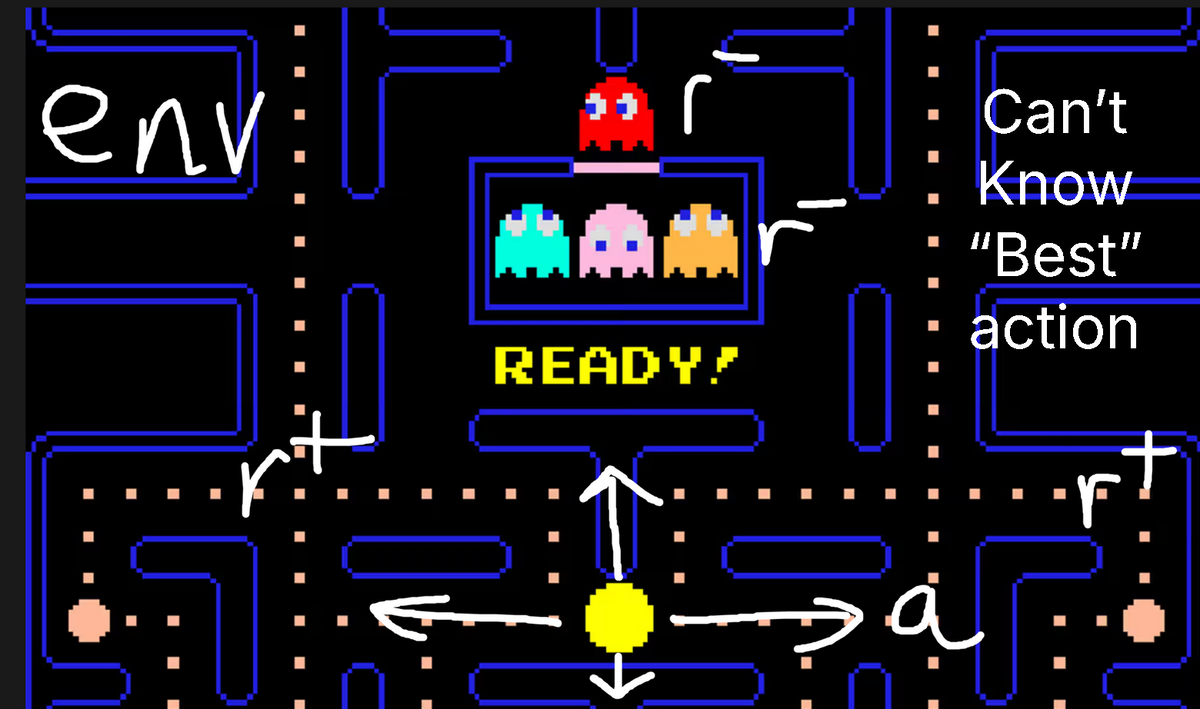

- Provides a reinforcement learning from human feedback (RLHF) framework to improve the quality of anime-style output.

- Publishes the full training and inference code under the anisoraV1_train_npu and anisoraV2_npu paths.

- Provides distillation-accelerated inference to reduce computational cost while maintaining generation quality.

Usage Guide

Installation Process

Index-AniSora is an open-source project hosted on GitHub. To use its features, clone the repository and configure the environment. The detailed installation and usage steps are as follows:

- Clone the repository

Run the following commands in a terminal to clone the Index-AniSora repository:

`git clone https://github.com/bilibili/Index-anisora.git`

`cd Index-anisora`

- Environment Configuration

The project requires a Python environment and specific deep learning frameworks. Python 3.8 or later is recommended. Install the dependencies:

`pip install -r requirements.txt`

Make sure PyTorch (with Ascend NPU or GPU support) and the dependencies for CogVideoX and Wan2.1 are installed. If you are using a Huawei Ascend 910B NPU, you also need to install the Ascend development toolkit (CANN). See the official documentation or the Huawei Ascend community for installation instructions.
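You can run a quick preflight check before installing the heavier dependencies. It confirms that the interpreter meets the recommended Python 3.8+ and reports which key packages are importable. This is a minimal sketch and is not part of the repository. The module names it checks are assumptions about a typical setup: `torch` for PyTorch and `torch_npu` for the Ascend PyTorch adapter. The real dependency list is whatever requirements.txt specifies.

```python
import importlib.util
import sys

# Minimum Python version recommended in the installation notes.
MIN_PYTHON = (3, 8)

# Assumed module names: "torch" for PyTorch and "torch_npu" for the
# Ascend PyTorch adapter. Adjust to match requirements.txt.
MODULES = ["torch", "torch_npu"]


def python_ok(version=sys.version_info[:2], minimum=MIN_PYTHON):
    """Return True if the given (major, minor) version meets the minimum."""
    return tuple(version) >= minimum


def missing_modules(names):
    """Return the module names that cannot be found on sys.path (without importing them)."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    print(f"Python {sys.version.split()[0]}: {'OK' if python_ok() else 'too old'}")
    missing = missing_modules(MODULES)
    print("Missing modules:", ", ".join(missing) if missing else "none")
```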

- Download model weights

The AniSoraV1 and AniSoraV2 model weights are available on application. Fill in the official application form (PDF) and send it to yangsiqian@bilibili.com or xubaohan@bilibili.com. After review, you will receive a download link. Once downloaded, place the weight files in the designated directory of the repository (e.g., anisoraV2_npu).

- Run the inference code

The complete inference code is located in the anisoraV1_train_npu or anisoraV2_npu directory. Run a sample inference command:

`python inference.py --model_path anisoraV2_npu --prompt "A girl in a kimono dancing under a cherry blossom tree" --output output_video.mp4`

Parameter description:

- --model_path: path to the model weights.
- --prompt: text prompt describing the desired animation scene.
- --output: path of the output video file.
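When you script several generations, it helps to build the inference command programmatically and check that the weights directory exists before launching. The sketch below uses only the `inference.py` flags documented here: `--model_path`, `--prompt`, `--output`, and the `--image_path` flag used for image-to-video. The helper names `build_inference_cmd` and `weights_present` are hypothetical, and the sketch does not launch the script itself.

```python
import shlex
import sys
from pathlib import Path


def build_inference_cmd(model_path, prompt, output, image_path=None):
    """Assemble an argument list for inference.py using the documented flags.

    Returning a list (rather than one string) avoids shell-quoting problems
    with prompts that contain spaces or quotes when passed to subprocess.run.
    """
    cmd = [sys.executable, "inference.py",
           "--model_path", str(model_path),
           "--prompt", prompt,
           "--output", str(output)]
    if image_path is not None:
        # Image-to-video mode: condition generation on a still image.
        cmd += ["--image_path", str(image_path)]
    return cmd


def weights_present(model_path):
    """Cheap sanity check that the weights directory exists and is non-empty."""
    path = Path(model_path)
    return path.is_dir() and any(path.iterdir())


if __name__ == "__main__":
    cmd = build_inference_cmd("anisoraV2_npu",
                              "A girl in a kimono dancing under a cherry blossom tree",
                              "output_video.mp4")
    print(shlex.join(cmd))  # printable, shell-safe form of the command
    if not weights_present("anisoraV2_npu"):
        print("Weights directory missing or empty; download the weights first.")
    # To launch: subprocess.run(cmd, check=True)
```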

- Train the model

For custom training, the project provides training code (anisoraV1_train_npu). After preparing the dataset, run the training script:

`python train.py --data_path your_dataset --output_dir trained_model`

The dataset must meet the project's requirements. Animated video clips with text prompts, similar to the evaluation dataset, are recommended. Training is supported on both Huawei Ascend NPUs and GPUs.
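The exact dataset layout that train.py expects is not described here, so the following only sketches one common convention. Each video clip sits next to a .txt file with the same stem that holds its text prompt, and the pairs are collected into a JSON Lines manifest. The file layout, the `video`/`prompt` field names, and the helper names are all hypothetical. Check the repository's training documentation for the real format.

```python
import json
from pathlib import Path

VIDEO_EXTS = {".mp4", ".mov", ".mkv"}  # assumed clip formats


def collect_pairs(data_dir):
    """Pair each video clip with the prompt in a same-named .txt file.

    Clips without a (non-empty) prompt file are skipped, so every sample
    has aligned text, as in the video/prompt pairs of the evaluation set.
    """
    pairs = []
    for video in sorted(Path(data_dir).iterdir()):
        if video.suffix.lower() not in VIDEO_EXTS:
            continue
        prompt_file = video.with_suffix(".txt")
        if prompt_file.is_file():
            prompt = prompt_file.read_text(encoding="utf-8").strip()
            if prompt:
                pairs.append({"video": video.name, "prompt": prompt})
    return pairs


def write_manifest(pairs, manifest_path):
    """Write one JSON object per line (JSON Lines)."""
    with open(manifest_path, "w", encoding="utf-8") as f:
        for pair in pairs:
            f.write(json.dumps(pair, ensure_ascii=False) + "\n")


if __name__ == "__main__":
    data_dir = Path("your_dataset")  # hypothetical dataset directory
    if data_dir.is_dir():
        pairs = collect_pairs(data_dir)
        write_manifest(pairs, data_dir / "manifest.jsonl")
        print(f"Wrote {len(pairs)} video/prompt pairs")
```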

Main Functions

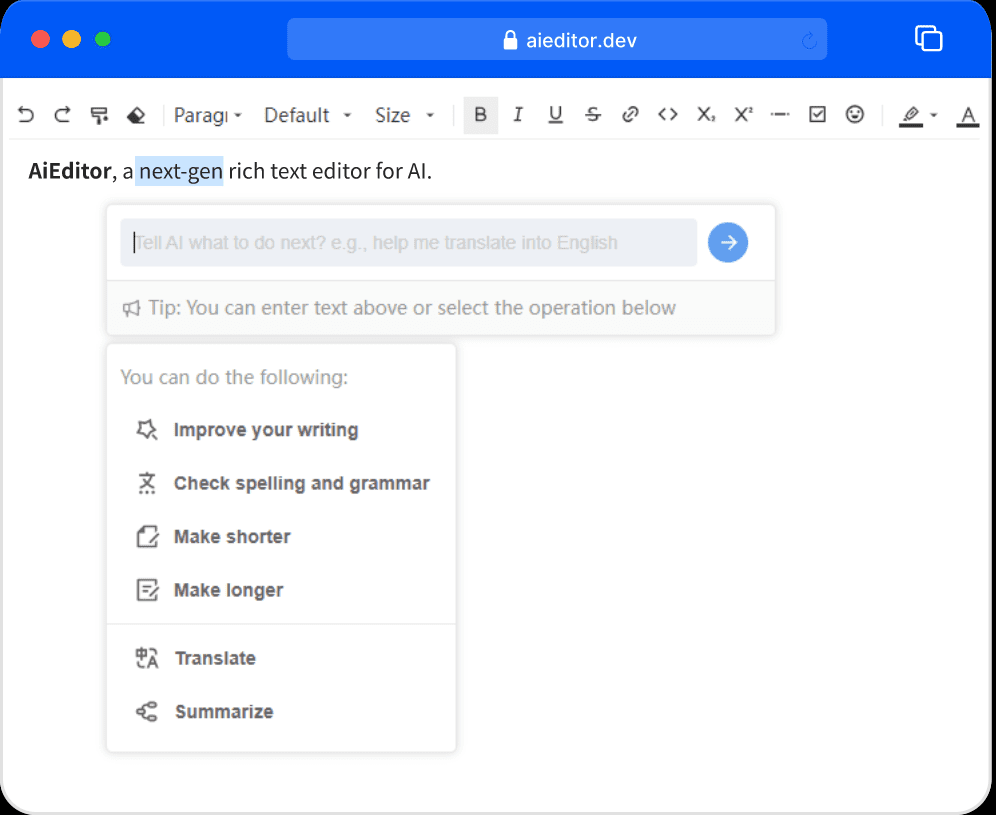

- Image to Video Generation

The user uploads a static image (e.g., an anime character drawing) and provides a text prompt to generate an animated video. For example, given an image of a ninja character and the prompt "ninja fighting in the forest", the model generates the corresponding animation. Steps:

- Prepare the image file (e.g., PNG or JPG).

- Write a detailed text prompt describing the action, scene, and style.

- Run the inference script, specifying the image path and prompt:

`python inference.py --image_path input_image.png --prompt "Ninja fighting in the forest" --output ninja_video.mp4`

- Check the generated video file and refine the prompt to improve the results.
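Results depend heavily on how detailed the prompt is. A small helper can keep prompts consistent by always covering the action, scene, and style named in the steps above. This is a hypothetical convenience function and is not part of Index-AniSora. The comma-separated template is an assumption, not a format the model requires.

```python
def build_prompt(action, scene, style=None, extras=()):
    """Compose a text prompt from action, scene and optional style details.

    Empty parts are dropped so the prompt never contains dangling commas.
    """
    parts = [f"{action} {scene}".strip()]
    if style:
        parts.append(style)
    parts.extend(e for e in extras if e)
    return ", ".join(p for p in parts if p)


if __name__ == "__main__":
    print(build_prompt("Ninja fighting", "in the forest", "anime style",
                       ["dynamic camera", "falling leaves"]))
```

Keeping the parts separate makes it easy to vary one element (for example the style) while holding the rest fixed when comparing outputs.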

- Frame interpolation

The frame interpolation feature generates intermediate frames to make the video smoother. Users provide keyframes (e.g., a start frame and an end frame), and the model automatically generates the transitional frames in between. Steps:

- Place the keyframe images in a designated directory.

- Run the frame interpolation script:

`python frame_interpolation.py --keyframe_dir keyframes --output interpolated_video.mp4`

- Check the output video to make sure the transitions between frames look natural.
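Conceptually, keyframe-conditioned interpolation can be described with a per-frame mask. The mask marks which frames the user supplies and which the model must generate, in the spirit of the spatiotemporal mask module mentioned in the overview. The sketch below only builds such a mask. The frame count, the keyframe placement, and the helper names are illustrative assumptions, not Index-AniSora's actual conditioning code.

```python
def keyframe_mask(num_frames, keyframe_positions):
    """Return a per-frame list: 1 = keyframe supplied by the user, 0 = frame to generate.

    Negative positions count from the end (-1 is the last frame).
    """
    mask = [0] * num_frames
    for pos in keyframe_positions:
        idx = pos + num_frames if pos < 0 else pos
        if not 0 <= idx < num_frames:
            raise ValueError(f"keyframe position {pos} out of range for {num_frames} frames")
        mask[idx] = 1
    return mask


def start_middle_end(num_frames):
    """Positions of the first, middle and last frames of a clip."""
    return [0, num_frames // 2, num_frames - 1]


def frames_to_generate(mask):
    """Indices the model would have to synthesize (mask value 0)."""
    return [i for i, m in enumerate(mask) if m == 0]
```

Take a 9-frame clip with start, middle, and end keyframes. `keyframe_mask(9, start_middle_end(9))` gives `[1, 0, 0, 0, 1, 0, 0, 0, 1]`, which leaves frames 1 to 3 and 5 to 7 to be generated.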

- Localized image-guided animation

Users can restrict animation to specific regions of the image, for example keeping the background static and animating only the character. Steps:

- Provide an image and a mask file (the mask marks the region to animate).

- Run the locally guided animation script:

`python local_guided_animation.py --image_path scene.png --mask_path mask.png --prompt "Character waving in front of the background" --output guided_video.mp4`

- Check the output to make sure the animated region matches expectations.
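The mask file tells the model which pixels may move. The following sketch writes a binary PGM mask using only the standard library. It assumes the common convention that white (255) marks the region to animate and black (0) the region to keep fixed, and that a rectangle around the character is precise enough. The convention, the file format, and the helper names are assumptions. Check which mask format local_guided_animation.py actually expects, and convert the file (for example to PNG) if needed.

```python
def rect_mask(width, height, box):
    """Build a height x width mask: 255 inside box=(left, top, right, bottom), else 0.

    right and bottom are exclusive, matching Python slicing.
    """
    left, top, right, bottom = box
    return [[255 if left <= x < right and top <= y < bottom else 0
             for x in range(width)]
            for y in range(height)]


def write_pgm(mask, path):
    """Write a mask as a binary (P5) PGM image with 8 bits per pixel."""
    height, width = len(mask), len(mask[0])
    with open(path, "wb") as f:
        f.write(f"P5\n{width} {height}\n255\n".encode("ascii"))
        f.write(bytes(v for row in mask for v in row))
```

For a 1280x720 scene where the character occupies the central columns, `write_pgm(rect_mask(1280, 720, (400, 100, 880, 720)), "mask.pgm")` marks only that box as animatable. The coordinates here are hypothetical.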

- Using the evaluation dataset

The project provides an evaluation dataset of 948 animated video clips for testing model performance. Access is requested through the same process as the model weights. Once you have it, run the evaluation script:

`python evaluate.py --dataset_path anisora_benchmark --model_path anisoraV2_npu`

The evaluation reports metrics such as action consistency and style consistency, which can guide model optimization.
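The output format of evaluate.py is not documented here. Suppose it can be reduced to one record per clip with a score for each metric. Under that assumption, the sketch below computes a per-metric summary across the 948 clips. The field names `action_consistency` and `style_consistency` are hypothetical.

```python
from statistics import mean


def summarize(records, metrics):
    """Average each metric over all clips that report it.

    records: iterable of dicts, one per clip, mapping metric name -> score.
    Returns {metric: (mean_score, clip_count)}; metrics with no scores are omitted.
    """
    records = list(records)  # allow generators; we iterate once per metric
    summary = {}
    for metric in metrics:
        scores = [r[metric] for r in records if r.get(metric) is not None]
        if scores:
            summary[metric] = (mean(scores), len(scores))
    return summary
```

Reporting the clip count next to each mean shows when a metric was computed on only part of the benchmark.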

Featured Functions

- Reinforcement learning from human feedback (RLHF)

AniSoraV1.0 introduces the first RLHF framework for anime video generation to improve generation quality. Users can fine-tune the model with the provided RL optimization script:

`python rlhf_optimize.py --model_path anisoraV1_npu --data_path rlhf_data --output optimized_model`

You need to prepare the RLHF data yourself. It should ideally include high-quality anime videos together with their ratings.
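The RLHF data format is left to the user. One common way to turn rated videos into training signal for a reward model is to build preference pairs. For each prompt, any two videos whose ratings differ by at least a margin become a (chosen, rejected) pair. The sketch below shows that conversion. The record layout, the margin, and whether rlhf_optimize.py consumes pairs at all are assumptions, so adapt it to the script's actual input format.

```python
from collections import defaultdict
from itertools import combinations


def preference_pairs(ratings, margin=1.0):
    """Convert (prompt, video, score) ratings into (prompt, chosen, rejected) pairs.

    Only videos generated for the same prompt are compared, and pairs whose
    scores differ by less than `margin` are dropped as too noisy to learn from.
    """
    by_prompt = defaultdict(list)
    for prompt, video, score in ratings:
        by_prompt[prompt].append((video, score))

    pairs = []
    for prompt, items in by_prompt.items():
        for (vid_a, score_a), (vid_b, score_b) in combinations(items, 2):
            if abs(score_a - score_b) < margin:
                continue
            chosen, rejected = (vid_a, vid_b) if score_a > score_b else (vid_b, vid_a)
            pairs.append((prompt, chosen, rejected))
    return pairs
```

Comparing only videos generated from the same prompt keeps the preference signal about quality rather than about prompt difficulty.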

- Huawei Ascend NPU support

The project is optimized for Huawei Ascend 910B NPUs, targeting users in China. After installing the Ascend development toolkit, specify the NPU device when running inference or training:

`python inference.py --device npu --model_path anisoraV2_npu`
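Scripts that must run on either Ascend NPUs or GPUs often choose the device at start-up. The sketch below prefers an NPU, then CUDA, then the CPU. It assumes the Ascend adapter is the `torch_npu` package, which registers a `torch.npu` namespace when imported. It is not stated here whether Index-AniSora's own `--device` flag accepts values other than `npu`, so treat the returned string as a suggestion.

```python
def pick_device(npu_available, cuda_available):
    """Choose a device string by preference: Ascend NPU, then CUDA GPU, then CPU."""
    if npu_available:
        return "npu"
    if cuda_available:
        return "cuda"
    return "cpu"


def detect_device():
    """Probe the local installation; missing packages simply count as unavailable."""
    npu = cuda = False
    try:
        import torch
    except ImportError:
        return pick_device(npu, cuda)  # no PyTorch at all: CPU only
    cuda = torch.cuda.is_available()
    try:
        # Importing torch_npu registers torch.npu. It can fail with errors other
        # than ImportError (e.g. when the CANN toolkit is missing), so catch broadly.
        import torch_npu  # noqa: F401
        npu = torch.npu.is_available()
    except Exception:
        npu = False
    return pick_device(npu, cuda)


if __name__ == "__main__":
    print("Suggested --device value:", detect_device())
```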

Application Scenarios

- Production of animated short films

Creators can use Index-AniSora to generate high-quality animation clips. For example, a character design combined with the description "Character running in a futuristic city" produces a short animated clip suitable for independent animation production.

- VTuber content creation

VTubers can use the model to generate animated videos of their avatars, such as a "virtual idol performing on stage", to quickly create livestream or short-video content.

- Comic adaptation preview

Comic artists can turn static comic panels into motion video to preview how an adaptation would look and check that the storyboard flows smoothly.

- Education and research

Researchers can use the evaluation dataset and training code to explore anime video generation techniques, optimize models, or develop new algorithms.

QA

- How do I get the model weights and datasets?

Fill out the application form (PDF) and send it to yangsiqian@bilibili.com or xubaohan@bilibili.com. You will receive a download link after review.

- Does it support non-Ascend hardware?

Yes, the project supports GPU environments, although performance is better on Ascend NPUs. Make sure to install a compatible version of PyTorch.

- What is the quality of the generated videos?

The model is built on CogVideoX and Wan2.1 and further optimized with RLHF. According to the project, the generated videos achieve strong motion and style consistency across a variety of anime scenarios.

- Is programming experience required?

Basic Python knowledge is needed to run the scripts. Non-developers can follow the documentation to generate videos with the pretrained models.