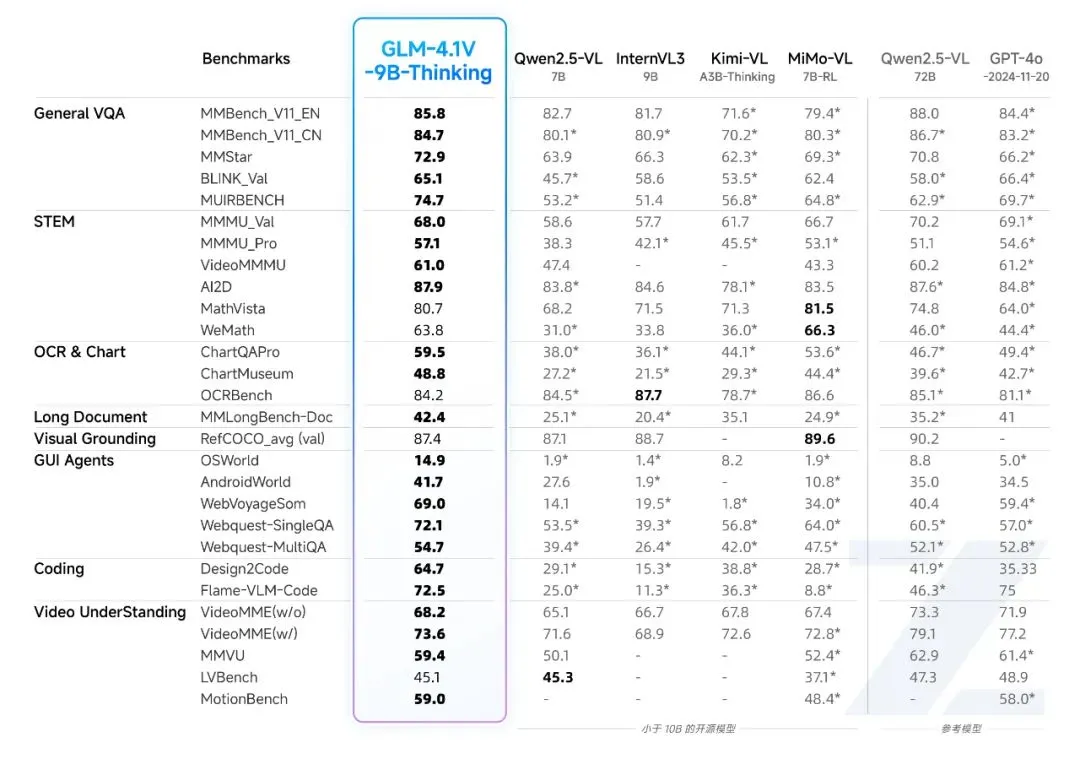

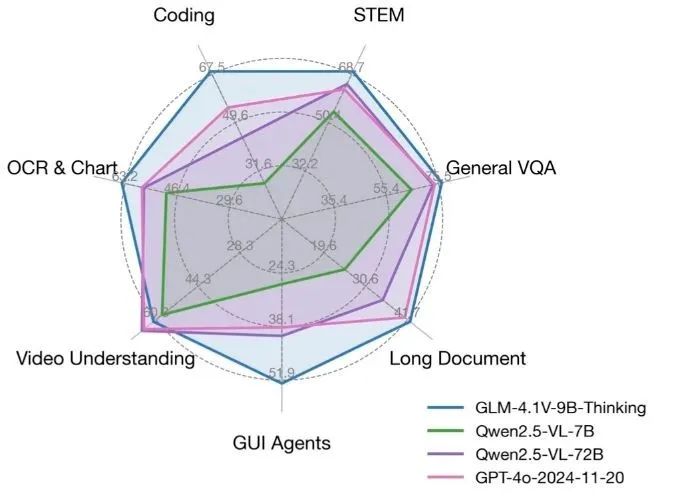

GLM-4.1V-Thinking is an open source visual language model developed by the KEG Laboratory at Tsinghua University (THUDM), focusing on multimodal reasoning capabilities. Based on the GLM-4-9B-0414 base model, GLM-4.1V-Thinking significantly improves the processing capability of complex tasks through reinforcement learning and "chain of thought" reasoning mechanism. It supports 64k ultra-long context, 4K high-resolution image processing, and is compatible with arbitrary image aspect ratios, as well as bilingual support for English and Chinese. The model excels in tasks such as math, code, long document comprehension, and video reasoning, and in some reviews even outperforms GPT-4o. The code and model are available on GitHub under the MIT license, allowing free commercial use for developers, researchers, and enterprises.

Function List

- Support for very long 64k contexts to handle long documents or complex conversations.

- Handles 4K high-resolution images and supports arbitrary aspect ratios.

- Provides bilingual support in English and Chinese, suitable for multi-language scenarios.

- Integrate "chain of thought" reasoning mechanisms to improve accuracy in math, code, and logic tasks.

- Supports video reasoning to analyze video content and answer related questions.

- Open source code and models, based on the MIT license, allow free commercial use.

- Hugging Face and ModelScope online demos are available for quick experience of modeling capabilities.

- Supports running on a single 3090 graphics card for resource-constrained development environments.

Using Help

Installation and Deployment

GLM-4.1V-Thinking provides complete code and model files with an easy deployment process for developers to run locally or on a server. The following are detailed installation and usage steps:

1. Environmental preparation

It needs to be run in a GPU-enabled environment; an NVIDIA graphics card (such as the RTX 3090) is recommended. Ensure that Python 3.8 or above is installed, as well as PyTorch. Here are the steps to install the dependencies:

pip install git+https://github.com/huggingface/transformers.git

pip install torch torchvision torchaudio

pip install -r requirements.txt

If model fine-tuning is required, refer to finetune/README.md file, using the LLaMA-Factory toolkit. When fine-tuning, it is recommended to use the Zero3 strategy to ensure training stability and to avoid the zero-loss problem that may result from Zero2.

2. Download model

The GLM-4.1V-Thinking model can be downloaded from the Hugging Face or GitHub repositories. Run the following code to load the model:

from transformers import AutoProcessor, Glm4vForConditionalGeneration

import torch

MODEL_PATH = "THUDM/GLM-4.1V-9B-Thinking"

processor = AutoProcessor.from_pretrained(MODEL_PATH, use_fast=True)

model = Glm4vForConditionalGeneration.from_pretrained(

pretrained_model_name_or_path=MODEL_PATH,

torch_dtype=torch.bfloat16,

device_map="auto"

)

Model Support bfloat16 format, lower memory footprint, and suitable for single GPU operation.

3. Single-image reasoning

GLM-4.1V-Thinking supports reasoning tasks with image input. Below is a simple example of an image description:

messages = [

{

"role": "user",

"content": [

{"type": "image", "url": "https://example.com/sample_image.png"},

{"type": "text", "text": "描述这张图片"}

]

}

]

inputs = processor.apply_chat_template(

messages, tokenize=True, add_generation_prompt=True, return_dict=True, return_tensors="pt"

).to(model.device)

generated_ids = model.generate(**inputs, max_new_tokens=8192)

output_text = processor.decode(generated_ids[0][inputs["input_ids"].shape[1]:], skip_special_tokens=False)

print(output_text)

commander-in-chief (military) sample_image.png Replace with the actual image URL or local path. The model analyzes the image and generates a detailed description.

4. Video reasoning

GLM-4.1V-Thinking supports video content analysis. Users can upload a video file via sample code in a GitHub repository or an online demo platform (e.g., Hugging Face), and the model will parse the video and answer relevant questions. For example, if you upload a video of a meeting and ask "what topics were discussed in the video", the model will extract the key information and generate an accurate answer.

5. Long document comprehension

The model supports 64k long contexts, making it suitable for processing long documents. Users can input text into the model and ask for specific content or summarize key points in the document. For example, enter a 50-page academic paper and ask "what are the main conclusions of the paper", the model will quickly extract and summarize.

6. Online presentation

No local deployment is required and can be experienced directly through an online demo provided by Hugging Face or ModelScope. Visit the links below:

- Hugging Face demo:

https://huggingface.co/THUDM/GLM-4.1V-9B-Thinking - ModelScope demo:

https://modelscope.cn/models/THUDM/GLM-4.1V-9B-Thinking

Users can upload images, videos or enter text to quickly test the model's reasoning.

7. Fine-tuning the model

Developers can use the LLaMA-Factory toolkit to fine-tune the model to fit specific tasks. The fine-tuning configuration file is located in the configs/lora.yaml, run the following command to start fine-tuning:

cd finetune

python finetune.py data/YourDataset/ THUDM/GLM-4-9B-0414 configs/lora.yaml

Ensure that the dataset is formatted correctly, JSON format is recommended. After fine-tuning, the model can be better adapted to domain-specific tasks, such as medical image analysis or legal document processing.

Featured Function Operation

- chain-of-minds reasoning: The model breaks down complex problems through a "chain of thought" mechanism. For example, in a math task, the model derives the answer step-by-step to ensure an accurate result. Users enter "Solve the quadratic equation x² + 2x - 3 = 0" and the model outputs detailed steps to solve the problem.

- multimodal support: Users can enter both images and text. For example, if you upload a circuit diagram and ask "How does the circuit work?", the model will combine the image and the question to generate a detailed explanation.

- Chinese-English bilingual: The model supports mixed Chinese and English input, which is suitable for cross-language scenarios. For example, input a question in Chinese and an image description in English, and the model will answer in the specified language.

caveat

- Ensure that the GPU has enough memory, at least 24GB of video memory is recommended.

- Enable YaRN configuration to optimize performance for long context processing with the configuration file

config.jsonhit the nail on the head"rope_scaling": {"type": "yarn", "factor": 4.0}The - Model inference is hardware-dependent for speed, and the 3090 graphics card enables real-time response.

application scenario

- academic research

Researchers can use GLM-4.1V-Thinking to analyze long academic papers and extract key conclusions or summary content. The model can also process experimental images and assist in analyzing data graphs. - Educational support

Students can upload pictures of math problems or science experiments, and the model will provide detailed steps to solve the problems or explanations of the experiments, making it suitable for self-study or teaching assistance. - content creation

Creators can input video or image footage to generate descriptive text or creative scripts. For example, enter a travel video to generate an attraction description. - enterprise application

Businesses can use the model for document automation, such as analyzing contract terms or generating reports. Bilingual support in English and Chinese is suitable for multinational organizations.

QA

- What input types are supported by GLM-4.1V-Thinking?

The model supports image, video, and text inputs, and is compatible with 4K images and 64k contexts for multimodal tasks. - Is high performance hardware required?

Runs on a single RTX 3090 graphics card, 24GB of video memory recommended for smooth reasoning. - How do I fine-tune my model?

Using the LLaMA-Factory toolkit, refer to the GitHub repository for thefinetune/README.mdfile, configure thelora.yamlFine-tuning. - Are the models free?

Yes, the model is open source based on the MIT license, which allows free commercial use. - How do I experience the model?

Quickly test model functionality by uploading images or text via Hugging Face or ModelScope's online demo.