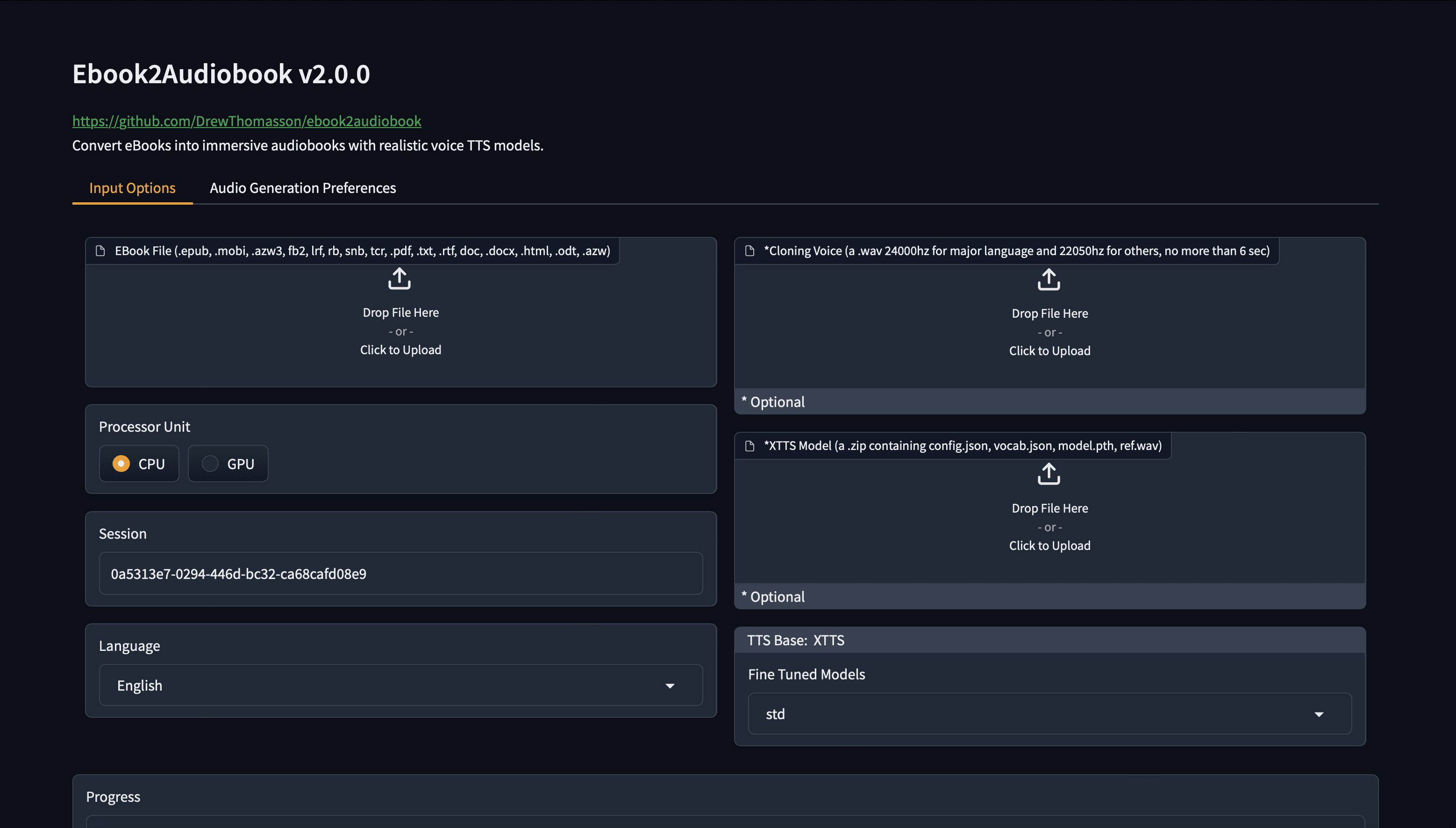

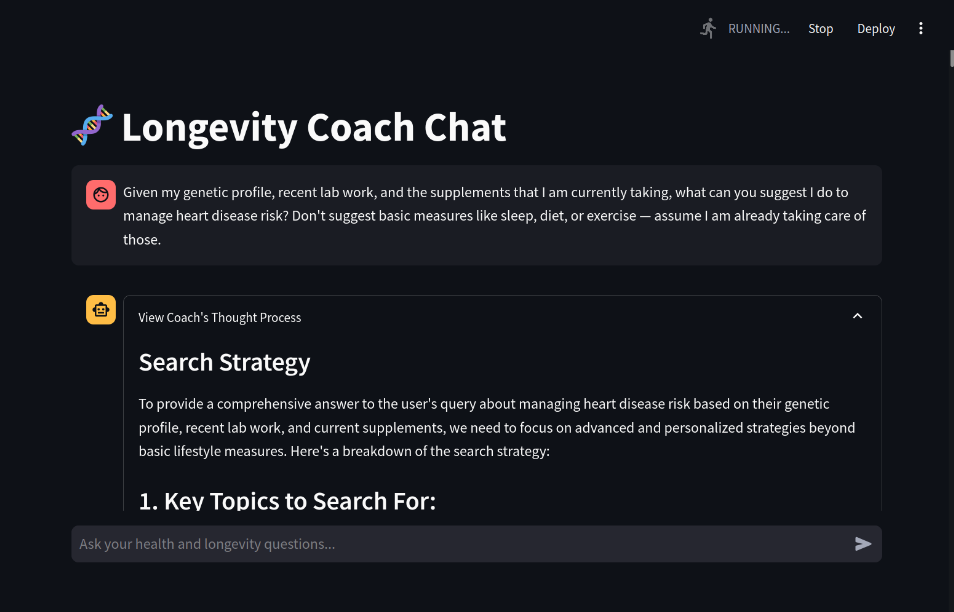

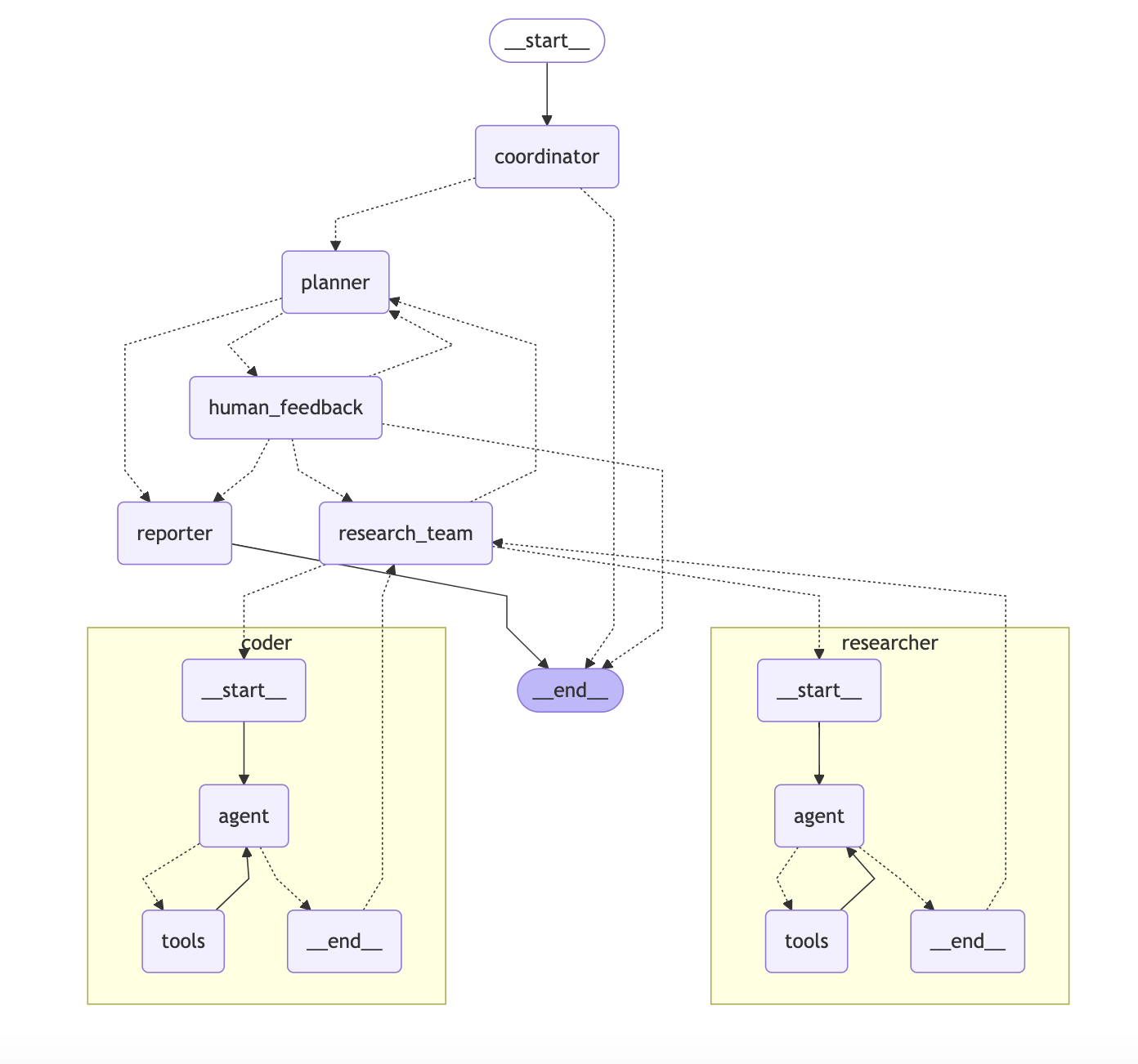

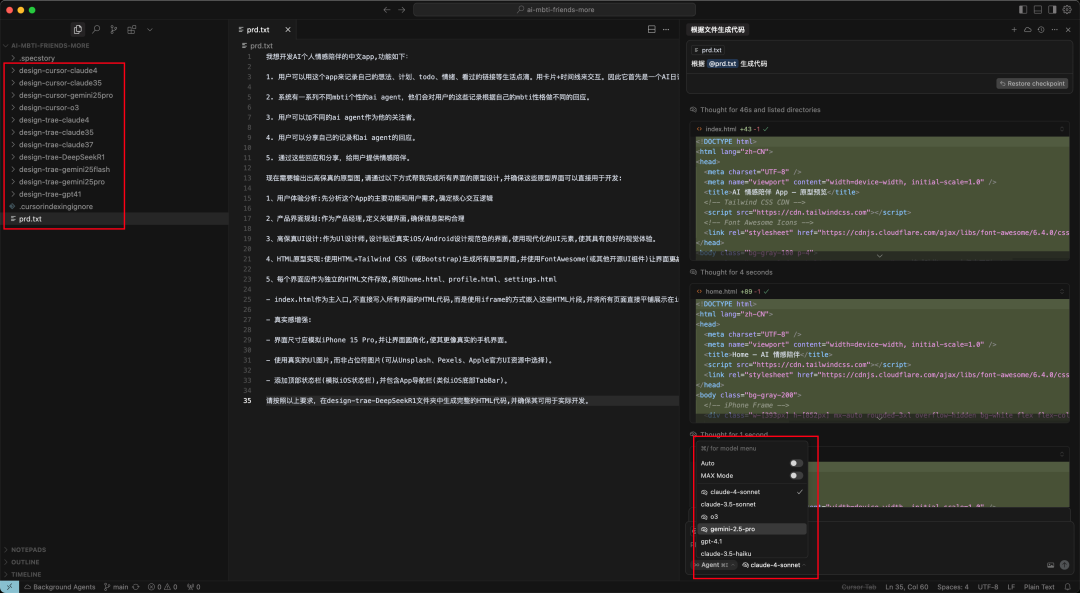

gemini-fullstack-langgraph-quickstart is an open-source example project from Google that shows how to use Google's Gemini models and the LangGraph framework to build a full-stack research application. The front end uses React to provide the user interface, and the back end uses LangGraph-powered agents to carry out complex research tasks. The project generates search queries from user input, retrieves web pages through the Google Search API, identifies information gaps and iteratively refines its queries, and finally produces detailed answers with citations. It is aimed at developers who want to learn how to combine large language models with open-source frameworks to build AI applications that support dynamic research and conversation. Because it makes the research process transparent, it suits scenarios that require in-depth research or fact verification.

Feature List

- Dynamic query generation: Based on user input, the Gemini model generates relevant search queries.

- Web Information Retrieval: Get real-time web data via the Google Search API.

- Reflection and Optimization: Agents analyze search results, identify information gaps, and iteratively optimize queries.

- Answer Synthesis and Citation: Synthesize the collected information to generate detailed answers with web citations.

- Full-stack architecture: a React front end provides the interactive interface, and a FastAPI back end handles the complex logic.

- Command-line research: run a single research task quickly from the command line.

- Real-time streaming output: Redis is used to stream the output of background tasks in real time.

- State persistence: The Postgres database stores agent state, threads, and long-term memory.
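Query generation, web retrieval, and reflection together form a loop. The following minimal, stdlib-only Python sketch shows that control flow. `generate_queries`, `web_search`, and `reflect` are hypothetical stubs that stand in for the Gemini and Google Search calls; they are not functions from the project. The final answer-synthesis step is omitted.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Source:
    title: str
    url: str


@dataclass
class ResearchState:
    question: str
    sources: List[Source] = field(default_factory=list)
    loops: int = 0


# Hypothetical stand-ins: in the real application these steps are backed by Gemini and Google Search.
def generate_queries(question: str, gap: Optional[str]) -> List[str]:
    # First round searches the question itself; later rounds target the reported gap.
    return [f"{question} {gap}"] if gap else [question]


def web_search(query: str) -> List[Source]:
    # Fake result: one source whose URL is derived from the query text.
    return [Source(title=query, url="https://example.com/" + query.lower().replace(" ", "-"))]


def reflect(state: ResearchState) -> Optional[str]:
    # Pretend the first round misses recent figures and the second round fills the gap.
    return "2025 data" if state.loops < 2 else None


def run_research(question: str, max_loops: int = 3) -> ResearchState:
    state = ResearchState(question=question)
    gap: Optional[str] = None
    while state.loops < max_loops:
        for query in generate_queries(question, gap):
            state.sources.extend(web_search(query))
        state.loops += 1
        gap = reflect(state)
        if gap is None:  # no information gap left, so stop iterating
            break
    return state
```

The `max_loops` bound, also a hypothetical parameter, stops the loop if reflection keeps reporting gaps.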

Usage Guide

Installation process

To use gemini-fullstack-langgraph-quickstart, complete the following installation steps so that the environment is configured correctly:

- Cloning the project repository

Run the following commands in a terminal to clone the project locally: `git clone https://github.com/google-gemini/gemini-fullstack-langgraph-quickstart.git`, then `cd gemini-fullstack-langgraph-quickstart`.

- Getting the API keys

- Gemini API key: visit Google AI Studio, register, and generate a Gemini API key.

- LangSmith API key (optional): visit the LangSmith website, register, and generate a key for debugging and observability.

- Configuring environment variables

Go into the `backend` directory, create a `.env` file, and add the API keys: `cd backend`, then `echo 'GEMINI_API_KEY="YOUR_ACTUAL_API_KEY"' > .env` and `echo 'LANGSMITH_API_KEY="YOUR_ACTUAL_LANGSMITH_API_KEY"' >> .env`.

Replace `YOUR_ACTUAL_API_KEY` with your actual Gemini API key, and `YOUR_ACTUAL_LANGSMITH_API_KEY` with your LangSmith key (if you use one).

- Installing back-end dependencies

In the `backend` directory, install the Python dependencies with `pip install .`. Make sure that Python is version 3.8 or later and that `pip` points to the matching Python installation.

- Installing front-end dependencies

Return to the project root and go into the `frontend` directory to install the Node.js dependencies: `cd ../frontend`, then `npm install`.

- Running the development servers

- Running the front end and back end together: from the project root directory, run `npm run dev` to start both development servers. By default, the front end runs at `http://localhost:5173/app` and the back-end API runs at `http://127.0.0.1:2024`.

- Running the back end alone: in the `backend` directory, run `langgraph dev`. This starts the back-end service and opens the LangGraph UI.

- Running the front end alone: in the `frontend` directory, run `npm run dev`.


- Deployment with Docker (optional)

To run the application with Docker, first install Docker and Docker Compose. Then build the image with `docker build -t gemini-fullstack-langgraph -f Dockerfile .` and start it with `GEMINI_API_KEY=<your_gemini_api_key> LANGSMITH_API_KEY=<your_langsmith_api_key> docker-compose up`. Once the application is running, open `http://localhost:8123/app` to view the interface.
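The local setup reads the Gemini key from `backend/.env`, so checking that file before starting the servers can prevent a confusing API key error later. The following is a minimal sketch of such a check. It assumes simple `KEY="value"` lines like those written by the `echo` commands in the setup steps, and it does not handle every `.env` syntax (for example, `export` prefixes or multi-line values). The function names are hypothetical, not part of the project.

```python
from pathlib import Path

REQUIRED_KEYS = ("GEMINI_API_KEY",)
# Placeholder values from the setup instructions that must be replaced.
PLACEHOLDERS = {"YOUR_ACTUAL_API_KEY", "YOUR_ACTUAL_LANGSMITH_API_KEY"}


def parse_env(text: str) -> dict:
    """Parse simple KEY=value or KEY="value" lines, skipping blanks and # comments."""
    values = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def missing_keys(values: dict) -> list:
    """Return required keys that are absent, empty, or still set to a placeholder."""
    return [k for k in REQUIRED_KEYS if not values.get(k) or values[k] in PLACEHOLDERS]


if __name__ == "__main__":
    env_path = Path("backend/.env")  # assumes the script is run from the project root
    if not env_path.exists():
        print(f"{env_path} not found; create it as described in the setup steps")
    else:
        problems = missing_keys(parse_env(env_path.read_text()))
        print("Missing or placeholder keys:", ", ".join(problems) if problems else "none")
```

Only `GEMINI_API_KEY` is treated as required here, matching the note that the LangSmith key is optional.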

Workflow

1. Research through the web interface

- Open the front end: open your browser and visit `http://localhost:5173/app`.

- Enter a query: type a research question into the input box, e.g. "Recent trends in renewable energy in 2025".

- View the research process: After submission, the interface displays each step of the agent's operation, including generating queries, searching web pages, analyzing results, etc.

- Get the answer: the final answer is displayed as text, with links to the cited web pages.
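One way to turn numbered citation markers in a generated answer into links is sketched below. This is an illustrative technique under stated assumptions, not the project's actual citation code. It assumes the answer text uses `[n]` markers and that sources arrive as a hypothetical mapping from citation number to `(title, url)`. Only sources that are actually cited are listed at the end, in order of first use.

```python
import re
from typing import Dict, List, Tuple


def render_citations(answer: str, sources: Dict[int, Tuple[str, str]]) -> str:
    """Replace [n] markers with Markdown links and append the cited sources."""
    cited: List[int] = []

    def link(match: re.Match) -> str:
        n = int(match.group(1))
        if n not in sources:
            return match.group(0)  # leave unknown markers untouched
        if n not in cited:
            cited.append(n)  # remember first-use order for the source list
        _, url = sources[n]
        return f"[[{n}]]({url})"

    body = re.sub(r"\[(\d+)\]", link, answer)
    if not cited:
        return body
    refs = [f"{n}. {sources[n][0]}: {sources[n][1]}" for n in cited]
    return body + "\n\nSources:\n" + "\n".join(refs)
```

Dropping uncited sources keeps the reference list limited to the pages the answer actually relies on.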

2. Quick research through the command line

- In the `backend` directory, run `python examples/cli_research.py "Your research question"`.

- The agent prints the research results and citations directly in the terminal, which is convenient for quick validation.
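The project ships its own `examples/cli_research.py`. The sketch below is not that script. It is a hypothetical illustration of how a one-shot research command can be structured with `argparse`. The `research` function and the `--max-loops` option are placeholders invented for this example.

```python
import argparse
from typing import List, Optional


def research(question: str, max_loops: int) -> str:
    # Hypothetical placeholder for running the research agent and collecting its answer.
    return f"Answer to {question!r} after at most {max_loops} research loop(s)"


def build_parser() -> argparse.ArgumentParser:
    parser = argparse.ArgumentParser(description="Run a single research task from the command line.")
    parser.add_argument("question", help="Research question to investigate")
    # Hypothetical option: an upper bound on reflection loops.
    parser.add_argument("--max-loops", type=int, default=2, help="Maximum number of research loops")
    return parser


def main(argv: Optional[List[str]] = None) -> int:
    # argv=None makes argparse read sys.argv; passing a list is handy for testing.
    args = build_parser().parse_args(argv)
    print(research(args.question, args.max_loops))
    return 0
```

In a real script, `main()` would be called from an `if __name__ == "__main__":` guard. The `argv` parameter lets the function be exercised directly, e.g. `main(["What is new in solar?"])`.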

3. Real-time streaming output

- The project uses Redis as a publish-subscribe broker to deliver the output of background tasks in real time. Install Redis locally and make sure it is running.

- Configuring Redis: add the Redis connection information to the `.env` file (the default is `redis://localhost:6379`).

- The streaming output shows research progress in real time in the web interface or the terminal.
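Publish/subscribe decouples the background task that produces progress events from the clients that display them. The sketch below imitates that pattern in-process with `queue.Queue` so that it runs without a Redis server. It is a stand-in for illustration, not how the project talks to Redis. The channel names, step names, and class are hypothetical.

```python
import queue
import threading
from collections import defaultdict


class InProcessBroker:
    """Tiny publish/subscribe broker: every subscriber to a channel gets its own queue."""

    def __init__(self):
        self._subscribers = defaultdict(list)
        self._lock = threading.Lock()

    def subscribe(self, channel):
        q = queue.Queue()
        with self._lock:
            self._subscribers[channel].append(q)
        return q

    def publish(self, channel, message):
        with self._lock:
            targets = list(self._subscribers[channel])
        for q in targets:
            q.put(message)
        return len(targets)  # like Redis PUBLISH, report how many subscribers received it


DONE = object()  # sentinel marking the end of a run


def background_research(broker, run_id):
    # Hypothetical agent steps, published as progress events.
    for step in ("generate_queries", "web_search", "reflect", "finalize_answer"):
        broker.publish(f"run:{run_id}", {"step": step})
    broker.publish(f"run:{run_id}", DONE)


def stream(q):
    """Yield events until the sentinel arrives, as a streaming endpoint would."""
    while True:
        event = q.get(timeout=5)
        if event is DONE:
            return
        yield event
```

Subscribe before the task starts. As with Redis pub/sub, a message published to a channel with no subscribers is simply dropped.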

4. Data persistence

- The project uses Postgres to store agent state and thread information. Install Postgres locally and configure the connection: `echo 'DATABASE_URL="postgresql://user:password@localhost:5432/dbname"' >> .env`.

- The database records the context of each research session for long-term memory and state management.
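Persisting agent state per thread can be sketched with a small checkpoint table. To keep the example runnable without a database server, it uses Python's built-in `sqlite3` in place of Postgres. The table layout and function names are hypothetical and do not reflect the project's actual schema.

```python
import json
import sqlite3
from typing import Optional


def connect(path: str = ":memory:") -> sqlite3.Connection:
    conn = sqlite3.connect(path)
    # One row per (thread, step): the latest step holds the current state of a thread.
    conn.execute(
        """CREATE TABLE IF NOT EXISTS checkpoints (
               thread_id TEXT NOT NULL,
               step INTEGER NOT NULL,
               state TEXT NOT NULL,
               PRIMARY KEY (thread_id, step)
           )"""
    )
    return conn


def save_state(conn: sqlite3.Connection, thread_id: str, step: int, state: dict) -> None:
    # Store the state dict as JSON; re-saving the same (thread, step) overwrites it.
    with conn:  # commits the transaction on success
        conn.execute(
            "INSERT OR REPLACE INTO checkpoints (thread_id, step, state) VALUES (?, ?, ?)",
            (thread_id, step, json.dumps(state)),
        )


def load_latest(conn: sqlite3.Connection, thread_id: str) -> Optional[dict]:
    row = conn.execute(
        "SELECT state FROM checkpoints WHERE thread_id = ? ORDER BY step DESC LIMIT 1",
        (thread_id,),
    ).fetchone()
    return json.loads(row[0]) if row else None
```

A Postgres version would differ in two ways. It would use `%s` placeholders through a driver such as psycopg, and `INSERT ... ON CONFLICT` in place of SQLite's `INSERT OR REPLACE`.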

Caveats

- Make sure the internet connection is stable, as the agent needs to get real-time data through the Google Search API.

- If you encounter an API key error, check that the `.env` file is configured correctly.

- The project requires substantial computing resources, so running it on a reasonably powerful machine is recommended.

Application scenarios

- Academic research

Researchers can use the app to quickly gather the latest information in a particular field. For example, after you enter "recent advances in quantum computing", the agent generates relevant queries, searches for relevant resources, and organizes an answer with links to reliable sources.

- Business intelligence analysis

Business analysts can use the tool to study market trends or competitor activity. For example, entering "Electric Vehicle Market Trends 2025" produces a detailed report together with its data sources.

- Educational aids

Students and teachers can use the tool to quickly verify information or prepare course materials. For example, entering "impacts of climate change" yields a synthesized answer with citations that is suitable for classroom discussion.

- Technology development reference

Developers can learn how to combine the Gemini model and the LangGraph framework to build similar research agents and explore AI-driven application development.

FAQ

- What API keys are needed to run the project?

A Gemini API key is required and can be obtained from Google AI Studio. The LangSmith API key is optional and is used for debugging and observability.

- How can I view the agent's research process?

In the web interface, after you submit a query, the interface displays every step of the agent's operation in real time, including query generation, web search, and reflection.

- What programming languages does the project use?

The back end uses Python (LangGraph and FastAPI) and the front end uses JavaScript (React); Docker is used for deployment.

- Does it support offline operation?

Full offline operation is not supported, as the agent needs to get live web data through the Google Search API.