Claude Proxy is an open-source proxy tool hosted on GitHub that helps developers convert Anthropic Claude API requests to the OpenAI API format. With simple configuration, users can work with Claude models through clients and front-end tools that support the OpenAI API. The tool suits developers who need to call large language models across platforms, and it offers flexible model mapping and streaming response support. The project is maintained by tingxifa and is implemented as Bash scripts, which are easy to configure and quick to deploy, including to production environments. Users only need to set an API key and a proxy address to call the Claude model from the command line, which keeps operation simple and efficient.

Feature List

- Converts Claude API requests to the OpenAI API format, making them usable with clients that support the OpenAI API.

- Supports both streaming and non-streaming responses, maintaining compatibility with Claude clients.

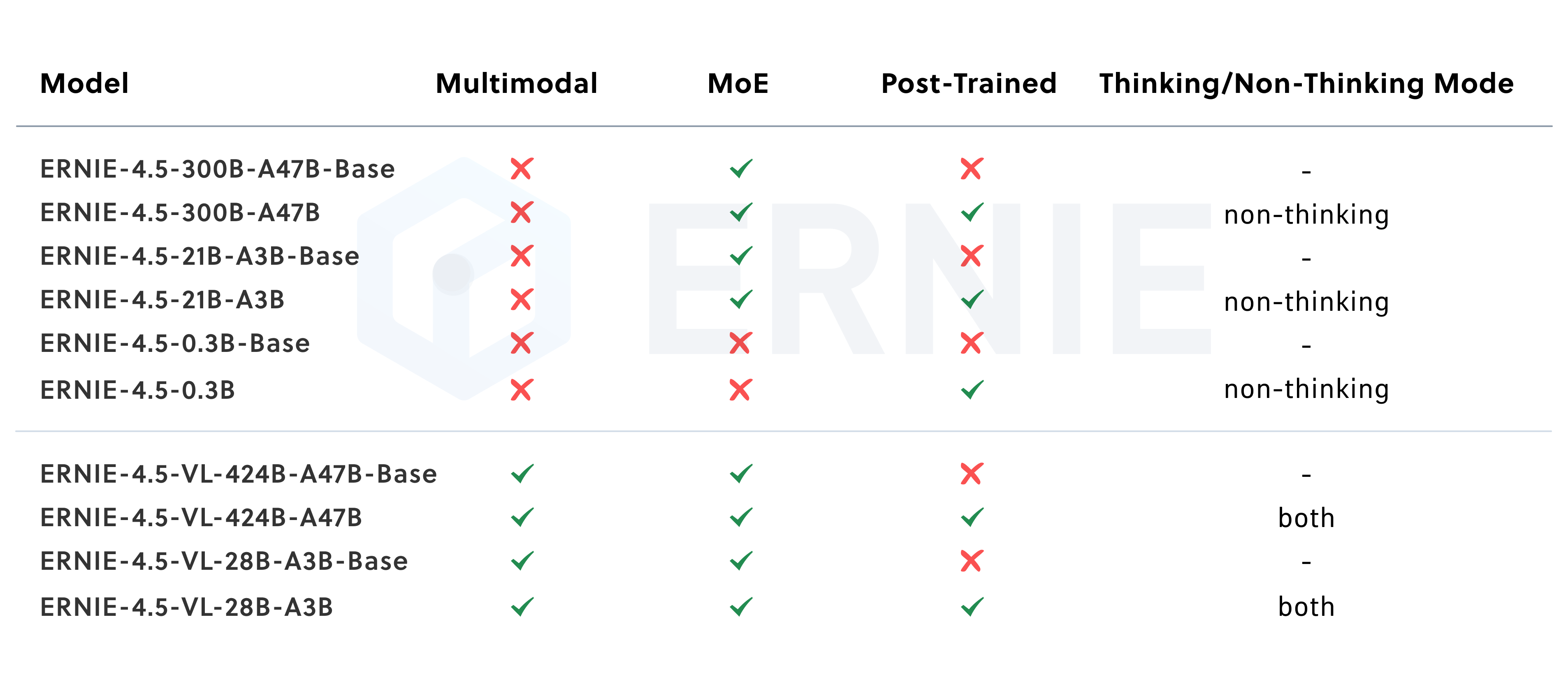

- Provides flexible model mapping with customizable model names (e.g. gemini-1.5-pro).

- Supports rapid deployment of the proxy service via Bash scripts.

- Lets users configure API keys and proxy service addresses, so the proxy can target different backend models.

- Open source and free, with transparent code that developers can customize and extend.

Usage Guide

Installation Process

To use Claude Proxy, follow the steps below to configure and run the project locally or on a server.

- Cloning Project Warehouse

Open a terminal and run the following command to clone the project locally:git clone https://github.com/tingxifa/claude_proxy.gitOnce the cloning is complete, go to the project directory:

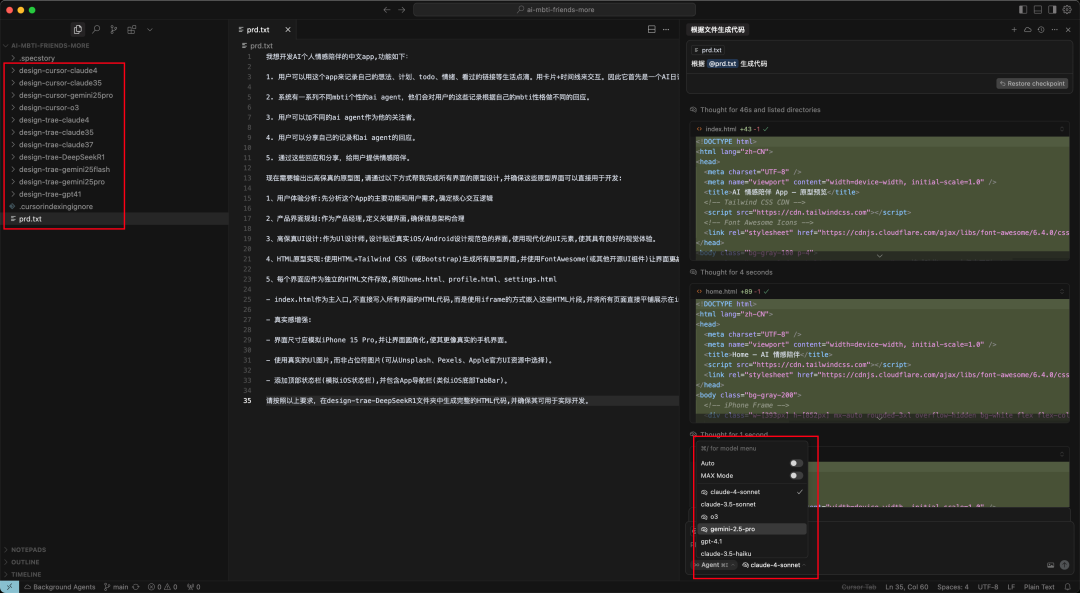

cd claude_proxy - Edit Configuration File

In the project directory, open the claude_proxy.sh script with a text editor (such as nano or vim):

    nano claude_proxy.sh

Find the part of the script labeled "Highlights: What to Replace" and change the following variables:

API_KEY: replace it with your API key, for example:

    API_KEY="your_api_key_here"

OPEN_AI_URL: set the proxy service address (without https://), for example:

    OPEN_AI_URL="api.example.com/v1"

OPEN_MODEL: specify the model name, for example:

    OPEN_MODEL="gemini-1.5-pro"

Save and exit the editor.
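Before starting the proxy, it can help to catch the most common mistakes in these three variables. The function below is a hypothetical sketch, not part of claude_proxy.sh: `check_config` only verifies that each variable is set, that API_KEY is no longer the example placeholder, and that OPEN_AI_URL omits the https:// prefix as instructed above.

```bash
#!/usr/bin/env bash
# Hypothetical preflight check (not part of claude_proxy.sh): validates the
# three variables this guide asks you to edit. Returns non-zero on any problem.
check_config() {
  local status=0

  # An empty key, or the placeholder from the example, cannot work upstream.
  if [[ -z "${API_KEY:-}" || "${API_KEY}" == "your_api_key_here" ]]; then
    echo "API_KEY is empty or still set to the placeholder" >&2
    status=1
  fi

  # The guide asks for the address without the https:// prefix.
  if [[ -z "${OPEN_AI_URL:-}" ]]; then
    echo "OPEN_AI_URL is empty" >&2
    status=1
  elif [[ "${OPEN_AI_URL}" == https://* ]]; then
    echo "OPEN_AI_URL should not include https://" >&2
    status=1
  fi

  if [[ -z "${OPEN_MODEL:-}" ]]; then
    echo "OPEN_MODEL is empty" >&2
    status=1
  fi

  return "$status"
}
```

With the variables set in the current shell, `check_config && echo "configuration looks OK"` reports each problem on stderr and prints the message only when all checks pass.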

- Add Execution Permissions

Make the script executable:

    chmod +x claude_proxy.sh

- Run the Proxy Service

Run the script to start the proxy service:

    ./claude_proxy.sh

Once it starts successfully, the proxy service begins listening and is ready to process Claude API requests.

Usage

Once the configuration is complete, users can invoke the Claude model via the command line or a client that supports the OpenAI API. Below is the procedure:

- Command-Line Call

In a terminal, use the curl command to test the proxy service, for example:

    curl http://localhost:port/v1/chat/completions \
      -H "Content-Type: application/json" \
      -H "Authorization: Bearer your_api_key_here" \
      -d '{
        "model": "gemini-1.5-pro",
        "messages": [
          {"role": "system", "content": "You are an intelligent assistant"},
          {"role": "user", "content": "Please tell me the weather today"}
        ]
      }'

This command sends a request to the proxy, which converts the Claude API request to the OpenAI format and returns a response.
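To check that the endpoint answers without reading the full JSON reply, the same request can be wrapped in a small helper that prints only the HTTP status code. `proxy_status` is a hypothetical name; the URL, key and model are passed as arguments, and `port` in the usage below stays a placeholder for whatever port your proxy listens on.

```bash
#!/usr/bin/env bash
# Hypothetical smoke test: send a minimal chat request and print only the
# HTTP status code that comes back (curl prints 000 if it cannot connect).
proxy_status() {
  local url="$1" key="$2" model="$3"
  curl -s -o /dev/null -w '%{http_code}' "$url" \
    -H "Content-Type: application/json" \
    -H "Authorization: Bearer ${key}" \
    -d "{\"model\": \"${model}\", \"messages\": [{\"role\": \"user\", \"content\": \"ping\"}]}"
}
```

Usage: `proxy_status http://localhost:port/v1/chat/completions your_api_key_here gemini-1.5-pro`. A 200 indicates success, a 401 usually means the API key was rejected, and 000 means curl could not connect at all (for example, because the proxy is not running).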

- Using Front-End Tools

Claude Proxy is compatible with front-end tools that support the OpenAI API (such as LibreChat or SillyTavern). In the front-end configuration, set the API address to the URL of the proxy service (e.g. http://localhost:port/v1) and enter your API key. The front-end tool can then call the Claude model through the proxy.

- Streaming Response Support

If you need real-time streaming responses (for example, in a chat application), make sure the front-end or client supports Server-Sent Events (SSE). The proxy handles streaming data automatically to maintain compatibility with Claude clients.
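When streaming is requested, OpenAI-compatible endpoints send the reply as SSE lines of the form `data: {...}` and end the stream with `data: [DONE]`. The sketch below assumes the proxy emits that OpenAI-style framing; `read_sse_data` is a hypothetical helper that reads such a stream on stdin and prints each JSON payload on its own line.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the payload of every "data:" line in an SSE
# stream read from stdin, stopping at the OpenAI-style "data: [DONE]" marker.
read_sse_data() {
  local line
  while IFS= read -r line; do
    line=${line%$'\r'}              # tolerate CRLF line endings
    case "$line" in
      "data: [DONE]") break ;;      # end of the stream
      data:*)
        line=${line#data:}          # drop the field name
        printf '%s\n' "${line# }"   # drop one optional leading space
        ;;
    esac                            # blank separator lines are ignored
  done
}
```

Usage: add `"stream": true` to the request body and pipe curl into the helper, e.g. `curl -N http://localhost:port/v1/chat/completions -H "Content-Type: application/json" -H "Authorization: Bearer your_api_key_here" -d '{"model": "gemini-1.5-pro", "stream": true, "messages": [{"role": "user", "content": "Hello"}]}' | read_sse_data`. The `-N` flag turns off curl's output buffering so chunks appear as they arrive.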

Featured Functions

- Model Mapping

Users can customize the model mapping to suit their needs, for example mapping Claude's Haiku model to gpt-4o-mini or a Sonnet model to gemini-1.5-pro. This is done by modifying the OPEN_MODEL variable, for example:

    OPEN_MODEL="gpt-4o"

After you re-run the script, the proxy processes requests using the specified model.
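The guide configures the mapping through the single OPEN_MODEL variable. If you wanted the per-tier mapping described above (Haiku to gpt-4o-mini, Sonnet to gemini-1.5-pro), one way to express it in Bash is a case statement on the requested model name. This is a hypothetical extension, not code from claude_proxy.sh; the name `map_model` and the gpt-4o fallback are illustrative choices.

```bash
#!/usr/bin/env bash
# Hypothetical per-tier model mapping (not part of claude_proxy.sh).
# Maps the Claude model name a client requests to a backend model name.
map_model() {
  local requested="$1"
  case "$requested" in
    *haiku*)  echo "gpt-4o-mini" ;;             # Claude Haiku requests
    *sonnet*) echo "gemini-1.5-pro" ;;          # Claude Sonnet requests
    *)        echo "${OPEN_MODEL:-gpt-4o}" ;;   # anything else: OPEN_MODEL, else gpt-4o
  esac
}
```

For example, `map_model claude-3-5-haiku-20241022` prints `gpt-4o-mini`. Matching on substrings keeps the mapping stable across dated model versions, at the cost of being less precise than an exact-name table.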

- Multi-Backend Support

Claude Proxy supports connecting to multiple backends (e.g. OpenAI, Gemini, or local models) by modifying the OPEN_AI_URL and API_KEY variables. For example, to connect to a local Ollama model:

    OPEN_AI_URL="http://localhost:11434/v1"
    API_KEY="dummy-key"

- Debugging and Logging

When the script is running, the proxy prints logs to the terminal with details of requests and responses. If you run into problems, check the log to locate errors such as an invalid API key or a wrong model name.
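To make the terminal log easier to search, it can be captured to a file with `tee` and scanned for typical failure markers. The helper below is a hypothetical sketch: the proxy's actual log format is not documented here, so the patterns (an HTTP 401 and the OpenAI-style error codes `invalid_api_key` and `model_not_found`) are guesses at what an invalid key or wrong model name might look like.

```bash
#!/usr/bin/env bash
# Hypothetical helper: list log lines that look like key or model errors.
# The patterns are assumptions, not confirmed log lines from claude_proxy.sh.
scan_proxy_log() {
  local log_file="$1"
  # -n prefixes each match with its line number; -E enables | alternation.
  # Like grep, this returns non-zero when nothing matches.
  grep -nE '401|invalid_api_key|model_not_found' "$log_file"
}
```

Usage: start the proxy with `./claude_proxy.sh 2>&1 | tee proxy.log` so the log still appears in the terminal, then run `scan_proxy_log proxy.log` from another terminal.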

Caveats

- Make sure the network connection is stable, since the proxy service needs to reach external APIs.

- Store API keys securely to avoid leaks.

- If you use a local model (e.g. Ollama), make sure the local server is running and listening on the correct port.
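For the last point, a quick way to confirm that a local server such as Ollama is accepting connections is to try opening a TCP socket to it. This sketch relies on Bash's built-in `/dev/tcp` redirection, so it must run under bash rather than sh; `port_is_open` is a hypothetical helper name, and 11434 is the Ollama port used in the multi-backend example.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed if something accepts TCP connections on
# host:port. Uses bash's /dev/tcp pseudo-path, so it requires bash.
port_is_open() {
  local host="$1" port="$2"
  # The subshell opens file descriptor 3 to host:port; it is closed when the
  # subshell exits. A refused connection makes the subshell fail.
  (exec 3<>"/dev/tcp/${host}/${port}") 2>/dev/null
}
```

Usage: `port_is_open localhost 11434 && echo "Ollama is listening"`. For unreachable remote hosts the attempt can block until the system's TCP timeout, so this is best suited to local checks.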

With these steps, you can quickly configure and run Claude Proxy to convert Claude API requests to the OpenAI API format for a variety of development scenarios.

Application Scenarios

- Cross-Platform Model Calling

Developers who want to use Claude models in tools that support the OpenAI API can use Claude Proxy to convert API requests to the OpenAI format, so Claude can be called without modifying front-end code.

- Local Development Testing

Test the performance of Claude models in a local environment. Developers can configure the proxy to connect to local models such as Ollama for low-cost development and debugging.

- Chat Application Integration

Chat app developers may want to integrate the Claude model while their front-end only supports the OpenAI API. Claude Proxy provides a proxy service so that real-time chat runs smoothly.

- Model Switching and Optimization

Organizations that need to switch models based on cost or performance requirements (e.g. from Claude to Gemini) can use Claude Proxy's model mapping to switch quickly and reduce development costs.

QA

- What models does Claude Proxy support?

Any OpenAI-compatible model, such as gpt-4o or gemini-1.5-pro. Set the model with the OPEN_MODEL variable.

- How should I handle a leaked API key?

If the key is compromised, replace it with a new one immediately and update the API_KEY variable in claude_proxy.sh.

- Does the proxy service support streaming responses?

Yes. Claude Proxy supports both streaming and non-streaming responses and works with Claude clients that rely on SSE.

- Do I need an Anthropic API key?

Not necessarily. If you use another backend (such as OpenAI or Gemini), you only need to provide the API key for that backend.
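For the leaked-key answer above, the API_KEY update can be scripted with sed. This is a hypothetical helper, assuming the variable is assigned at the start of a line as `API_KEY="..."` (as in the configuration example) and that the new key contains no `|`, `&` or `\` characters, which sed would treat specially in this command.

```bash
#!/usr/bin/env bash
# Hypothetical helper: rewrite the API_KEY="..." assignment in a script file.
# Assumes the assignment starts at the beginning of a line and that the new
# key contains no '|', '&' or '\' characters.
rotate_api_key() {
  local script="$1" new_key="$2"
  # -i.bak edits the file in place and keeps a backup; works with GNU and BSD sed.
  sed -i.bak "s|^API_KEY=.*|API_KEY=\"${new_key}\"|" "$script"
}
```

Usage: `rotate_api_key claude_proxy.sh "your_new_key"`, then re-run the script. The backup claude_proxy.sh.bak still contains the old key, so delete it once the change is confirmed.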